I'm currently working on a deep learning project involving DICOM images. Long story short, in this project I have X-ray images of human pelvises and I'm trying to predict if there are some pathological changes on the hip joint (for example: cysts, osteophytes, sclerotisation, ...).

One of my problems is that the data was gathered from different hospitals and it has different properties (distributions). My focus was mostly on:

- Modality: I have Computed Radiography (CR) and Digital Radiography (DX) X-rays

- Photometric Interpretation: I have X-rays saved in RGB, MONOCHROME1, and MONOCHROME2

I have 3 main groups of images:

If I understand correctly, I can't really do anything about Modality, but for Photometric Interpretation I've converted the RGB X-rays to grayscale, min-max normalized all images to the range [0, 1], and inverted the MONOCHROME1 pixel values:

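```python
import numpy as np
from skimage.color import rgb2gray

# `dicom` is a dataset already loaded with pydicom
pixel_data = dicom.pixel_array
pi = dicom['PhotometricInterpretation'].value

# Collapse RGB-encoded X-rays to a single grayscale channel
if pi == 'RGB':
    pixel_data = rgb2gray(pixel_data)

# Min-max scale to [0, 1]
pixel_data = (pixel_data - pixel_data.min()) / (pixel_data.max() - pixel_data.min())

# MONOCHROME1 stores bright structures as low values, so invert it to match MONOCHROME2
if pi == 'MONOCHROME1':
    pixel_data = 1 - pixel_data
```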
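As a sanity check on the steps above, here is a minimal sketch of the same normalization wrapped in a function. The function name `to_unit_grayscale` is hypothetical, and plain NumPy channel weights (the ones `skimage.color.rgb2gray` documents) are swapped in for `rgb2gray` so the snippet only needs NumPy. It also adds a guard for constant images, which the original snippet does not have, since those would otherwise divide by zero.

```python
import numpy as np

# Channel weights documented for skimage.color.rgb2gray
LUMA_WEIGHTS = np.array([0.2125, 0.7154, 0.0721])

def to_unit_grayscale(pixel_data, photometric_interpretation):
    """Return a float image in [0, 1] where high values are bright."""
    img = np.asarray(pixel_data, dtype=np.float64)
    if photometric_interpretation == "RGB":
        img = img @ LUMA_WEIGHTS  # (H, W, 3) -> (H, W)
    lo, hi = img.min(), img.max()
    if hi == lo:
        # Constant image: avoid dividing by zero (assumed fallback: all zeros)
        return np.zeros_like(img)
    img = (img - lo) / (hi - lo)
    if photometric_interpretation == "MONOCHROME1":
        img = 1.0 - img  # flip polarity so it matches MONOCHROME2
    return img

# The same toy picture stored with both polarities
mono2 = np.array([[0, 100], [200, 400]], dtype=np.uint16)
mono1 = mono2.max() - mono2
a = to_unit_grayscale(mono2, "MONOCHROME2")
b = to_unit_grayscale(mono1, "MONOCHROME1")
print(np.allclose(a, b))
```

If the inversion is doing its job, the two toy arrays should come out identical after normalization, which is what the final comparison checks.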

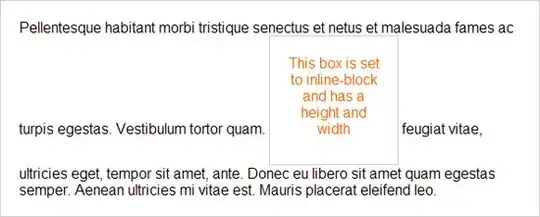

After that, I applied the CLAHE algorithm to each image. Three sample images (CR-RGB, DX-MONO2, CX-MONO1) before and after preprocessing look like this:
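For reference, the CLAHE step could be sketched as below. The post does not say which CLAHE implementation was used, so scikit-image's `exposure.equalize_adapthist` is an assumption here, as are the wrapper name `apply_clahe` and the `clip_limit` value (the library default). The input is assumed to be a 2-D float image already scaled to [0, 1].

```python
import numpy as np
from skimage import exposure

def apply_clahe(image, clip_limit=0.01):
    """Apply CLAHE to a 2-D float image already scaled to [0, 1]."""
    # Tile size is left at the scikit-image default (1/8 of each image dimension)
    return exposure.equalize_adapthist(image, clip_limit=clip_limit)

# Random stand-in for a normalized X-ray (assumption: real data would come from the DICOM)
rng = np.random.default_rng(0)
demo = rng.random((64, 64))
enhanced = apply_clahe(demo)
print(enhanced.shape, enhanced.dtype)
```

The output stays a float image in [0, 1], so it can be fed straight into the cropping step.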

The last step before modeling is to crop the hip joints from the X-rays, because all the changes I'm trying to predict are located in a small region, so I don't need the whole X-ray. (I'm planning to build a localization model that finds the bounding boxes of the hip joints, with classification models for the changes on top of that.) Three sample hip joints (CR-RGB, DX-MONO2, CX-MONO1) after cropping look like this:
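The cropping step could look roughly like the sketch below. The helper name `crop_box` and the `(x_min, y_min, x_max, y_max)` pixel-coordinate box format are assumptions for illustration, since the localization model is not built yet and its output format is not specified. The box is clipped to the image bounds so a slightly-off prediction still gives a valid crop.

```python
import numpy as np

def crop_box(image, box):
    """Crop `image` to `box` = (x_min, y_min, x_max, y_max) in pixel coordinates."""
    height, width = image.shape[:2]
    x_min, y_min, x_max, y_max = box
    # Clip the box to the image so out-of-range predictions do not wrap around
    x_min, x_max = max(0, x_min), min(width, x_max)
    y_min, y_max = max(0, y_min), min(height, y_max)
    if x_min >= x_max or y_min >= y_max:
        raise ValueError("bounding box does not overlap the image")
    return image[y_min:y_max, x_min:x_max]

# Toy 10x10 "X-ray" and a box that runs past the right edge
xray = np.arange(100).reshape(10, 10)
hip = crop_box(xray, (6, 2, 14, 5))
print(hip.shape)
```

Rows index the y axis and columns the x axis, which is why the slice is `image[y_min:y_max, x_min:x_max]`.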

My questions are:

- Is there anything wrong with my preprocessing steps?

- Should I add anything to the preprocessing?

It's my first time working with DICOMs and any help would be appreciated.