Thanks in advance,

I have a simple Simulink model that takes a 32-bit number in IEEE-754 format and adds it to itself, producing a 32-bit IEEE-754 output. I used MATLAB's HDL Coder add-on to generate Verilog HDL code for it. When I wrote a testbench for the generated code, I measured a latency of 100 ns. Is there a way to reduce this further, to around 10 ns?

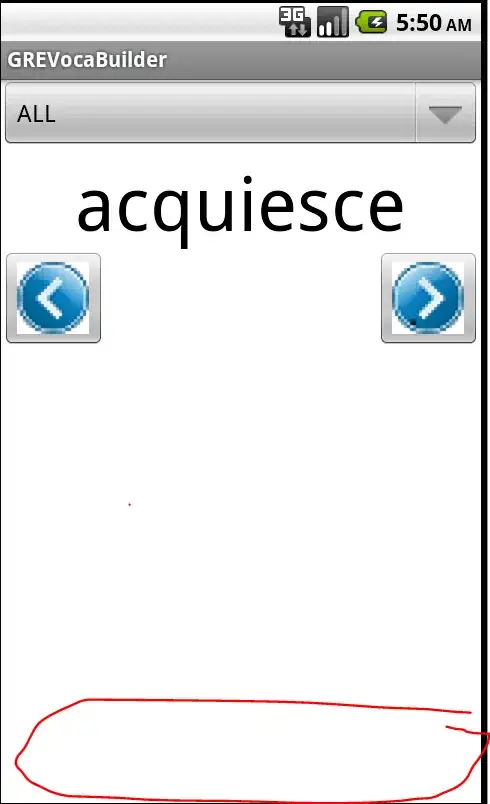

Below I am attaching the Simulink model I used to generate the Verilog HDL code, along with the generated Verilog files. I am also attaching a screenshot of the simulation in case you would rather not run the scripts yourself.
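For anyone who wants to check the testbench values without running the model, here is a minimal Python sketch of a reference ("golden") model for the operation described above: it takes a 32-bit IEEE-754 bit pattern, adds the value to itself, and returns the 32-bit result. The function names (`float_to_bits`, `bits_to_float`, `add_self_bits`) are my own and are not part of the HDL Coder output. The overflow-to-infinity handling is an assumption about what the hardware should do, and NaN payloads may not match the generated design bit for bit.

```python
import struct


def float_to_bits(x: float) -> int:
    """Pack a Python float as IEEE-754 single precision and return the 32-bit pattern."""
    return struct.unpack(">I", struct.pack(">f", x))[0]


def bits_to_float(bits: int) -> float:
    """Interpret a 32-bit pattern as an IEEE-754 single-precision value."""
    return struct.unpack(">f", struct.pack(">I", bits & 0xFFFFFFFF))[0]


def add_self_bits(bits: int) -> int:
    """Return the single-precision bit pattern of x + x for the input pattern.

    Doubling is exact in double precision, so the only rounding concern is
    overflow past the single-precision range, which is mapped to +/- infinity
    here (an assumption about the expected hardware behaviour).
    """
    x = bits_to_float(bits)
    total = x + x
    try:
        return float_to_bits(total)
    except OverflowError:
        # struct refuses to pack values beyond the float32 range; saturate to infinity.
        return 0xFF800000 if total < 0 else 0x7F800000


if __name__ == "__main__":
    # 1.5 as single precision, added to itself
    print(hex(add_self_bits(0x3FC00000)))
```

The hex value printed for a given input can be compared against the output seen in the simulation waveform once the pipeline latency has elapsed.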