I am using spark to join a static dataset I fetch from azure storage and streaming dataset I get from eventhub. I have not used broadcast join anywhere. I tried df.explain() after joining, it shows sortmerge join is happening. I am not sure why I am getting error related to Broadcast Hash join.

java.lang.OutOfMemoryError: Not enough memory to build and broadcast the table to all worker nodes. As a workaround, you can either disable broadcast by setting spark.sql.autoBroadcastJoinThreshold to -1 or increase the spark driver memory by setting spark.driver.memory to a higher value

...

...

Exception in thread "spark-listener-group-shared" java.lang.OutOfMemoryError: Java heap space

...

...

does spark broadcast everything it gets from event hubs?

This is how my program looks like

//##read stream from event hub

process(stream)

def process(stream: DataFrame){

val firstDataSet = getFirstDataSet()

firstDataSet.persist()

val joined = stream.join(

firstDataSet,

stream("joinId") === firstDataSet("joinId")

)

//##write joined to event hub

}

def getFirstDataSet(){

//##read first from azure storage

val firstDataSet = first.filter(

condition1 &&

condition 2

)

}

update: It looks like JVM Out of Memory error and not related to broadcast. https://issues.apache.org/jira/plugins/servlet/mobile#issue/SPARK-24912

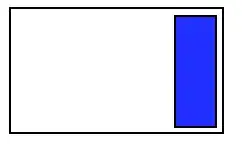

I tried to check the driver and executor heap usage after GC using gceasy.io:

Executor looked good

Driver memory consumption after GC looks constantly increasing

Driver memory consumption after GC looks constantly increasing

I analyzed heap dump and here are top 15 entries during Out Of Memory:

Looks like char array is accumulating in the driver. I am not sure what might be causing it to accumulate the char array.