I'm writing a ray tracer (mostly for fun). I've written one in the past and have spent a decent amount of time searching, but no tutorial seems to explain how to calculate the eye rays for a perspective projection without using matrices.

I believe the last time I did it was by rotating the camera direction vector x/y degrees using a Quaternion class to get each eye vector, which is potentially inefficient. That was in C++ and I'm doing this one in C#, though that's not so important.

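Pseudocode (assuming V * Q = transform operation):

    yDiv = fovy / height
    xDiv = fovx / width

    for x = 0 to width - 1
        for y = 0 to height - 1
            // angular offset of this pixel from the centre of the screen
            xAng = (x - width / 2) * xDiv
            yAng = (y - height / 2) * yDiv

            // rotation about the up vector (horizontal) and right vector (vertical)
            Q1 = quaternion(up vector, xAng)
            Q2 = quaternion(camera right vector, yAng)
            Q3 = mult(Q1, Q2)

            pixelRay = transform(Q3, camera direction)
            raytrace pixelRay
        next
    next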

I think the actual problem with this is that stepping in equal angles simulates a spherical screen surface, not a flat screen surface.
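To illustrate what I mean, here is a small Python sketch (Python only for brevity; the function name `plane_hits`, the 90 degree field of view and the 4-pixel width are made up for the example). It steps across the field of view in equal angles, which is the idea behind the pseudocode above, and works out where each ray would cross a flat screen one unit in front of the camera.

```python
import math

def plane_hits(fov_deg, pixels):
    """Where rays spaced by equal angles cross a flat screen at distance 1."""
    step = math.radians(fov_deg) / pixels  # equal angular step per pixel
    hits = []
    for i in range(pixels + 1):
        angle = (i - pixels / 2) * step    # angle measured from the view direction
        hits.append(math.tan(angle))       # horizontal offset on the flat screen
    return hits

hits = plane_hits(90.0, 4)
gaps = [b - a for a, b in zip(hits, hits[1:])]
print([round(g, 3) for g in gaps])  # [0.586, 0.414, 0.414, 0.586]
```

If the screen were really flat with evenly sized pixels, every gap would be the same (0.5 here). Instead the gaps grow toward the edges, which is why I suspect the angle-based approach distorts the image.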

Mind you, whilst I know how and why to use cross products, dot products, matrices and such, my actual 3D mathematics problem solving skills aren't fantastic.

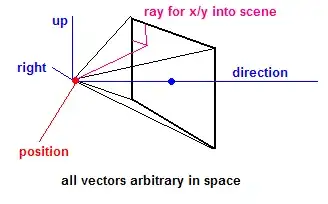

So given:

- Camera position, direction and up-vector

- Field of view

- Screen pixels and/or sub-sampling divisions

What is the actual method to produce an eye ray for x/y pixel coordinates for a raytracer?

To clarify: I know exactly what I'm trying to calculate, I'm just not great at coming up with the 3D math to compute it, and no ray tracer code I can find seems to include the code needed to compute the eye ray for an individual pixel.