It looks like the tempdisagg package doesn't support monthly-to-daily disaggregation. From the `td()` help file, on the `to` argument:

> high-frequency destination frequency as a character string ("quarterly" or "monthly") or as a scalar (e.g. 2, 4, 7, 12). If the input series are ts objects, the argument is necessary if no indicator is given. If the input series are vectors, to must be a scalar indicating the frequency ratio.

Your error message "'to' argument: unknown character string" appears because `to =` only accepts "quarterly" or "monthly" as character strings.

There is some discussion of disaggregating monthly data to daily on the stats StackExchange (Cross Validated) here: https://stats.stackexchange.com/questions/258810/disaggregate-monthly-forecasts-into-daily-data

After some searching, it doesn't look like anyone consistently disaggregates monthly data to daily. The tempdisagg package seems to handle what most others have found feasible: annual to quarterly or monthly, and other conversions where each low-frequency period always contains the same whole number of high-frequency periods.
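To make the "consistent multiples" point concrete: a year always has 4 quarters or 12 months, and a quarter always has 3 months, so a single scalar ratio works. A month, however, has anywhere from 28 to 31 days, so there is no single scalar `to` for monthly to daily, which is presumably why `td()` doesn't offer it. A quick base-R check, using 2019 purely as an illustrative year:

```r
# Number of days in each month of 2019, using base R only
month_starts <- seq(as.Date("2019-01-01"), by = "month", length.out = 13)
days_per_month <- as.integer(diff(month_starts))
names(days_per_month) <- month.abb
print(days_per_month)

# Months per quarter (3) and per year (12) never change,
# but days per month take several values (28, 30 and 31 in 2019)
print(table(days_per_month))
```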

Eric, I've added a script below that should illustrate what you're trying to do, as I understand it.

Here we use real pricing data to move from daily prices -> monthly prices -> monthly returns -> average daily returns.
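If you want to see the mechanics without downloading anything, here is a base-R sketch of the same steps. It is not the script below: the prices are a made-up random walk, months are built from calendar days rather than trading days, and `monthly_returns()` is a hypothetical helper written for this sketch (not a quantmod function).

```r
# Base-R sketch: daily prices -> monthly returns -> average daily returns.
# The prices are synthetic (a made-up random walk), for illustration only.
set.seed(42)
days <- seq(as.Date("2019-01-01"), as.Date("2019-12-31"), by = "day")
prices <- 100 * cumprod(1 + rnorm(length(days), mean = 0.0005, sd = 0.01))
month_id <- format(days, "%Y-%m")

# Hypothetical helper: simple monthly returns from month-end prices.
# The first month is measured from the first day's price (a choice made here).
monthly_returns <- function(p, id) {
  month_end <- as.vector(tapply(p, id, function(x) x[length(x)]))
  start_price <- c(p[1], month_end[-length(month_end)])
  r <- month_end / start_price - 1
  names(r) <- sort(unique(id))
  r
}

r <- monthly_returns(prices, month_id)

# Rescaling prices by a constant (as scale(center = FALSE) does) leaves returns unchanged
r_scaled <- monthly_returns(as.vector(scale(prices, center = FALSE)), month_id)
print(all.equal(r, r_scaled))  # TRUE

# Spread each monthly return evenly over the days of its month
days_in_each_month <- as.vector(table(month_id))
daily <- rep(r / days_in_each_month, times = days_in_each_month)

# The daily values add back up to each month's return
print(all.equal(as.vector(tapply(daily, month_id, sum)), as.vector(r)))  # TRUE
```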

```r
library(quantmod)
library(xts)
library(zoo)
library(tidyverse)
library(lubridate)

# Get price data to use as an example
getSymbols('MSFT')

# This data has more information than we want; keep only the adjusted close
msft <- Ad(MSFT)

# Add a new column that acts as an 'indexed price' rather than
# actual price data. This shows that calculated returns
# don't depend on real prices; data indexed to a value is fine.
msft$indexed <- scale(msft$MSFT.Adjusted, center = FALSE)

# Split into two datasets
msft2 <- msft$indexed
msft$indexed <- NULL
# msft contains only closing data, msft2 only contains scaled data (not actual prices)

# Move from daily data to monthly, to replicate the question's situation
a <- monthlyReturn(msft)
b <- monthlyReturn(msft2)

# Prove that returns based on rescaled (indexed) data and on price data are the same
all.equal(a, b)

# Subset to a single year
a <- a['2019']
b <- b['2019']

# Add a column with the number of days in each month (taken from the xts index dates)
a$dim <- days_in_month(index(a))

# Average daily return within each month
a$day_avg <- a$monthly.returns / a$dim

# Repeat each month's daily average once for every day of that month
day_avgs <- data.frame(day_avg = rep(as.numeric(a$day_avg), times = as.integer(a$dim)))

# Daily-averages time series built from the monthly returns
z <- zoo(day_avgs$day_avg,
         seq(from = as.Date("2019-01-01"),
             to = as.Date("2019-12-31"),
             by = 1)) %>%
  as.xts()

# Chart comparing the monthly returns with the daily averages
PerformanceAnalytics::charts.PerformanceSummary(cbind(a$monthly.returns, z))
```

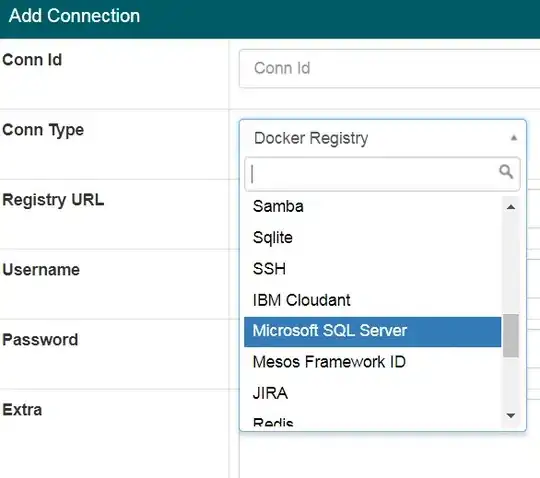

Here are three charts showing (1) monthly returns only, (2) the daily averages derived from the monthly returns, and (3) both together. Because the two series are nearly identical, they overplot in the third chart and only one line is visible.
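Strictly speaking, the two series only match approximately once compounded. The script divides each monthly return by the number of days (an arithmetic average), while a cumulative-return chart compounds the daily values (`charts.PerformanceSummary()` uses `geometric = TRUE` by default, as far as I know). Compounding r/n over n days gives slightly more than r; the constant daily rate that compounds back to r exactly is (1 + r)^(1/n) - 1. A small illustration with a made-up 5% return in a 31-day month:

```r
# Made-up example: a 5% monthly return in a 31-day month
r_month <- 0.05
n_days <- 31

# Arithmetic average per day, as in the script above
arith_daily <- r_month / n_days
# Geometric (compounding) daily rate that reproduces r_month exactly
geom_daily <- (1 + r_month)^(1 / n_days) - 1

# Compound each daily rate back over the whole month
print((1 + arith_daily)^n_days - 1)  # slightly above 0.05
print((1 + geom_daily)^n_days - 1)   # 0.05, up to floating-point rounding
```

For monthly returns of a few percent the gap is small, which is why the lines overplot; if the daily series must compound to the monthly figure exactly, the geometric rate is the one to spread across the days.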