I'm new to Databricks and need help writing a pandas DataFrame to the Databricks local file system.

I searched Google but could not find a similar case. I also tried the help guide provided by Databricks (attached), but that did not work either. I then tried the variations below. Several of them run without errors, but the file is never written to the directory (I expect wrtdftodbfs.txt to be created).

df.to_csv("/dbfs/FileStore/NJ/wrtdftodbfs.txt")

Result: throws the error below

FileNotFoundError: [Errno 2] No such file or directory: '/dbfs/FileStore/NJ/wrtdftodbfs.txt'

df.to_csv("\\dbfs\\FileStore\\NJ\\wrtdftodbfs.txt")

Result: No errors, but nothing written either

df.to_csv("dbfs\\FileStore\\NJ\\wrtdftodbfs.txt")

Result: No errors, but nothing written either

df.to_csv(path ="\\dbfs\\FileStore\\NJ\\",file="wrtdftodbfs.txt")

Result: TypeError: to_csv() got an unexpected keyword argument 'path'

df.to_csv("dbfs:\\FileStore\\NJ\\wrtdftodbfs.txt")

Result: No errors, but nothing written either

df.to_csv("dbfs:\\dbfs\\FileStore\\NJ\\wrtdftodbfs.txt")

Result: No errors, but nothing written either

The directory exists, and files created manually show up, but pandas to_csv neither writes the file nor raises an error.
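One possible explanation for the "no errors, nothing written" results, assuming the notebook runs on a Linux driver: on Linux a backslash is an ordinary filename character, not a path separator, so a path such as "dbfs\\FileStore\\NJ\\wrtdftodbfs.txt" would name a single file in the current working directory. The sketch below reproduces that with plain open() in a scratch directory (pandas hands a string path to the same OS call); the helper name write_with_backslashes is hypothetical.

```python
import os
import tempfile


def write_with_backslashes(workdir):
    """Write to a backslash-style relative path inside workdir and
    return the names of the entries that end up in that directory."""
    previous = os.getcwd()
    os.chdir(workdir)
    try:
        # Same shape as the to_csv attempts above, using plain open().
        with open("dbfs\\FileStore\\NJ\\wrtdftodbfs.txt", "w") as handle:
            handle.write("a,b\n1,2\n")
        return os.listdir(".")
    finally:
        # Restore the working directory before the scratch dir is removed.
        os.chdir(previous)


with tempfile.TemporaryDirectory() as scratch:
    created = write_with_backslashes(scratch)
    # On Linux this is expected to show one file whose name contains the backslashes.
    print(created)
```

If this is what happened, running os.listdir(os.getcwd()) in the notebook should show files with backslashes in their names.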

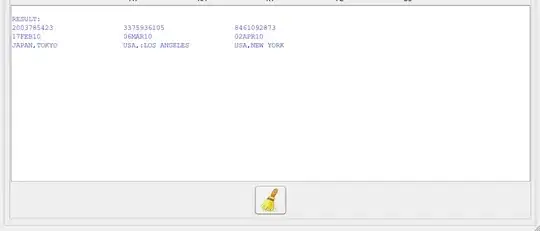

dbutils.fs.put("/dbfs/FileStore/NJ/tst.txt","Testing file creation and existence")

dbutils.fs.ls("dbfs/FileStore/NJ")

Out[186]: [FileInfo(path='dbfs:/dbfs/FileStore/NJ/tst.txt', name='tst.txt', size=35)]
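The listing above reports the path as dbfs:/dbfs/FileStore/NJ/tst.txt, which suggests dbutils treated "/dbfs/FileStore/NJ" as a path inside the DBFS root rather than as the local mount point. Assuming the usual convention that dbfs:/X is visible to local file APIs as /dbfs/X, a small sketch of that mapping follows; the function dbfs_uri_to_local and its mount parameter are hypothetical, for illustration only.

```python
def dbfs_uri_to_local(uri, mount="/dbfs"):
    """Translate a dbfs:/ URI into the local path under the assumed mount point."""
    prefix = "dbfs:"
    if not uri.startswith(prefix):
        raise ValueError(f"not a dbfs URI: {uri}")
    # Drop the scheme and leading slashes, then re-root under the mount.
    return mount + "/" + uri[len(prefix):].lstrip("/")


listed = "dbfs:/dbfs/FileStore/NJ/tst.txt"
print(dbfs_uri_to_local(listed))  # /dbfs/dbfs/FileStore/NJ/tst.txt
```

Under that assumption the test file lives in /dbfs/dbfs/FileStore/NJ locally, so /dbfs/FileStore/NJ may not exist yet, which would be consistent with the FileNotFoundError from the first to_csv attempt.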

I appreciate your time; pardon me if the details above are not clear enough.