I am having trouble understanding the Random Cut Forest (RCF) algorithm, particularly what input data it expects and what pre-processing should be done beforehand. For example, I have the following data/features (with example values) for about 500K records:

The results of my RCF model (trained on 500K records with 57 features: amount, 30 countries dummied, and 26 categories dummied) are extremely focused on the amount feature (e.g., all anomalies are above approx. 1000.00, with absolutely no variation based on the country or type).

I also normalized the amount field, and the results for that are not really that strong either. In fact, it's safe to say the results are terrible and I am clearly missing something here.

Overall, I am looking for some guidance on getting the features right (again: 1 amount field and 2 categorical fields dummied to 1 and 0, resulting in about 57 fields). I'm wondering if I am better off with something like k-means.
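To make the layout concrete, here is a minimal sketch of the encoding described above. The records, column order, and the `one_hot` helper are made up for illustration (with pandas, `pandas.get_dummies` would do the same job); on the real data the same layout gives 1 + 30 + 26 = 57 columns.

```python
# Hypothetical records: (amount, country, category). All values are made up.
records = [
    (120.50, "US", "meals"),
    (5000.00, "DE", "airfare"),
    (42.00, "US", "taxi"),
]


def one_hot(value, vocabulary):
    """Return a 0/1 list with a single 1.0 at the position of `value`."""
    return [1.0 if value == v else 0.0 for v in vocabulary]


# Fixed, sorted vocabularies so every row has the same column order.
countries = sorted({country for _, country, _ in records})
categories = sorted({category for _, _, category in records})

# Each row is [amount, country dummies..., category dummies...].
features = [
    [amount] + one_hot(country, countries) + one_hot(category, categories)
    for amount, country, category in records
]

for row in features:
    print(row)
```

Note that in this layout the amount column spans thousands while every dummy column only spans 0 to 1, which may be part of why the amount dominates.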

EDIT: Some context here... I am wondering:

1) Weighting - Is there a way to give more weight to certain variables (i.e., one of the categorical variables is more important than the other)? For example, I am using Country and Category as key attributes and want to give more weight to Category than to Country.

2) Context - How can I ensure outliers are considered in the context of their peers (the categorical data)? For example, a transaction of $5000 for an "airfare" expense is not an outlier for that category but would be for any other. I could create N separate models, but that would get messy and cumbersome, right?

I looked through most of the available documentation (https://docs.aws.amazon.com/sagemaker/latest/dg/rcf_how-it-works.html) and cannot find anything that describes this!
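For what it's worth, the two ideas above could be sketched as a pre-processing step rather than as N separate models. This is only a sketch under assumptions: the records are made up, `zscore_within_category` and `apply_weights` are hypothetical helpers, and the weighting rests on the assumption that RCF favors wider-ranged features when choosing a cut dimension, so treat the weights as something to experiment with rather than a documented knob.

```python
from collections import defaultdict
from statistics import mean, pstdev

# Hypothetical (amount, category) records; values are made up.
records = [
    (4800.0, "airfare"), (5000.0, "airfare"), (5200.0, "airfare"),
    (40.0, "meals"), (50.0, "meals"), (60.0, "meals"), (5000.0, "meals"),
]


def zscore_within_category(rows):
    """Standardize each amount against the mean/std of its own category."""
    by_category = defaultdict(list)
    for amount, category in rows:
        by_category[category].append(amount)
    stats = {c: (mean(v), pstdev(v)) for c, v in by_category.items()}

    scores = []
    for amount, category in rows:
        mu, sigma = stats[category]
        # A category with zero spread gets 0.0 instead of dividing by zero.
        scores.append((amount - mu) / sigma if sigma > 0 else 0.0)
    return scores


def apply_weights(row, weights):
    """Multiply each feature by a weight (assumed to change its influence)."""
    return [x * w for x, w in zip(row, weights)]


z = zscore_within_category(records)
for (amount, category), score in zip(records, z):
    print(f"{category:8s} {amount:8.2f} -> {score:+.2f}")

# Example: [context-scaled amount, category dummy, country dummy],
# with the category dummy stretched to twice the range of the country dummy.
weighted = apply_weights([z[6], 1.0, 1.0], [1.0, 2.0, 1.0])
```

With this scaling the same $5000 amount sits at the center of the "airfare" group but far from the center of "meals". The outlier itself inflates the standard deviation of its group, so a median-based scale might be a more robust choice.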

Thank you so much for your help in advance!

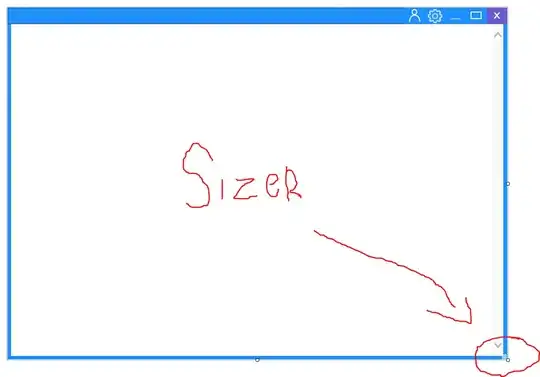

EDIT: Not sure it's critical at this point, when I don't even have semi-reasonable results, but I have used the following hyperparameters:

num_samples_per_tree=256,

num_trees=100