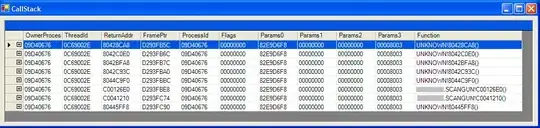

I am training a reinforcement learning agent on an episodic task of fixed episode length. I am tracking the training process by plotting the cumulative rewards over an episode. I am using tensorboard for plotting the rewards. I have trained my agent for 20M steps. So I believe the agent has been given enough time to train. The cumulative rewards for an episode can range from +132 to around -60. My plot with a smoothing of 0.999

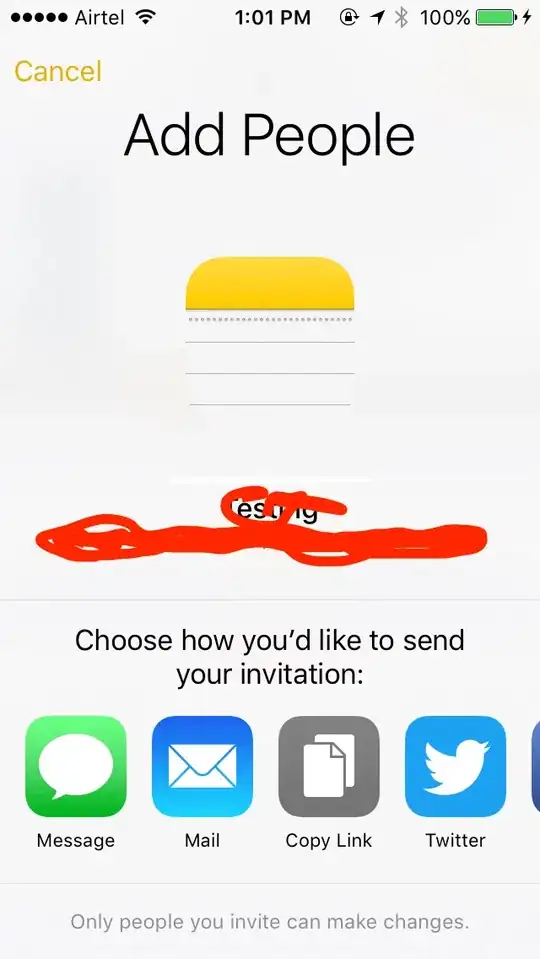

Over the episodes, I can see that my rewards have converged. But if I see the plot with smoothing of 0

There is a huge variation in the rewards. So should I consider that the agent has converged or not? Also I don't understand why is there such a huge variation in rewards even after so much of training?

Thanks.