I have a Spark Streaming job that reads and processes data from a Solace queue. I want to raise an alert if no data has been consumed in the last hour. Currently, the batch window is set to 1 minute. How can I add an alert that fires when no data is consumed continuously for an hour, so that the source can be notified?

-

Do you want to do it in PySpark or in Solace? – pissall Nov 27 '19 at 09:59

-

I want to handle it in my Spark code. I have a mailing service that can send the notification; I just need to know when to send it. What should I write in foreachRDD to handle the case where no data is reported? – Jyoti Dhiman Nov 27 '19 at 10:15

-

You can do something like this pseudo-code: `stream.foreachRDD(lambda rdd: do_something() if rdd.isEmpty() else None)`. Not sure it will work, but I think the answer lies in something like this – pissall Nov 27 '19 at 10:28

-

@pissall If I add a check on isEmpty(), won't it only check whether the current batch (of 1 minute) is empty, rather than whether no data has arrived continuously for an hour? – Jyoti Dhiman Nov 27 '19 at 11:25

2 Answers

You have several options to do that:

Add your implementation of a

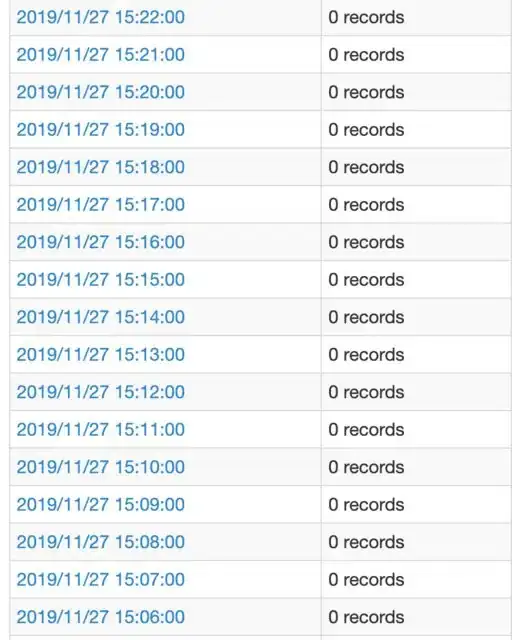

StreamListener(API) to the stream you are subscribed to. Then, override theonBatchCompletedto access theBatchInfothat will give you the batch time and size. With that info, you can track what happens and raise an alarm if no data has been received during a specific period.You also can use the REST api provided for monitoring, described here. You check it from the outside and raise the alarm if needed. For instance, it might be helpful to check

/applications/[app-id]/streaming/batches
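
The listener option could be sketched roughly as follows. This is only an illustration under some assumptions: `IdleAlertListener`, `maxIdleMs`, and the `alert` callback are hypothetical names, and it relies on `BatchInfo.numRecords` being reported correctly for your Solace receiver, which is worth verifying.

```scala
import org.apache.spark.streaming.scheduler.{StreamingListener, StreamingListenerBatchCompleted}

// Hypothetical listener: calls `alert` once when no records have arrived for `maxIdleMs`,
// and re-arms itself as soon as a non-empty batch completes.
class IdleAlertListener(maxIdleMs: Long, alert: () => Unit) extends StreamingListener {
  @volatile private var lastDataMs = System.currentTimeMillis()
  @volatile private var alerted = false

  override def onBatchCompleted(event: StreamingListenerBatchCompleted): Unit = {
    val info = event.batchInfo
    val batchMs = info.batchTime.milliseconds
    if (info.numRecords > 0) {
      // Data arrived: remember when, and allow a future alert again
      lastDataMs = batchMs
      alerted = false
    } else if (!alerted && batchMs - lastDataMs >= maxIdleMs) {
      // Idle for too long: alert once per idle period
      alert()
      alerted = true
    }
  }
}

// Usage, assuming `ssc` is your StreamingContext and `sendMailAlert` is your mailing hook:
// ssc.addStreamingListener(new IdleAlertListener(60 * 60 * 1000L, () => sendMailAlert()))
```

The `alerted` flag is a design choice so that the mail is sent once per idle period rather than after every empty 1-minute batch.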


You can keep track of this by saving the timestamp of the last received record in an HDFS file. Then, while processing each micro-batch, if the RDD is empty and the difference between the current timestamp and the timestamp in HDFS is more than an hour, you can send a mail using your mailing service. If the micro-batch does contain records, update the timestamp in the HDFS file accordingly.

Your code will look something like the snippet below. You need to implement `getTimeStampFromHDFS()`, which returns the timestamp stored in your HDFS file, and `updateTimestampHDFS(currentTimestamp)`, which updates that timestamp whenever your micro-batch contains records.

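    val oneHourMs = 60 * 60 * 1000L

    dstream.foreachRDD { rdd =>
      if (rdd.isEmpty) {
        // No records in this batch: alert if the last record is at least an hour old
        if (System.currentTimeMillis - getTimeStampFromHDFS() >= oneHourMs) sendMailAlert()
      } else {
        // Records arrived: refresh the stored timestamp
        updateTimestampHDFS(System.currentTimeMillis)
      }
    }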
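
One thing to watch with this approach: once the hour has passed, every further empty batch triggers another mail. A minimal sketch of the bookkeeping that avoids this is shown below. `IdleTracker` and its parameters are hypothetical names, and it keeps the timestamp in driver memory instead of HDFS, so the state is lost if the driver restarts.

```scala
// Hypothetical helper: remembers when data was last seen and
// signals an alert at most once per idle period.
final class IdleTracker(maxIdleMs: Long, startMs: Long) {
  private var lastDataMs = startMs
  private var alerted = false

  /** Returns true when an alert should be sent for this batch. */
  def onBatch(isEmpty: Boolean, nowMs: Long): Boolean = {
    if (!isEmpty) {
      // Data arrived: refresh the timestamp and re-arm the alert
      lastDataMs = nowMs
      alerted = false
      false
    } else if (!alerted && nowMs - lastDataMs >= maxIdleMs) {
      // First empty batch past the idle limit: alert once
      alerted = true
      true
    } else {
      false
    }
  }
}

// Usage inside the streaming job (the function passed to foreachRDD runs on the driver):
// val tracker = new IdleTracker(60 * 60 * 1000L, System.currentTimeMillis)
// dstream.foreachRDD { rdd =>
//   if (tracker.onBatch(rdd.isEmpty, System.currentTimeMillis)) sendMailAlert()
// }
```

If the state must survive restarts, the same once-per-idle-period flag could be stored next to the timestamp in the HDFS file.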
