When ZSTD first came out, it also took a while to be added to the ANALYZE COMPRESSION command.

ZSTD can be used on any datatype although some won't benefit from it as much as others. You can naively apply it to everything and it works fine.

AZ64 can only be applied to these datatypes:

- SMALLINT

- INTEGER

- BIGINT

- DECIMAL

- DATE

- TIMESTAMP

- TIMESTAMPTZ
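As a rough illustration of the list above, here is a minimal Python sketch that checks whether a declared column type is AZ64-eligible. The names `AZ64_TYPES`, `supports_az64` and `pick_encoding` are made up for this example, and falling back to ZSTD for everything else is just an assumption based on ZSTD working on any datatype. Type aliases such as INT or NUMERIC are not handled.

```python
# AZ64-eligible types, copied from the list above.
# Aliases (INT, NUMERIC, ...) are deliberately not handled in this sketch.
AZ64_TYPES = {
    "SMALLINT", "INTEGER", "BIGINT", "DECIMAL",
    "DATE", "TIMESTAMP", "TIMESTAMPTZ",
}


def supports_az64(column_type: str) -> bool:
    """Return True if the declared type appears in the AZ64 list."""
    # Drop any precision/scale suffix, e.g. "DECIMAL(18,2)" -> "DECIMAL".
    base_type = column_type.split("(")[0].strip().upper()
    return base_type in AZ64_TYPES


def pick_encoding(column_type: str) -> str:
    """Assumed policy: AZ64 where allowed, otherwise ZSTD."""
    return "az64" if supports_az64(column_type) else "zstd"


if __name__ == "__main__":
    for col_type in ["BIGINT", "decimal(18,2)", "VARCHAR(255)", "TIMESTAMP"]:
        print(f"{col_type}: ENCODE {pick_encoding(col_type)}")
```

This only tells you where AZ64 is allowed, not where it is the better choice for a given column.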

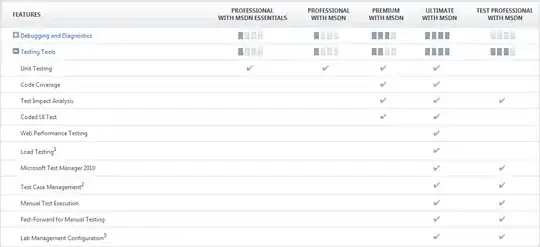

I ran an experiment to test the compression factor. I was surprised to discover it doesn't always make things smaller.

Steps

- Generated the create table DDL for the original table

- Changed the name of the table and the encoding for valid columns

- Created the table

- Inserted into the new table from the old table

- Ran VACUUM FULL <tablename> TO 99 PERCENT for both old and new table

- Ran ANALYZE <tablename> for both old and new table
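The copy, vacuum and analyze steps can be sketched as a small Python helper that builds the statements to run. This is only an illustration: the function name `experiment_statements` and the table names `orders` and `orders_az64` are hypothetical, and the CREATE TABLE DDL for the new table is assumed to have been run already.

```python
from typing import List


def experiment_statements(old_table: str, new_table: str) -> List[str]:
    """Build the copy, vacuum and analyze statements for the comparison."""
    # Copy all rows from the original table into the re-encoded one.
    statements = [f"INSERT INTO {new_table} SELECT * FROM {old_table};"]
    # Vacuum both tables so the size comparison is like for like.
    for table in (old_table, new_table):
        statements.append(f"VACUUM FULL {table} TO 99 PERCENT;")
    # Refresh statistics on both tables.
    for table in (old_table, new_table):
        statements.append(f"ANALYZE {table};")
    return statements


if __name__ == "__main__":
    # Hypothetical table names, for illustration only.
    for statement in experiment_statements("orders", "orders_az64"):
        print(statement)
```

Printing the statements rather than executing them keeps the sketch independent of any particular database driver.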

The query I used to check column sizes is borrowed from https://stackoverflow.com/a/33388886/1335793

Results

- The id column is a primary key, so it has very high cardinality; perhaps that helps?

- The sort_order column has values in the range 0-50 with more values closer to 0

- The created_at timestamp ranges over many years with more data in recent times

- The completed_step column is similar to sort_order, but its median is closer to 0

Edit: I haven't done any performance comparison, so this is only part of the story. Overall the size of the table is smaller, even if some fields weren't.