I have created a simple Chebyshev low-pass filter based on coefficients generated by this site: http://www-users.cs.york.ac.uk/~fisher/mkfilter/. I am using it to filter out frequencies above 4kHz in a 16kHz sample rate audio signal before downsampling to 8kHz. Here's my code (it's C#, but this question is not C#-specific, so feel free to answer in other languages):

```csharp
/// <summary>
/// Chebyshev, lowpass, -0.5dB ripple, order 4, 16kHz sample rate, 4kHz cutoff
/// </summary>
class ChebyshevLpf4Pole
{
    const int NZEROS = 4;
    const int NPOLES = 4;
    const float GAIN = 1.403178626e+01f;

    // Delay lines: xv holds the last NZEROS+1 (scaled) inputs, yv the last NPOLES+1 outputs
    private float[] xv = new float[NZEROS + 1];
    private float[] yv = new float[NPOLES + 1];

    public float Filter(float inValue)
    {
        // Shift the input history and append the new sample, scaled by 1/GAIN
        xv[0] = xv[1]; xv[1] = xv[2]; xv[2] = xv[3]; xv[3] = xv[4];
        xv[4] = inValue / GAIN;

        // Shift the output history
        yv[0] = yv[1]; yv[1] = yv[2]; yv[2] = yv[3]; yv[3] = yv[4];

        // Difference equation: binomial feed-forward taps (1, 4, 6, 4, 1)
        // plus the feedback coefficients generated by mkfilter
        yv[4] = (xv[0] + xv[4]) + 4 * (xv[1] + xv[3]) + 6 * xv[2]
              + (-0.1641503452f * yv[0]) + (0.4023376691f * yv[1])
              + (-0.9100943707f * yv[2]) + (0.5316388226f * yv[3]);

        return yv[4];
    }
}
```
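As a sanity check on the mkfilter coefficients, the magnitude response can be evaluated directly from the same numbers. This is a sketch, not part of the original code: the class name `ChebyshevResponse` is hypothetical, and it assumes the recurrence above, with the feedback terms moved to the left-hand side to form the denominator A(z) = 1 - 0.5316 z^-1 + 0.9101 z^-2 - 0.4023 z^-3 + 0.1642 z^-4.

```csharp
using System;
using System.Numerics;

// Hypothetical helper: evaluates |H(e^jw)| of the 4-pole filter from its
// difference-equation coefficients (copied from ChebyshevLpf4Pole).
public static class ChebyshevResponse
{
    const double Gain = 1.403178626e+01;
    const double SampleRate = 16000.0;

    // Feed-forward taps applied to the input (before the 1/Gain scaling)
    static readonly double[] B = { 1, 4, 6, 4, 1 };

    // Denominator coefficients: feedback terms of the recurrence, sign-flipped
    static readonly double[] A = { 1, -0.5316388226, 0.9100943707, -0.4023376691, 0.1641503452 };

    public static double Magnitude(double frequencyHz)
    {
        double w = 2 * Math.PI * frequencyHz / SampleRate;
        Complex num = Complex.Zero;
        Complex den = Complex.Zero;
        for (int k = 0; k < B.Length; k++)
        {
            Complex zk = Complex.Exp(new Complex(0, -w * k)); // z^-k on the unit circle
            num += B[k] * zk;
            den += A[k] * zk;
        }
        return (num / den).Magnitude / Gain;
    }

    // Convenience wrapper; do not call at exactly 8kHz, where the response is zero
    public static double MagnitudeDb(double frequencyHz) =>
        20 * Math.Log10(Magnitude(frequencyHz));
}
```

Working through the arithmetic by hand, DC and 4kHz both come out at roughly unity gain and 8kHz at zero. That is consistent with a Chebyshev design, where the "cutoff" is the edge of the ripple band rather than a point of strong attenuation.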

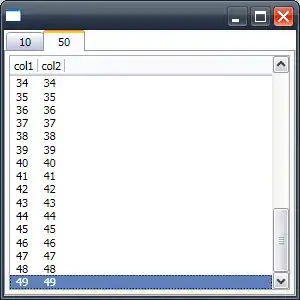

To test it I created a sine wave "chirp" from 20Hz to 8kHz using Audacity. The test signal looks like this:

After filtering it I get:

The waveform shows that the filter is indeed reducing the amplitude of frequencies above 4kHz, but a load of noise has been added to my signal. This seems to happen whichever filter type I try to implement (e.g. Butterworth, Raised Cosine, etc.).
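One way to separate the filter's own behaviour from problems elsewhere in the processing chain is to drive it with a single clean sine and check that the steady-state output amplitude matches the expected response. The sketch below assumes a unit-amplitude input at 16kHz; the class `SineProbe` and its parameters are hypothetical, and it takes the filter as a delegate so any of the filter types can be tested.

```csharp
using System;

// Hypothetical diagnostic helper: drives a filter with a pure unit-amplitude sine
// and reports the peak output once the start-up transient has died away.
public static class SineProbe
{
    public static double SteadyStateGain(Func<float, float> filter, double frequencyHz,
                                         double sampleRate = 16000.0,
                                         int totalSamples = 16000,
                                         int settleSamples = 4000)
    {
        double peak = 0.0;
        for (int n = 0; n < totalSamples; n++)
        {
            float x = (float)Math.Sin(2 * Math.PI * frequencyHz * n / sampleRate);
            float y = filter(x);
            if (float.IsNaN(y) || float.IsInfinity(y))
                throw new InvalidOperationException("Filter output is not finite");
            if (n >= settleSamples)
                peak = Math.Max(peak, Math.Abs(y)); // only track the steady state
        }
        // Approximates |H(f)|; the sampled peak can slightly underestimate the true
        // crest when the samples never land exactly on it
        return peak;
    }
}
```

For example, `SineProbe.SteadyStateGain(new ChebyshevLpf4Pole().Filter, 1000)` would report the filter's gain at 1kHz. A clean result here, while the processed file still sounds noisy, would point away from the filter coefficients themselves.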

Am I doing something wrong, or do these filters simply introduce artefacts at other frequencies? If I downsample using the naive approach of averaging every pair of samples, I don't get this noise at all (but obviously the aliasing is much worse).
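For comparison, the pair-averaging approach is itself a crude 2-tap FIR low-pass, y[n] = (x[2n] + x[2n+1]) / 2, whose magnitude response is |cos(ω/2)|. At 4kHz with a 16kHz input (ω = π/2) that is about 0.707 (-3 dB), and it only reaches zero at 8kHz, which is why more aliasing gets through. The sketch below contrasts the two approaches; the class `Downsample` and its method names are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helpers contrasting the two 16kHz -> 8kHz approaches.
public static class Downsample
{
    // Naive approach: average each pair of samples and keep one output per pair.
    // A trailing odd sample is dropped.
    public static float[] AveragePairs(float[] input)
    {
        var output = new float[input.Length / 2];
        for (int i = 0; i < output.Length; i++)
            output[i] = 0.5f * (input[2 * i] + input[2 * i + 1]);
        return output;
    }

    // Filter-then-decimate: every input sample goes through the (stateful)
    // low-pass filter, but only every second output sample is kept.
    public static float[] FilterThenDecimate(float[] input, Func<float, float> lowPass)
    {
        var output = new List<float>(input.Length / 2 + 1);
        for (int i = 0; i < input.Length; i++)
        {
            float y = lowPass(input[i]); // the filter must see discarded samples too
            if (i % 2 == 0)
                output.Add(y);
        }
        return output.ToArray();
    }
}
```

Usage with the filter from the question would be `Downsample.FilterThenDecimate(samples, new ChebyshevLpf4Pole().Filter)`. Note that a single filter instance should be kept for the whole stream (and one per channel for multi-channel audio), since its delay lines carry state between calls.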