When I read an MDF (.mf4) file with asammdf (Python 3.7), the timestamps start counting up from close to 0 again, like an overflow, as soon as the sample count crosses a certain point. For three files this happens at exactly 29045 lines, and for one file at 27234 lines for some reason. Because of this, methods like `resample` or `to_dataframe` interpolate over a time axis that jumps backwards, which completely messes up the interpolation, and I get botched data.
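A first step is to find exactly where the reset happens, for example to check whether it is always at sample 29045. You can do this by looking for backward steps in the master timestamps. Here is a minimal sketch. It assumes the timestamps are available as a NumPy array, as `Signal.timestamps` from `mdf.get(...)` is. `find_time_resets` is a hypothetical helper name, not part of asammdf.

```python
import numpy as np


def find_time_resets(timestamps):
    """Return the indices at which the time axis jumps backwards.

    An index i in the result means timestamps[i] < timestamps[i - 1],
    i.e. sample i is the first sample after a reset.
    """
    ts = np.asarray(timestamps, dtype=float)
    # np.diff gives ts[1:] - ts[:-1]; a negative step marks a reset,
    # and +1 converts the diff index into the index of the later sample
    return np.flatnonzero(np.diff(ts) < 0) + 1


# Synthetic example: 5 samples at 10 ms steps, then the clock restarts near 0
ts = np.array([0.00, 0.01, 0.02, 0.03, 0.04, 0.001, 0.011, 0.021])
print(find_time_resets(ts))  # → [5]
```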

I haven't found anything like this in the documentation, and there are hardly any resources besides the docs. I thought this might be related to chunk size or memory allocation, but I can't figure out what to do differently or why exactly this happens.
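The reset might be a pure clock restart, which is the "overflow" reading. If so, one possible stopgap until the root cause is known is to make the time axis continuous again before resampling. This sketch works only under that assumption. It estimates the sampling interval as the median forward step, and it cannot tell a real gap from a restart. `unwrap_timestamps` is a hypothetical helper, not an asammdf function.

```python
import numpy as np


def unwrap_timestamps(timestamps):
    """Make a reset time axis continuous again by shifting each segment.

    ASSUMPTION: every reset is a pure offset, i.e. sampling continued
    without a gap and only the clock restarted. If samples were lost or
    duplicated at the reset, this will hide that problem.
    """
    ts = np.asarray(timestamps, dtype=float).copy()
    steps = np.diff(ts)
    resets = np.flatnonzero(steps < 0) + 1
    if resets.size == 0:
        return ts
    # Typical sampling interval, estimated from the forward steps only
    dt = np.median(steps[steps > 0])
    for i in resets:
        # Shift this segment (and everything after it) so it starts one
        # sampling interval after the previous sample. Processing resets in
        # ascending order means ts[i - 1] has already been corrected.
        ts[i:] += ts[i - 1] + dt - ts[i]
    return ts


ts = [0.00, 0.01, 0.02, 0.03, 0.04, 0.001, 0.011, 0.021]
print(unwrap_timestamps(ts))
```

With asammdf, you could then build a new `Signal` from the original samples and the unwrapped timestamps. Only do this after confirming that the reset really is just a clock restart.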

Right now I read it via the standard method:

```python
mdf = MDF(file)
```

I wrote a small script that reads a file and plots the converted (interpolated) data against the original data points to show what I mean:

```python
import tkinter as tk
from tkinter import filedialog as fd

from asammdf import MDF
from matplotlib import pyplot as plt

typeStr = '*.mf4'

root = tk.Tk()
root.wm_attributes('-topmost', 1)
root.withdraw()

files = fd.askopenfilenames(parent=root, filetypes=[("Measurement MF4 file", typeStr)])

for file in files:
    mdf = MDF(file)

    # conversion to pandas
    data = mdf.to_dataframe()
    data['Time [s]'] = list(data.index)
    columns = data.columns.tolist()
    columns.remove('Time [s]')
    columns.insert(0, 'Time [s]')
    data = data[columns]
    plt.plot(data['Time [s]'], data[columns[1]], label="converted")

    # original data
    chData = mdf.get(columns[1])
    plt.plot(chData.timestamps, chData.samples, label="original")

    plt.legend()
    plt.show()
```
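You can also find the affected channels without plotting each one. The idea is to run a backward-step check over every column the script already collects. This minimal sketch relies only on `mdf.get(name).timestamps`, which the script above already uses. `first_reset_per_channel` is a hypothetical helper. `_FakeMDF` is a stand-in that exists only so the snippet runs without a measurement file. The channel names `speed` and `rpm` are made up.

```python
from types import SimpleNamespace

import numpy as np


def first_reset_per_channel(mdf, channel_names):
    """Map each channel name to the index of its first backward time step.

    `mdf` is expected to behave like asammdf.MDF: mdf.get(name) returns an
    object with a `.timestamps` array. Channels without a reset map to None.
    """
    result = {}
    for name in channel_names:
        ts = np.asarray(mdf.get(name).timestamps, dtype=float)
        resets = np.flatnonzero(np.diff(ts) < 0) + 1
        result[name] = int(resets[0]) if resets.size else None
    return result


class _FakeMDF:
    """Stand-in for asammdf.MDF, only so this sketch runs without a file."""

    def __init__(self, channels):
        self._channels = channels

    def get(self, name):
        return SimpleNamespace(timestamps=self._channels[name])


fake = _FakeMDF({
    "speed": [0.0, 0.1, 0.2, 0.3],
    "rpm": [0.0, 0.1, 0.2, 0.001, 0.101],
})
print(first_reset_per_channel(fake, ["speed", "rpm"]))  # → {'speed': None, 'rpm': 3}
```

With a real file, you would call it inside the loop as `first_reset_per_channel(mdf, columns[1:])`. Comparing the reported indices across channels may help show whether the reset is tied to individual channel groups or affects the whole file.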

an example file can be accessed here:

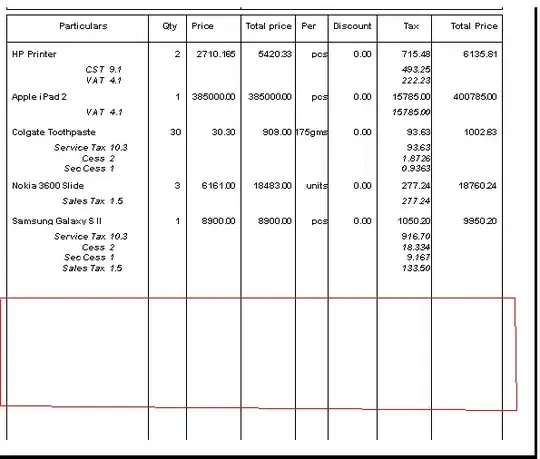

an example of a plot: