versions: Python 3.6.9, Tensorflow 2.0.0, CUDA 10.0, CUDNN 7.6.1, Nvidia driver version 410.78.

I'm trying to port a LSTM-based Seq2Seq tf.keras model to tensorflow 2.0

Right now I'm facing the following error when I try to call predict on the decoder model (see below for the actual inference setup code)

It is as if it were expecting a single word as argument, but I need it to decode a full sentence (my sentences are right-padded sequences of word indices, of length 24)

P.S.: This code used to work exactly as it is on TF 1.15

InvalidArgumentError: [_Derived_] Inputs to operation while/body/_1/Select_2 of type Select must have the same size and shape.

Input 0: [1,100] != input 1: [24,100]

[[{{node while/body/_1/Select_2}}]]

[[lstm_1_3/StatefulPartitionedCall]] [Op:__inference_keras_scratch_graph_45160]

Function call stack:

keras_scratch_graph -> keras_scratch_graph -> keras_scratch_graph

FULL MODEL

ENCODER inference model

DECODER inference model

Inference Setup (line where error actually happens)

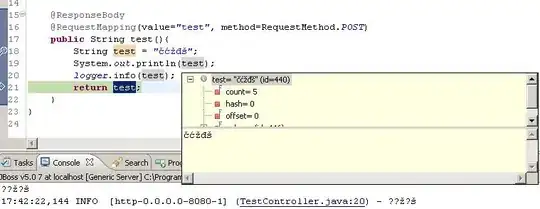

Important information: sequences are right-padded to 24 elements and 100 is the number of dimensions for each word embedding. This is why the error message (and the prints) show that the input shapes are (24,100).

note that this code runs on a CPU. running it on a GPU leads to another error detailed here

# original_keyword is a sample text string

with tf.device("/device:CPU:0"):

# this method turns the raw string into a right-padded sequence

query_sequence = keyword_to_padded_sequence_single(original_keyword)

# no problems here

initial_state = encoder_model.predict(query_sequence)

print(initial_state[0].shape) # prints (24, 100)

print(initial_state[1].shape) # (24, 100)

empty_target_sequence = np.zeros((1,1))

empty_target_sequence[0,0] = word_dict_titles["sos"]

# ERROR HAPPENS HERE:

# InvalidArgumentError: [_Derived_] Inputs to operation while/body/_1/Select_2 of type Select

# must have the same size and shape. Input 0: [1,100] != input 1: [24,100]

decoder_outputs, h, c = decoder_model.predict([empty_target_sequence] + initial_state)

Things I have tried

disabling eager mode (this just made training much slower and the error during inference stayed the same)

reshaping the input prior to feeding it to the predict function

manually computing (

embedding_layer.compute_mask(inputs)) and setting masks when calling the LSTM layers