One cannot say with certainty what the components of a pixel represent. It depends on the color space [wiki] you select.

To store a color, you need a way to represent it, and there are several. A common one is the RGB color model [wiki], which uses three channels: one for Red, one for Green and one for Blue. This model is based on the assumption that the human eye has three kinds of light receptors, one for each of these colors. It is common for monitors as well, since a computer monitor has three sorts of subpixels, each rendering one of the color channels. Sometimes an extra Alpha channel is added, making it an ARGB color scheme. The alpha channel then describes the level of transparency of that pixel. This is useful if, for example, you want to lay one image over another and some parts of the top image should let the image underneath show through.
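
To make the role of the alpha channel concrete, here is a minimal sketch of laying one ARGB pixel over an opaque RGB pixel. The function name `blend_over`, the 0..255 value range and the (alpha, red, green, blue) ordering are assumptions for illustration; real image libraries may order and scale the channels differently.

```python
def blend_over(foreground, background):
    """Composite one ARGB pixel over an opaque RGB pixel.

    foreground: (alpha, red, green, blue), each in 0..255 (assumed layout)
    background: (red, green, blue), each in 0..255
    """
    alpha, *fg_rgb = foreground
    weight = alpha / 255  # 0.0 = fully transparent, 1.0 = fully opaque
    return tuple(
        round(weight * fg + (1 - weight) * bg)
        for fg, bg in zip(fg_rgb, background)
    )


white = (255, 255, 255)
opaque_red = (255, 255, 0, 0)
clear_red = (0, 255, 0, 0)
half_red = (128, 255, 0, 0)

print(blend_over(opaque_red, white))  # (255, 0, 0): the red pixel hides the background
print(blend_over(clear_red, white))   # (255, 255, 255): the background shows through
print(blend_over(half_red, white))    # (255, 127, 127): a pink mix of both
```

Note that the same four numbers would mean something else entirely if the layout were RGBA instead of ARGB, which is exactly the ambiguity described above.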

Another color system is HSL, where the color space is seen as a cylinder, and the three attributes Hue, Saturation, and Lightness describe the angle, radius and height in the cylinder respectively. This is in contrast to an RGB color system, which can be seen as a cube.
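
As a rough illustration of the cylinder view, Python's standard `colorsys` module can convert RGB values to HSL. The helper name `rgb_to_hsl_degrees` is made up for this sketch, and it assumes 8-bit RGB input; note that `colorsys` itself returns the components in the order hue, lightness, saturation.

```python
import colorsys


def rgb_to_hsl_degrees(red, green, blue):
    """Convert 8-bit RGB to (hue in degrees, saturation, lightness)."""
    # colorsys works on floats in 0..1 and returns the order H, L, S.
    hue, lightness, saturation = colorsys.rgb_to_hls(
        red / 255, green / 255, blue / 255
    )
    return hue * 360, saturation, lightness


for name, rgb in [("red", (255, 0, 0)), ("blue", (0, 0, 255)), ("gray", (128, 128, 128))]:
    hue, saturation, lightness = rgb_to_hsl_degrees(*rgb)
    print(f"{name}: angle={hue:.0f}, radius={saturation:.2f}, height={lightness:.2f}")
```

Red and blue sit at different angles (0 and 240 degrees) on the outer edge of the cylinder, while gray sits on the central axis with a radius of zero.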

For printing purposes, the CMYK color model is often used, with a channel each for Cyan, Magenta, Yellow and Black. These are often the ink cartridges in a basic printer.
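
For a feel of how the four ink channels relate to RGB, here is a sketch of the simple, profile-free conversion formula. The function name `rgb_to_cmyk` is hypothetical, and this is only an approximation: actual printing workflows typically rely on device color profiles rather than this arithmetic.

```python
def rgb_to_cmyk(red, green, blue):
    """Naive 8-bit RGB -> CMYK conversion, each result in 0.0..1.0."""
    r, g, b = red / 255, green / 255, blue / 255
    key = 1 - max(r, g, b)  # amount of black ink
    if key == 1:
        # Pure black: no colored ink needed, and this avoids dividing by zero.
        return 0.0, 0.0, 0.0, 1.0
    cyan = (1 - r - key) / (1 - key)
    magenta = (1 - g - key) / (1 - key)
    yellow = (1 - b - key) / (1 - key)
    return cyan, magenta, yellow, key


print(rgb_to_cmyk(255, 0, 0))      # red is printed with magenta and yellow ink
print(rgb_to_cmyk(0, 0, 0))        # black uses only the black cartridge
print(rgb_to_cmyk(255, 255, 255))  # white uses no ink at all
```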

In short, you cannot tell what the color scheme is from the data alone. As far as numpy is concerned, this is just a 4×4×3 or 4×4×4 array. It is only by interpreting the numbers, for example according to a color scheme, that we can make sense of it.
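
A small sketch of that point: the array below holds the same three numbers in every pixel, and the color you get depends entirely on which scheme you read them with. The pixel values are chosen arbitrarily for illustration, and the standard `colorsys` module stands in for a real image pipeline.

```python
import colorsys

import numpy as np

# A 4x4 image with 3 components per pixel; numpy only knows shape and dtype.
pixels = np.zeros((4, 4, 3), dtype=np.uint8)
pixels[:, :] = (0, 255, 128)
print(pixels.shape, pixels.dtype)

# Scale the components of one pixel to the 0..1 range.
a, b, c = (int(value) / 255 for value in pixels[0, 0])

# Interpretation 1: the components are red, green, blue.
as_rgb = (a, b, c)

# Interpretation 2: the same components are hue, lightness, saturation,
# converted to RGB so the two readings can be compared.
as_hls_in_rgb = colorsys.hls_to_rgb(a, b, c)

print(as_rgb)         # a bright green with some blue
print(as_hls_in_rgb)  # full lightness, so plain white
```

Nothing in the array itself says which of the two readings is the intended one.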

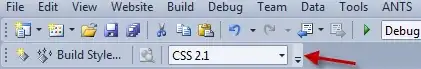

OpenCV has a function, `cvtColor`, to convert an image from one color scheme into another. As you can see, it supports a large range of conversions. It has extensive documentation on the color schemes as well.