I have a business scenario: we need to pull all the tables from one database, say AdventureWorks, and write each table to a separate CSV file in Azure Data Lake. For example, if the AdventureWorks database has 20 tables, I need to pull all of them in parallel, with one CSV per table, so 20 tables produce 20 CSV files in the data lake. How can I do this with Azure Data Factory? Please don't suggest the ForEach activity: it processes the files sequentially and is time-consuming.


- The Sequential option is a boolean. When you set it to false, the jobs run in parallel. – Thiago Custodio Nov 15 '19 at 21:56
- Hello Thiago, thanks for your reply, but I didn't follow. Do you have a screenshot or a link that shows how to do this? – Anuj gupta Nov 17 '19 at 06:43
- Here it is: https://learn.microsoft.com/en-us/azure/data-factory/copy-activity-performance#parallel-copy – Thiago Custodio Nov 17 '19 at 16:00
- If my answer is helpful to you, you can accept it as the answer (click the check mark beside the answer to toggle it from greyed out to filled in). This can benefit other community members. Thank you. – Leon Yue Nov 19 '19 at 01:44

1 Answer


In Data Factory, there are two ways to create 20 CSV files from 20 tables in one pipeline: the ForEach activity and Data Flow.

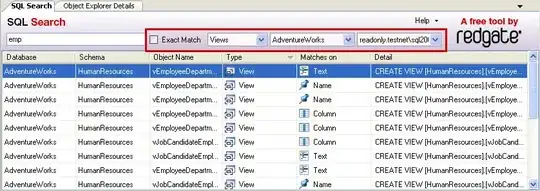

In Data Flow, add 20 sources and 20 sinks, for example:

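Whichever way you choose, copying the data takes some time. Note that the ForEach activity only runs its iterations one after another when its Sequential option is enabled; with that option off, the iterations run in parallel.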

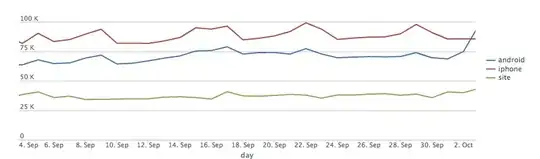
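For the ForEach route, the parallelism mentioned in the comments is controlled by the activity's `isSequential` and `batchCount` properties. The Python sketch below only builds the pipeline JSON as a dictionary so the shape is easy to see; it is a minimal sketch, not a tested pipeline. The dataset names (`SqlTableList`, `SqlTable`, `LakeCsv`), their parameters, and the activity names are hypothetical, and it assumes an Azure SQL source and a delimited-text sink whose store and format settings come from the datasets.

```python
import json


def build_parallel_copy_pipeline(batch_count=20):
    """Return an ADF pipeline definition (as a dict) that copies each table to its own CSV."""
    # Lookup activity: list every user table so the ForEach has something to iterate over.
    lookup = {
        "name": "LookupTables",  # hypothetical activity name
        "type": "Lookup",
        "typeProperties": {
            "source": {
                "type": "AzureSqlSource",  # assumption: the database is Azure SQL
                "sqlReaderQuery": (
                    "SELECT TABLE_SCHEMA, TABLE_NAME FROM INFORMATION_SCHEMA.TABLES "
                    "WHERE TABLE_TYPE = 'BASE TABLE'"
                ),
            },
            "dataset": {"referenceName": "SqlTableList", "type": "DatasetReference"},
            "firstRowOnly": False,  # return all rows, not just the first
        },
    }
    # Copy activity: one table in, one CSV out; datasets are parameterized (hypothetical names).
    copy_one_table = {
        "name": "CopyTableToCsv",
        "type": "Copy",
        "inputs": [{
            "referenceName": "SqlTable",
            "type": "DatasetReference",
            "parameters": {"schema": "@item().TABLE_SCHEMA", "table": "@item().TABLE_NAME"},
        }],
        "outputs": [{
            "referenceName": "LakeCsv",
            "type": "DatasetReference",
            "parameters": {"fileName": "@concat(item().TABLE_NAME, '.csv')"},
        }],
        "typeProperties": {
            "source": {"type": "AzureSqlSource"},
            "sink": {"type": "DelimitedTextSink"},
        },
    }
    # ForEach activity: isSequential=False lets the inner copies run concurrently.
    for_each = {
        "name": "ForEachTable",
        "type": "ForEach",
        "dependsOn": [{"activity": "LookupTables", "dependencyConditions": ["Succeeded"]}],
        "typeProperties": {
            "isSequential": False,
            "batchCount": batch_count,  # upper bound on concurrent iterations
            "items": {"value": "@activity('LookupTables').output.value", "type": "Expression"},
            "activities": [copy_one_table],
        },
    }
    return {"name": "CopyAllTables", "properties": {"activities": [lookup, for_each]}}


pipeline = build_parallel_copy_pipeline()
print(json.dumps(pipeline, indent=2))
```

With `isSequential` set to false, Data Factory runs up to `batchCount` iterations at once (the documented maximum is 50), so 20 tables can be copied concurrently.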

What you should do is think about how to improve copy performance, as Thiago Custodio said in the comments; that can help you save time.

For example, allocate more DTUs to your database and use more DIUs (Data Integration Units) for the copy activity.
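On the Data Factory side, the DIU count and the degree of parallel copy are set on the copy activity through its `dataIntegrationUnits` and `parallelCopies` properties. The Python sketch below shows where they sit in the activity JSON; the helper name `tune_copy_activity`, the activity name, and the values 8 and 4 are illustrative assumptions, not recommendations.

```python
import copy
import json


def tune_copy_activity(copy_activity, diu=8, parallel_copies=4):
    """Return a copy of a Copy activity definition with performance settings applied."""
    tuned = copy.deepcopy(copy_activity)  # leave the caller's definition untouched
    props = tuned.setdefault("typeProperties", {})
    props["dataIntegrationUnits"] = diu        # compute allocated to this copy run
    props["parallelCopies"] = parallel_copies  # parallel reads/writes within the copy
    return tuned


# Hypothetical base activity; source and sink types assume Azure SQL to delimited text.
base_activity = {
    "name": "CopyTableToCsv",
    "type": "Copy",
    "typeProperties": {
        "source": {"type": "AzureSqlSource"},
        "sink": {"type": "DelimitedTextSink"},
    },
}

tuned_activity = tune_copy_activity(base_activity)
print(json.dumps(tuned_activity, indent=2))
```

Both values are worth measuring rather than guessing: a higher setting only helps if the source database and the data lake can keep up.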

Please refer to these Data Factory documents:

They all offer guidance on improving performance.

Hope this helps.

Leon Yue
