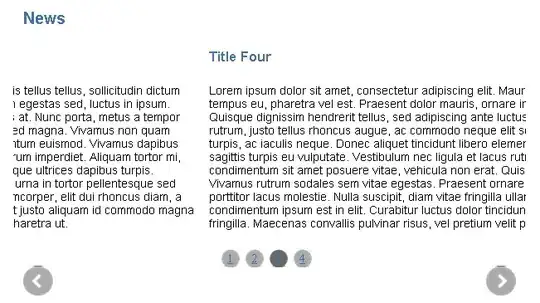

I'm using vispy to render 3D parametric surfaces with OpenGL. It seems to be working well for the most part but it looks weird

in some parts around the edges of the geometry, almost as if the normals were pointing inwards (example).

I suspect the problem lies either with my fragment shader or with the surface normals, especially in areas where the normals are nearly perpendicular to the camera direction. Here's how the vertices are created:

```python
# --- Python imports ---
import numpy as np

# --- Internal imports ---
from glmesh import MeshApp3D

# -----------------------------------------------------------
# Torus parameters
radius1 = 1.0
radius2 = 0.2

# Define torus
torus = ( lambda u,v : np.cos(u)*(radius1+radius2*np.cos(v)),
          lambda u,v : np.sin(u)*(radius1+radius2*np.cos(v)),
          lambda u,v : radius2*np.sin(v) )

# Generate grid of points on the torus
nSamples = 100
U = np.linspace(0.0,2*np.pi,nSamples)
V = np.linspace(0.0,-2*np.pi,nSamples)
# grid[component, j, i] = torus[component](U[i], V[j])
grid = np.array(
    [
        [ [torus[componentIndex](u,v) for u in U] for v in V ] for componentIndex in (0,1,2)
    ],
    dtype=np.float32 )

# -----------------------------------------------------------
# Rearrange grid to a list of points:
# vertex index = j*nSamples + i, with j along v and i along u
vertices = np.reshape(
    np.transpose( grid,
                  axes=(1,2,0)),
    ( nSamples*nSamples ,3))

# Generate triangle indices in a positive permutation
# For example (1-based labels; the code below uses 0-based indices):
# 7---8---9
# | / | / |
# 4---5---6
# | / | / |
# 1---2---3
#
# (1,5,4) (1,2,5) (2,6,5) (2,3,6) (4,8,7) (4,5,8) (5,9,8) (5,6,9)
faces = np.zeros( ( 2*(nSamples-1)*(nSamples-1), 3 ), dtype=np.uint32 )
k = 0
for j in range(nSamples-1):
    for i in range(nSamples-1):
        jni = j*nSamples+i          # lower-left corner of the current cell
        faces[k] = [ jni,
                     jni+nSamples+1,
                     jni+nSamples ]
        faces[k+1] = [ jni,
                       jni+1,
                       jni+1+nSamples ]
        k+=2

# -----------------------------------------------------------
meshApp = MeshApp3D( {'vertices' : vertices, 'faces' : faces},
                     colors=(1.0,1.0,1.0) )
meshApp.run()
```
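As an aside, the nested list comprehension that builds `grid` can be replaced by evaluating the parametric functions on a 2D array of parameters in one NumPy pass. The following is a minimal sketch, assuming the same torus and sampling as above; `torus_vertices` and its `v_sign` argument are hypothetical names. It should produce the same vertex ordering (index `j*nSamples + i`, with `j` along `v` and `i` along `u`).

```python
import numpy as np

def torus_vertices(radius1=1.0, radius2=0.2, n_samples=100, v_sign=-1.0):
    """Sample the torus on an n_samples x n_samples (v, u) grid.

    Row-major ordering matches the loop-based code: vertex index = j*n + i,
    where j indexes v and i indexes u. v_sign=-1.0 reproduces V = 0 .. -2*pi.
    """
    u = np.linspace(0.0, 2 * np.pi, n_samples)
    v = np.linspace(0.0, v_sign * 2 * np.pi, n_samples)
    # Default "xy" indexing gives arrays of shape (len(v), len(u)):
    # rows follow v, columns follow u
    uu, vv = np.meshgrid(u, v)
    ring = radius1 + radius2 * np.cos(vv)
    xyz = np.stack((np.cos(uu) * ring, np.sin(uu) * ring, radius2 * np.sin(vv)), axis=-1)
    return xyz.reshape(-1, 3).astype(np.float32)
```

For example, `vertices = torus_vertices(radius1, radius2, nSamples)` could stand in for the `grid`/`np.transpose`/`np.reshape` steps.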

The fragment shader:

```glsl
varying vec3 position;
varying vec3 normal;
varying vec4 color;

void main() {
    // Diffuse
    vec3 lightDir = normalize( position - vec3($lightPos) );
    float diffuse = dot( normal, lightDir );
    //diffuse = min( max(diffuse,0.0), 1.0 );

    // Specular
    vec3 halfWayVector = normalize( ( lightDir+vec3($cameraDir) )/2.0 );
    float specular = min( max( dot(halfWayVector,normal) ,0.0), 1.0 );
    // Three rounds of x^4, i.e. specular^64
    specular = specular * specular * specular * specular;
    specular = specular * specular * specular * specular;
    specular = specular * specular * specular * specular;

    // Additive
    vec3 fragColor = vec3($lightColor) * color.xyz;
    fragColor = fragColor * ( $diffuseMaterialConstant * diffuse +
                              $specularMaterialConstant * specular)
                + $ambientMaterialConstant * vec3($ambientLight) * color.xyz;

    gl_FragColor = vec4(fragColor,1.0);
}
```
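To inspect individual shading values outside of OpenGL, the fragment shader's arithmetic can be mirrored in NumPy for a single fragment. This is a sketch that reproduces the formulas exactly as written above, including the unclamped diffuse term and `lightDir = position - lightPos`. The function name `shade_fragment`, its arguments, and the default material constants are hypothetical stand-ins for the `$`-templated values and the varyings.

```python
import numpy as np

def _normalize(x):
    x = np.asarray(x, dtype=float)
    return x / np.linalg.norm(x)

def shade_fragment(position, normal, color, light_pos, camera_dir,
                   light_color=(1.0, 1.0, 1.0), ambient_light=(1.0, 1.0, 1.0),
                   k_diffuse=1.0, k_specular=1.0, k_ambient=0.1):
    """Mirror of the GLSL fragment shader above for one fragment (returns RGB).

    The k_* defaults are placeholders, not values from the original program.
    """
    position = np.asarray(position, dtype=float)
    normal = np.asarray(normal, dtype=float)   # used as-is, like the varying in the shader
    color = np.asarray(color, dtype=float)

    # Diffuse: same convention as the shader (vector from the light towards the fragment)
    light_dir = _normalize(position - np.asarray(light_pos, dtype=float))
    diffuse = np.dot(normal, light_dir)        # not clamped, as in the shader

    # Specular: half-way vector, dot product clamped to [0, 1], then raised to the 64th power
    half_way = _normalize((light_dir + np.asarray(camera_dir, dtype=float)) / 2.0)
    specular = np.clip(np.dot(half_way, normal), 0.0, 1.0) ** 64

    # Additive combination, identical to the shader's last two statements
    frag = np.asarray(light_color, dtype=float) * color[:3]
    frag = frag * (k_diffuse * diffuse + k_specular * specular) \
        + k_ambient * np.asarray(ambient_light, dtype=float) * color[:3]
    return frag
```

Calling it with a few hand-picked normals, light positions and camera directions shows directly what sign each term takes under the shader's conventions.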

The rest of the code is split across modules, but in a nutshell, here's what happens:

- a MeshData is constructed from the vertices and faces
- the normals are generated internally by MeshData, based on the vertex positions and the winding order of each triangle
- a canvas and an OpenGL window are created and initialized
- the mesh is drawn as triangle strips
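For reference, per-vertex normals derived from winding order are commonly computed by taking each triangle's cross product `(v1 - v0) x (v2 - v0)` (which points towards the side from which the triangle appears counter-clockwise), summing it into the triangle's three vertices, and normalizing. The following is a minimal NumPy sketch of that general technique, not vispy's actual `MeshData` code, whose details may differ; `vertex_normals` is a hypothetical name.

```python
import numpy as np

def vertex_normals(vertices, faces):
    """Area-weighted per-vertex normals from triangle winding order."""
    vertices = np.asarray(vertices, dtype=np.float64)
    faces = np.asarray(faces, dtype=np.int64)
    tri = vertices[faces]                      # (n_faces, 3 corners, 3 coords)
    # Unnormalized face normals; their length is twice the triangle area
    face_n = np.cross(tri[:, 1] - tri[:, 0], tri[:, 2] - tri[:, 0])
    normals = np.zeros_like(vertices)
    for corner in range(3):
        # np.add.at accumulates correctly when a vertex index repeats across faces
        np.add.at(normals, faces[:, corner], face_n)
    lengths = np.linalg.norm(normals, axis=1, keepdims=True)
    lengths[lengths == 0] = 1.0                # leave unused vertices as zero vectors
    return normals / lengths
```

Because the grid in the question samples both u = 0 and u = 2π (and both ends of the v range), the vertices on those seams are duplicated; with this kind of accumulation each copy only receives contributions from the faces on its own side of the seam.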

One possible culprit is the way the mesh triangles are defined. For example (1-based labels):

```
7---8---9
| / | / |
4---5---6
| / | / |
1---2---3

v
^
|
+---> u

(1,5,4) (1,2,5) (2,6,5) (2,3,6) (4,8,7) (4,5,8) (5,9,8) (5,6,9)
```
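On the triangle-generation side, the double loop can be expressed with array operations that produce the same index triples in the same order. A minimal sketch, assuming the row-major layout used in the question (vertex index `j*nSamples + i`, `j` along `v`, `i` along `u`); `grid_faces` is a hypothetical helper name.

```python
import numpy as np

def grid_faces(n_samples):
    """Two triangles per grid cell, same order and winding as the double loop."""
    n = n_samples
    # j varies slowest, i fastest, matching `for j ...: for i ...:`
    j, i = np.meshgrid(np.arange(n - 1), np.arange(n - 1), indexing="ij")
    jni = (j * n + i).ravel()                               # lower-left corner of each cell
    first = np.stack((jni, jni + n + 1, jni + n), axis=1)   # e.g. (1,5,4) in the diagram
    second = np.stack((jni, jni + 1, jni + 1 + n), axis=1)  # e.g. (1,2,5) in the diagram
    # Interleave so faces[2k] and faces[2k+1] belong to the same cell
    faces = np.stack((first, second), axis=1).reshape(-1, 3)
    return faces.astype(np.uint32)
```

For the 3x3 diagram, `grid_faces(3)` gives the listed triangles shifted to 0-based indices, starting with `[0, 4, 3]` and `[0, 1, 4]`.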

This scheme was originally written to triangulate graphs of 2D functions z = f(x, y), where it worked well, but I ran into problems when applying it to parametric surfaces r(u, v) = [x(u, v), y(u, v), z(u, v)]. For example, this is why the parameter v runs from 0 to -2π instead of +2π (otherwise the normals would point inwards).
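One way to see which side the winding-based normals end up on, independently of the shader, is to compare each triangle's cross product with the torus's outward direction, i.e. the vector from the nearest point of the core circle (radius `radius1` in the xy-plane) to the triangle's centroid. A minimal sketch that rebuilds the question's grid and faces inline; `fraction_outward` and `v_sign` are hypothetical names.

```python
import numpy as np

def fraction_outward(v_sign, radius1=1.0, radius2=0.2, n=40):
    """Fraction of triangles whose winding normal points away from the tube's core circle."""
    u = np.linspace(0.0, 2 * np.pi, n)
    v = np.linspace(0.0, v_sign * 2 * np.pi, n)
    uu, vv = np.meshgrid(u, v)                 # rows follow v, columns follow u
    ring = radius1 + radius2 * np.cos(vv)
    verts = np.stack((np.cos(uu) * ring, np.sin(uu) * ring, radius2 * np.sin(vv)),
                     axis=-1).reshape(-1, 3)

    # Same two triangles per cell (and same winding) as the question's loop
    j, i = np.meshgrid(np.arange(n - 1), np.arange(n - 1), indexing="ij")
    jni = (j * n + i).ravel()
    faces = np.concatenate((np.stack((jni, jni + n + 1, jni + n), axis=1),
                            np.stack((jni, jni + 1, jni + 1 + n), axis=1)))

    tri = verts[faces]
    face_n = np.cross(tri[:, 1] - tri[:, 0], tri[:, 2] - tri[:, 0])
    centroid = tri.mean(axis=1)
    # Closest point on the core circle: project the centroid onto the xy-plane, scale to radius1
    core = centroid.copy()
    core[:, 2] = 0.0
    core[:, :2] *= radius1 / np.linalg.norm(core[:, :2], axis=1, keepdims=True)
    outward = centroid - core
    return float(np.mean(np.einsum("ij,ij->i", face_n, outward) > 0))
```

Running it with `v_sign=1.0` and `v_sign=-1.0` shows which sign of the `V` range makes the triangle winding (and therefore any winding-based normals) face outward.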

Any ideas on what might be causing the glitches seen in the image? (Or any advice on a better way to generate the triangles?)