I'm working my way through LDA models for text analysis; I've heard that the Mallet implementation is the best. However, it seems to generate very poor results when I compare it with the Gensim version, so I think I may be doing something wrong. Can anyone explain the discrepancy?

    import numpy as np  # needed for np.repeat below
    import gensim
    import gensim.corpora as corpora
    from gensim.corpora.dictionary import Dictionary
    from gensim.utils import simple_preprocess
    from gensim.models import CoherenceModel
    import pyLDAvis
    import pyLDAvis.gensim

    # Generate a toy corpus: three documents, each dominated by one word
    dog = list(np.repeat('dog', 500)) + list(np.repeat('cat', 20)) + list(np.repeat('bird', 20))
    cat = list(np.repeat('dog', 20)) + list(np.repeat('cat', 500)) + list(np.repeat('bird', 20))
    bird = list(np.repeat('dog', 20)) + list(np.repeat('cat', 20)) + list(np.repeat('bird', 500))

    texts = [dog, cat, bird]

    # Map each word to an integer id, then convert each document to bag-of-words
    id2word = corpora.Dictionary(texts)
    corpus = [id2word.doc2bow(i) for i in texts]
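
To make explicit what I expect a good model to recover, here is a small standalone check of the word shares in each toy document. It is only a sketch: it rebuilds the same corpus with plain Python lists (an assumption made so the snippet runs without NumPy or Gensim) and uses `collections.Counter` in place of the Gensim dictionary.

```python
from collections import Counter

# Same toy corpus as above, rebuilt with plain lists (no third-party packages)
dog = ['dog'] * 500 + ['cat'] * 20 + ['bird'] * 20
cat = ['dog'] * 20 + ['cat'] * 500 + ['bird'] * 20
bird = ['dog'] * 20 + ['cat'] * 20 + ['bird'] * 500
texts = [dog, cat, bird]

# Print the share of each word within each document
for name, doc in zip(['dog', 'cat', 'bird'], texts):
    counts = Counter(doc)
    total = sum(counts.values())
    shares = {word: round(n / total, 3) for word, n in counts.items()}
    print(name, shares)
```

Each document is roughly 93% one word (500 of 540 tokens), so I would expect three clearly separated topics, one per animal.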

    # Gensim model
    lda_model = gensim.models.ldamodel.LdaModel(corpus=corpus,
                                                id2word=id2word,
                                                num_topics=3,
                                                random_state=100,
                                                update_every=1,
                                                chunksize=100,
                                                passes=10,
                                                alpha='auto',
                                                per_word_topics=True)

    # Render the pyLDAvis visualisation and save it as HTML
    pyLDAvis.enable_notebook()
    vis = pyLDAvis.gensim.prepare(lda_model, corpus, id2word)
    vis = pyLDAvis.prepared_data_to_html(vis)
    with open("LDA_output.html", "w") as file:
        file.write(vis)

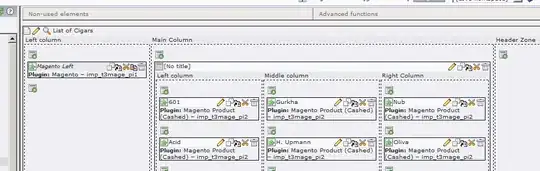

This gives the following plausible inference of topics:

However, things work very differently for the Mallet implementation:

    mallet_path = '/mallet-2.0.8/bin/mallet'

    ldamallet = gensim.models.wrappers.LdaMallet(mallet_path, corpus=corpus, num_topics=3,
                                                 iterations=1000, workers=4, id2word=id2word)

    # Convert the Mallet model to a Gensim LdaModel so that pyLDAvis can read it
    model = gensim.models.wrappers.ldamallet.malletmodel2ldamodel(ldamallet)

    pyLDAvis.enable_notebook()
    vis = pyLDAvis.gensim.prepare(model, corpus, id2word)
    vis = pyLDAvis.prepared_data_to_html(vis)
    with open("LDA_output.html", "w") as file:
        file.write(vis)

Here, there is very little difference between the topics that the model infers.
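
To put a number on "very little difference" instead of relying on the pyLDAvis plot alone, one option is to compare the topic-word distributions pairwise. The sketch below uses the Hellinger distance, which is my own choice of metric rather than anything either library prescribes. The two matrices are made-up placeholders, not output from either model, and `hellinger` and `pairwise_topic_distances` are hypothetical helper names. With the real models I assume the rows could be taken from `lda_model.get_topics()` and `model.get_topics()`.

```python
import math
from itertools import combinations


def hellinger(p, q):
    """Hellinger distance between two discrete distributions (0 = identical, 1 = disjoint)."""
    return math.sqrt(0.5 * sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q)))


def pairwise_topic_distances(topic_word):
    """Return {(i, j): distance} for every pair of topic rows."""
    return {(i, j): hellinger(topic_word[i], topic_word[j])
            for i, j in combinations(range(len(topic_word)), 2)}


# Made-up illustrative rows (columns: dog, cat, bird); NOT actual model output
separated = [[0.90, 0.05, 0.05], [0.05, 0.90, 0.05], [0.05, 0.05, 0.90]]
blurred = [[0.34, 0.33, 0.33], [0.33, 0.34, 0.33], [0.33, 0.33, 0.34]]

print(pairwise_topic_distances(separated))  # large distances: distinct topics
print(pairwise_topic_distances(blurred))    # distances near zero: near-identical topics
```

Distances close to zero for every pair would confirm that the topics are essentially the same distribution, which is what the Mallet plot suggests.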

Now, it seems to me that I'm making an elementary blunder here, possibly by not setting a relevant model parameter correctly. However, I'm baffled as to what that might be. I'd be grateful for any advice!