I am receiving the result of an API call, applying some transformations, and storing it in S3. Right now it stores one file per API call, which results in a LOT of files. The flow is:

InvokeHTTP->SplitJson->JoltTransformJSON (I don't need all the data)->EvaluateJsonPath->InferAvroSchema (500 samples)->ConvertJSONToAvro->PutS3Object

The JSON format is:

"data": {"value1": "test", "value2": "test2"},

"actions": [{"buy": 5, "sell": 6},{"buyAgain": 5, "sellAgain": 6}],

"Reactions": [{"buy": 5, "sell": 6}],

{"otherValue": "1",

"otherValue2": "2"}

Sometimes actions has values inside; in other cases it is "actions":[]. I drop Reactions using JoltTransformJSON with a remove operation, because it has a LOT of data I don't need.
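To make the two record shapes concrete, here is a small sketch in plain Python (not NiFi or Jolt code) of what the remove step does to each of them. The sample records reuse the field names from the format above; `drop_reactions` and `actions_item_keys` are hypothetical helper names, and the idea that the empty shape gives nothing to derive the actions item fields from is only my assumption about why the two shapes might be treated differently downstream.

```python
import json

# Two sample records using the field names from the format above.
with_actions = {
    "data": {"value1": "test", "value2": "test2"},
    "actions": [{"buy": 5, "sell": 6}, {"buyAgain": 5, "sellAgain": 6}],
    "Reactions": [{"buy": 5, "sell": 6}],
}
empty_actions = {
    "data": {"value1": "test", "value2": "test2"},
    "actions": [],
    "Reactions": [{"buy": 5, "sell": 6}],
}


def drop_reactions(record):
    """Hypothetical stand-in for the Jolt remove step: drop the Reactions key."""
    return {key: value for key, value in record.items() if key != "Reactions"}


def actions_item_keys(record):
    """Collect the field names seen inside actions; an empty list gives an empty set."""
    keys = set()
    for item in record.get("actions", []):
        keys.update(item)
    return keys


for record in (with_actions, empty_actions):
    cleaned = drop_reactions(record)
    # Print the cleaned record and the field names found inside actions.
    print(json.dumps(cleaned), sorted(actions_item_keys(cleaned)))
```

The second record carries no information about the fields inside actions, which is the difference I suspect matters once the records reach the Avro steps.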

To join the values I tried MergeContent, but it DROPS a lot of records. First I read through the possible configurations, then I started modifying parameters just to see how the output changed. It always DROPS a lot of records.

So now I'm storing one file per JSON in S3. That's a lot of files, and you can feel it when querying the data.

How can I improve the flow to store fewer files? Thank you!

---- EDIT: image added ----

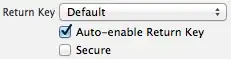

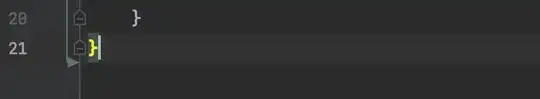

Current MergeContent configuration. I don't quite understand the Attribute Strategy property. Can it fix the changes in schema (actions with values vs. "actions":[])?

---- EDIT 2 ---- Now I can confirm that it is grouping by state as expected, but it is dropping the JSON FlowFiles that have "actions": [], even though they have the same state as some of the FlowFiles where that field is populated. Any ideas? Thanks!
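For clarity, this is the outcome I expect from the merge, sketched in plain Python rather than NiFi. The `state` field name, the sample values, and the `merge_by_state` helper are all made up for illustration; the point is only that records with an empty actions list should land in the same merged file as the other records with the same state.

```python
from collections import defaultdict
import json


def merge_by_state(records):
    """Group records by state and build one newline-delimited blob per state."""
    groups = defaultdict(list)
    for record in records:
        groups[record["state"]].append(record)
    return {
        state: "\n".join(json.dumps(r) for r in group)
        for state, group in groups.items()
    }


# Hypothetical sample: the state values and field contents are invented.
records = [
    {"state": "A", "actions": [{"buy": 5, "sell": 6}]},
    {"state": "A", "actions": []},
    {"state": "B", "actions": []},
]

merged = merge_by_state(records)
for state, blob in merged.items():
    # One output "file" per state, with every input record preserved.
    print(state, len(blob.splitlines()), "records")
```

In this sketch no record is lost: the total number of lines across the merged blobs equals the number of input records, which is what I am not seeing in my flow.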