I have gone through the paper "Attention Is All You Need", and though I think I understand the overall idea, I am confused about how the input is processed. Here are my doubts; for simplicity, let's assume we are talking about a language translation task.

1) The paper states that the input embedding has dimension 512. That would be the embedding vector of each word in the input sentence, right? So if the input sentence has 25 words, would the input to each layer be a 25×512 matrix?

2) Does this model use a fixed "MAX_LENGTH" across all its batches? By this, I mean identifying the longest sentence in the training set and padding all the other sentences to that MAX_LENGTH.

3) If the answer to question 2 is yes and a MAX_LENGTH is used, how does one process a test-time query that is longer than MAX_LENGTH?
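The three questions above can be made concrete with a small NumPy sketch of how a batch might be turned into encoder input. It assumes a hypothetical vocabulary of 1000 tokens, an untrained random embedding table, and padding id 0; `pad_batch`, `sinusoidal_positions`, and `encoder_input` are illustrative helpers, not code from the paper. It pads either to the longest sentence in the batch or to a fixed MAX_LENGTH, then adds the sinusoidal positional encoding described in the paper, which is a closed-form function of the position.

```python
import numpy as np

PAD_ID = 0                        # hypothetical padding token id
VOCAB_SIZE, D_MODEL = 1000, 512   # vocab size is an assumption; d_model = 512 as in the paper

rng = np.random.default_rng(0)
embedding = rng.normal(size=(VOCAB_SIZE, D_MODEL))  # hypothetical, untrained embedding table

def pad_batch(sentences, max_len=None, pad_id=PAD_ID):
    """Pad token-id lists to one length: the batch's longest sentence,
    or a fixed max_len (longer sentences are truncated)."""
    target = max_len if max_len is not None else max(len(s) for s in sentences)
    ids = np.full((len(sentences), target), pad_id, dtype=np.int64)
    lengths = np.array([min(len(s), target) for s in sentences])
    for row, s in enumerate(sentences):
        ids[row, :lengths[row]] = s[:target]
    # True where a real token sits, False where a position is padding.
    mask = np.arange(target)[None, :] < lengths[:, None]
    return ids, mask

def sinusoidal_positions(seq_len, d_model):
    """PE[pos, 2i] = sin(pos / 10000**(2i/d_model)), PE[pos, 2i+1] = cos(same).
    Assumes an even d_model; can be evaluated for any seq_len."""
    pos = np.arange(seq_len)[:, None]                   # (seq_len, 1)
    i = np.arange(d_model // 2)[None, :]                # (1, d_model/2)
    angles = pos / np.power(10000.0, 2 * i / d_model)   # (seq_len, d_model/2)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

def encoder_input(sentences, max_len=None):
    ids, mask = pad_batch(sentences, max_len)
    x = embedding[ids] * np.sqrt(D_MODEL)                # (batch, seq_len, d_model)
    x = x + sinusoidal_positions(ids.shape[1], D_MODEL)  # broadcast over the batch
    return x, mask

batch = [[5, 8, 2], [7, 3], [9, 4, 6, 1]]
x, mask = encoder_input(batch)                 # pad to the longest sentence in the batch
x_fixed, _ = encoder_input(batch, max_len=25)  # pad to a fixed MAX_LENGTH
print(x.shape, x_fixed.shape)  # (3, 4, 512) (3, 25, 512)
```

Each sentence becomes a (seq_len, 512) matrix with one row per token, and the boolean mask marks which rows are padding so that attention can ignore them (typically by giving those key positions a large negative score before the softmax). Because the positional encoding is computed from the position itself, nothing in this input pipeline caps the length; how well a trained model handles lengths it never saw during training is a separate, empirical matter.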

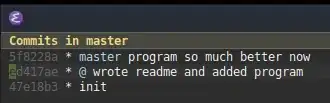

I have also referred to this video to get a better understanding https://www.youtube.com/watch?v=z1xs9jdZnuY and one of the frames that gives an overall idea of one single layer with 3 multi head attentions is this

Here you can see that the input has dimension 4×3 (for a simple representation, the embedding size is 3), and the final output of one layer of attention and the feed-forward network is also 4×3.
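The 4×3 toy example from the video frame can be reproduced with a minimal NumPy sketch of one encoder layer: three-head self-attention followed by a position-wise feed-forward network, each wrapped in a residual connection and layer normalization, following the encoder layer structure in the paper. The weights are random, the feed-forward hidden size of 8 is an arbitrary assumption, and with an embedding size of 3 split across 3 heads, each head works in a single dimension.

```python
import numpy as np

rng = np.random.default_rng(0)
seq_len, d_model, n_heads, d_ff = 4, 3, 3, 8   # toy sizes; d_ff = 8 is an arbitrary assumption
d_head = d_model // n_heads                     # 1 dimension per head here

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)       # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def layer_norm(x, eps=1e-6):
    # Learnable gain and bias omitted for brevity.
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def multi_head_attention(x, w_q, w_k, w_v, w_o):
    heads = []
    for h in range(n_heads):
        q, k, v = x @ w_q[h], x @ w_k[h], x @ w_v[h]   # each (seq_len, d_head)
        scores = q @ k.T / np.sqrt(d_head)             # (seq_len, seq_len)
        heads.append(softmax(scores) @ v)              # (seq_len, d_head)
    return np.concatenate(heads, axis=-1) @ w_o        # (seq_len, d_model)

def encoder_layer(x, p):
    # Sublayer 1: multi-head self-attention, then residual + layer norm.
    x = layer_norm(x + multi_head_attention(x, p["w_q"], p["w_k"], p["w_v"], p["w_o"]))
    # Sublayer 2: position-wise feed-forward network with ReLU, then residual + layer norm.
    ffn = np.maximum(0, x @ p["w_1"] + p["b_1"]) @ p["w_2"] + p["b_2"]
    return layer_norm(x + ffn)

params = {
    "w_q": rng.normal(size=(n_heads, d_model, d_head)),
    "w_k": rng.normal(size=(n_heads, d_model, d_head)),
    "w_v": rng.normal(size=(n_heads, d_model, d_head)),
    "w_o": rng.normal(size=(n_heads * d_head, d_model)),
    "w_1": rng.normal(size=(d_model, d_ff)), "b_1": np.zeros(d_ff),
    "w_2": rng.normal(size=(d_ff, d_model)), "b_2": np.zeros(d_model),
}

x = rng.normal(size=(seq_len, d_model))   # 4 tokens, embedding size 3
out = encoder_layer(x, params)
print(x.shape, out.shape)  # (4, 3) (4, 3)

# The same weights also accept a longer sequence, e.g. 7 tokens.
out7 = encoder_layer(rng.normal(size=(7, d_model)), params)
print(out7.shape)  # (7, 3)
```

Both sublayers map a (4, 3) input to a (4, 3) output, which is what lets layers be stacked. None of the weight shapes depend on the number of tokens, so the same layer runs unchanged on a 7-token input.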