I have a problem when searching for optimal xgboost hyperparameters with the mlr package in R, using random search, on Ubuntu 18.04. This is the setup code for the search:

```r
library(mlr)          # makeClassifTask, makeLearner, tuneParams, auc (attaches ParamHelpers)
library(parallelMap)  # parallelStartMulticore, parallelStop
library(parallel)     # detectCores

eta_value <- 0.05

set.seed(12345)

# 2. Create tasks
train.both$y <- as.factor(train.both$y) # altering y in train.both!
traintask <- makeClassifTask(data = train.both, target = "y")

# 3. Create learner
lrn <- makeLearner("classif.xgboost", predict.type = "prob")
lrn$par.vals <- list(
  objective = "binary:logistic",
  booster = "gbtree",
  eval_metric = "auc",
  early_stopping_rounds = 10,
  nrounds = xgbcv$best_iteration,  # xgbcv is defined earlier in the script (not shown)
  eta = eta_value,
  weight = train_data$weights      # train_data is defined earlier in the script (not shown)
)

# 4. Set parameter space
params <- makeParamSet(
  makeDiscreteParam("max_depth", values = c(4, 6, 8, 10)),
  makeNumericParam("min_child_weight", lower = 1, upper = 10),
  makeDiscreteParam("subsample", values = c(0.5, 0.75, 1)),
  makeDiscreteParam("colsample_bytree", values = c(0.4, 0.6, 0.8, 1)),
  makeNumericParam("gamma", lower = 0, upper = 7)
)

# 5. Set resampling strategy
rdesc <- makeResampleDesc("CV", stratify = TRUE, iters = 10L)

# 6. Search strategy
ctrl <- makeTuneControlRandom(maxit = 60L, tune.threshold = FALSE)

# Set parallel backend and tune parameters
parallelStartMulticore(cpus = detectCores())

# 7. Parameter tuning
timer <- proc.time()
mytune <- tuneParams(learner = lrn,
                     task = traintask,
                     resampling = rdesc,
                     measures = auc,
                     par.set = params,
                     control = ctrl,
                     show.info = TRUE)
proc.time() - timer

# Call the function (without parentheses R only prints it and the backend stays running)
parallelStop()
```

As you can see, I distribute the search across all my CPU cores. The problem is that the task has now been running for over 5 days and still has not finished. This is the mlr output displayed while the task runs:

```
[Tune] Started tuning learner classif.xgboost for parameter set:
                     Type len Def        Constr Req Tunable Trafo
max_depth        discrete   -   -      4,6,8,10   -    TRUE     -
min_child_weight  numeric   -   -       1 to 10   -    TRUE     -
subsample        discrete   -   -    0.5,0.75,1   -    TRUE     -
colsample_bytree discrete   -   - 0.4,0.6,0.8,1   -    TRUE     -
gamma             numeric   -   -        0 to 7   -    TRUE     -
With control class: TuneControlRandom
Imputation value: -0
Mapping in parallel: mode = multicore; level = mlr.tuneParams; cpus = 16; elements = 60.
```

I used to run this on my MacBook Pro, where it finished in approximately 8 hours. That laptop is a 15-inch 2018 model with a 2.6 GHz Intel Core i7 (6 cores) and 32 GB of DDR4 memory. Now I run it on a much more powerful machine; apart from the hardware, the only thing that has changed is the operating system, Ubuntu. The machine with the problem is a desktop with an Intel i9-9900K CPU @ 3.60GHz, which the OS reports as × 16 (16 logical cores on 8 physical cores). It runs GNOME 3.28.2 on a 64-bit OS and has 64 GB of RAM.

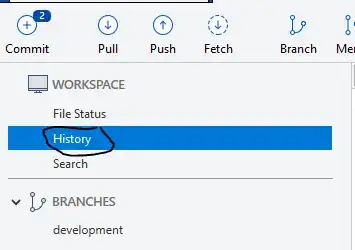

The screenshot above, taken while the mlr tuning task was running, shows that not all CPU cores are engaged, whereas on the MacBook Pro all of them were busy.

What is the problem here? Does it have to do with Ubuntu and how it handles parallelization? I found a somewhat similar question here, but it has no apparent solution either.
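One way to tell an OS-level problem apart from an mlr/xgboost one is to fork a plain base-R workload the same way and watch the system monitor. The sketch below is a hypothetical diagnostic, not part of my original setup: `busy_task` is an illustrative stand-in for one tuning iteration, and it assumes that parallelMap's `multicore` mode behaves like `parallel::mclapply` (forked worker processes), which is my understanding of how it works.

```r
library(parallel)

# Number of logical CPUs the OS reports (the same value passed as `cpus` above).
n_cpus <- detectCores()

# Hypothetical stand-in for one tuning iteration: a CPU-bound loop that
# returns the PID of the process that ran it.
busy_task <- function(i) {
  x <- 0
  for (k in seq_len(1e6)) x <- x + sqrt(k)
  Sys.getpid()
}

# Fork worker processes and spread 60 jobs over them (60 mirrors maxit = 60L).
# mclapply relies on fork(), so mc.cores > 1 is not supported on Windows.
pids <- mclapply(seq_len(60), busy_task, mc.cores = n_cpus)

# Number of distinct worker processes that actually did work.
n_workers <- length(unique(unlist(pids)))
cat("cpus requested:", n_cpus, "; distinct workers:", n_workers, "\n")
```

If every core is busy while this runs but not during the mlr run, the slowdown is more likely inside mlr or xgboost than in how Ubuntu handles forked processes.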

When I run the script from the terminal instead of from RStudio, the cores still do not seem to be engaged: