I want to predict a continuous variable (autoencoder). Since I have min-max scaled my inputs to the 0-1 interval, does it make sense to use a sigmoid activation in the output layer? Sigmoid does not seem to correspond to MSE loss, though. Any ideas?

-

Sigmoid with binary crossentropy is usually a good option. You can always use MSE too, no problem. – Daniel Möller Oct 19 '19 at 13:11

-

Binary cross entropy is used for binary outcomes; it has its own meaning. However, I want to predict a continuous variable, not a binary one. – pikachu Oct 19 '19 at 13:18

-

I see... Although in a neural network, everything is continuous, of course. Using binary crossentropy wouldn't be "wrong". It may indeed behave differently (see OverLord's answer), but the point of minimum loss will be your desired output, as with other losses. – Daniel Möller Oct 20 '19 at 00:10

2 Answers

SUMMARY: if unsure, go with binary_crossentropy + sigmoid. If most of your labels are 0's or 1's, or very close to them, try mae + hard_sigmoid.

EXPLANATION:

Loss functions define a model's priorities; for regression, the goal's to minimize deviation of predictions from ground truth (labels). With an activation bounded between 0 and 1, MSE will work.

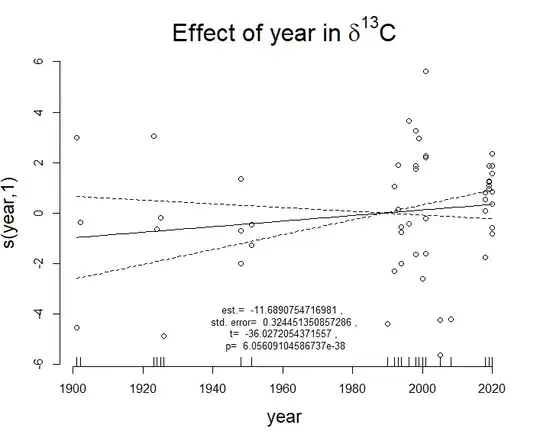

However, it may not be the best choice, in particular for normalized data. Below is a plot of MSE vs MAE for the [0, 1] interval. Key differences:

- MSE penalizes small differences much less than MAE

- MSE penalizes large differences, relative to its own penalties for small differences, much more than MAE

As a consequence of the above:

- MSE --> model is better at not being 'very wrong', but worse at being 'very right'

- MAE --> model is better at predicting all values on average, but doesn't mind 'very wrong' predictions
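To make the comparison concrete, here is a small NumPy sketch that evaluates both penalties at a few error sizes on the [0, 1] interval. The specific error values are arbitrary and chosen only for illustration.

```python
import numpy as np

# Absolute prediction errors on the [0, 1] interval (illustrative values)
errors = np.array([0.01, 0.1, 0.5, 0.9])

squared = errors ** 2      # per-sample contribution to MSE
absolute = np.abs(errors)  # per-sample contribution to MAE

for e, s, a in zip(errors, squared, absolute):
    print(f"error={e:.2f}  squared={s:.4f}  absolute={a:.2f}")

# Penalty of the largest error relative to the smallest one, per loss
mse_ratio = squared[-1] / squared[0]    # (0.9 / 0.01) ** 2
mae_ratio = absolute[-1] / absolute[0]  # 0.9 / 0.01
print(f"MSE ratio: {mse_ratio:.0f}, MAE ratio: {mae_ratio:.0f}")
```

Since every error is below 1, squaring always shrinks it, so small errors are barely penalized by MSE, while the ratio between the largest and smallest penalty is far greater for MSE than for MAE.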

As far as activations go, hard sigmoid may work better, especially if many of your values are equal to or very close to 0 or 1: it can reach exactly 0 or 1 (or approach them) much more quickly than sigmoid can. This should also serve as a form of regularization, since it is a form of linearization (--> weight decay).
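The saturation difference can be seen in a minimal NumPy sketch. It assumes the piecewise-linear form `clip(0.2 * x + 0.5, 0, 1)` for hard sigmoid; the exact slope and offset differ between libraries and versions, so treat the constants as an assumption.

```python
import numpy as np

def sigmoid(x):
    # Standard logistic function; approaches 0 and 1 only asymptotically
    return 1.0 / (1.0 + np.exp(-x))

def hard_sigmoid(x):
    # Assumed piecewise-linear approximation: clip(0.2 * x + 0.5, 0, 1)
    return np.clip(0.2 * x + 0.5, 0.0, 1.0)

x = np.array([-5.0, -1.0, 0.0, 1.0, 5.0])
s = sigmoid(x)
h = hard_sigmoid(x)

for xi, si, hi in zip(x, s, h):
    print(f"x={xi:+.1f}  sigmoid={si:.4f}  hard_sigmoid={hi:.4f}")
```

At the ends of this range the hard sigmoid has already reached exactly 0 and 1, while the sigmoid is still strictly inside the interval.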

Binary crossentropy: should generally work the best (w/ sigmoid)

In a sense, it's the best of both worlds: it's more 'evenly distributed' (over the non-asymptotic interval), and it strongly penalizes 'very wrong' predictions. In fact, BCE is much harsher on such predictions than MSE, so you should rarely if ever see a "0" predicted on a "1" label (except in validation). Just be sure not to pair it with hard sigmoid: hard sigmoid can output exactly 0 or 1, where the log terms in BCE diverge.
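As a rough numerical check, the sketch below compares the three losses for a `y == 0` label and then verifies, on a grid, that BCE with a continuous target is still minimized where the prediction equals the target. The helper `bce`, the clipping constant `eps`, and the sample values are illustrative choices, not any library's API.

```python
import numpy as np

def bce(y, p, eps=1e-7):
    # Binary crossentropy per sample; clipping avoids log(0)
    p = np.clip(p, eps, 1.0 - eps)
    return -(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))

# Predictions of increasing "wrongness" for a label of 0
p = np.array([0.1, 0.5, 0.9, 0.99])
y = 0.0

bce_vals = bce(y, p)
mse_vals = (y - p) ** 2
mae_vals = np.abs(y - p)

for pi, b, m, a in zip(p, bce_vals, mse_vals, mae_vals):
    print(f"pred={pi:.2f}  BCE={b:.3f}  MSE={m:.3f}  MAE={a:.3f}")

# With a continuous target, BCE is still minimized at p == y
y_cont = 0.3
grid = np.linspace(0.01, 0.99, 99)
best_p = grid[np.argmin(bce(y_cont, grid))]
print(f"BCE-minimizing prediction for y={y_cont}: {best_p:.2f}")
```

Note that for a continuous target the minimum BCE value is not zero, so the raw loss is harder to interpret than MSE or MAE even though the minimizer is the same.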

Autoencoders: strive to reconstruct their input. Depending on the application, you may:

1. Need to ensure that no single prediction bears too much significance. Ex: signal data. A single extremely wrong timestep could outweigh an otherwise excellent overall reconstruction

2. Have noisy data, and prefer a model more robust to noise

Given both of the above, especially (1), BCE may be undesirable. By treating all labels more 'equally', MAE may work better for (2).

MSE vs. MAE:

Sigmoid vs. Hard Sigmoid

Binary crossentropy vs. MSE vs. MAE (y == 0 case shown for BCE)


-

No, you cannot blindly recommend binary cross-entropy; it's a classification loss, and here the problem is clearly a regression problem (continuous outputs). – Dr. Snoopy Oct 20 '19 at 15:58

-

@MatiasValdenegro It holds for regression "on 0-1 interval", directly quoting the title. – OverLordGoldDragon Oct 22 '19 at 21:17

Use sigmoid activation and a regression loss such as mean_squared_error or mean_absolute_error
