I am working on a comparison of the fitting accuracy results for the different types of data quality. A "good data" is the data without any NA in the feature values. A "bad data" is the data with NA in the feature values. A "bad data" should be fixed by some value correction. As a value correction, it might be replacing NA with zero or mean value.

In my code, I am trying to perform multiple fitting procedures.

Review the simplified code:

from keras import backend as K

...

xTrainGood = ... # the good version of the xTrain data

xTrainBad = ... # the bad version of the xTrain data

...

model = Sequential()

model.add(...)

...

historyGood = model.fit(..., xTrainGood, ...) # fitting the model with

# the original data without

# NA, zeroes, or the feature mean values

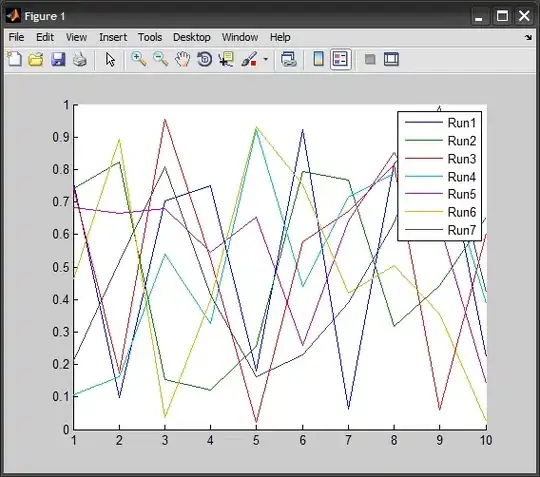

Review the fitting accuracy plot, based on historyGood data:

After that, the code resets a stored the model and re-train the model with the "bad" data:

K.clear_session()

historyBad = model.fit(..., xTrainBad, ...)

Review the fitting process results, based on historyBad data:

As one can notice, the initial accuracy > 0.7, which means the model "remembers" previous fitting.

For the comparison, this is the standalone fitting results of "bad" data:

How to reset the model to the "initial" state?