My company uses Qt3D to display its CAD graphics. For this application, we need to implement pixel-correct order-independent transparency. As a first step toward this goal, I tried to write a small app that renders a simple plane to a depth texture and an RGBA texture.
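
For context, here is a minimal sketch (separate from the app below, with made-up colors and hypothetical helpers `over` and `drawTwoLayers`) of why draw order matters. Conventional alpha blending composites each fragment over what is already in the framebuffer, and that operation is not commutative:

```cpp
#include <cassert>

// Straight (non-premultiplied) RGBA color; all values are illustrative.
struct Rgba { float r, g, b, a; };

// Porter-Duff "over": composite src on top of dst.
Rgba over(const Rgba& src, const Rgba& dst)
{
    assert(src.a >= 0.0f && src.a <= 1.0f && dst.a >= 0.0f && dst.a <= 1.0f);
    const float outA = src.a + dst.a * (1.0f - src.a);
    if (outA == 0.0f)
        return {0.0f, 0.0f, 0.0f, 0.0f};
    const auto channel = [&](float s, float d) {
        return (s * src.a + d * dst.a * (1.0f - src.a)) / outA;
    };
    return {channel(src.r, dst.r), channel(src.g, dst.g), channel(src.b, dst.b), outA};
}

// Draw two translucent layers over an opaque white background:
// first `bottom`, then `top`.
Rgba drawTwoLayers(const Rgba& bottom, const Rgba& top)
{
    const Rgba white{1.0f, 1.0f, 1.0f, 1.0f};
    return over(top, over(bottom, white));
}
```

With red = {1, 0, 0, 0.5} and green = {0, 1, 0, 0.5}, `drawTwoLayers(green, red)` gives (0.75, 0.5, 0.25) while `drawTwoLayers(red, green)` gives (0.5, 0.75, 0.25), so the same two surfaces produce different pixels depending on the order in which they are drawn.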

This is my "small" example app:

main.cpp

#include <QApplication>

#include <Qt3DRender/QTechniqueFilter>

#include <Qt3DCore/QTransform>

#include <Qt3DRender/QRenderSurfaceSelector>

#include <Qt3DRender/QParameter>

#include <Qt3DRender/QViewport>

#include <Qt3DRender/QTextureImage>

#include <Qt3DRender/QCamera>

#include <Qt3DRender/QCameraSelector>

#include <Qt3DRender/QClearBuffers>

#include <Qt3DRender/QTexture>

#include <Qt3DExtras/QPlaneMesh>

#include <Qt3DExtras/QTextureMaterial>

#include <Qt3DExtras/Qt3DWindow>

#include <Qt3DRender/QRenderTarget>

#include <Qt3DRender/QRenderTargetOutput>

#include <Qt3DRender/QRenderTargetSelector>

#include <QDebug>

#include "TextureMaterial.h"

namespace {

Qt3DCore::QEntity* createPlane()

{

auto entity = new Qt3DCore::QEntity;

auto mesh = new Qt3DExtras::QPlaneMesh;

mesh->setWidth(0.3);

mesh->setHeight(0.3);

entity->addComponent(mesh);

return entity;

}

}

int main(int argc, char** args) {

QApplication app(argc, args);

auto view = new Qt3DExtras::Qt3DWindow();

auto mClearBuffers = new Qt3DRender::QClearBuffers;

auto mMainCameraSelector = new Qt3DRender::QCameraSelector;

mMainCameraSelector->setCamera(view->camera());

auto mRenderSurfaceSelector = new Qt3DRender::QRenderSurfaceSelector;

auto mMainViewport = new Qt3DRender::QViewport;

mMainViewport->setNormalizedRect(QRectF(0, 0, 1, 1));

mClearBuffers->setClearColor(Qt::lightGray);

mClearBuffers->setBuffers(Qt3DRender::QClearBuffers::BufferType::ColorDepthBuffer);

mClearBuffers->setParent(mMainCameraSelector);

mRenderSurfaceSelector->setParent(mMainViewport);

mMainCameraSelector->setParent(mRenderSurfaceSelector);

auto renderTargetSelector = new Qt3DRender::QRenderTargetSelector(mMainCameraSelector);

auto renderTarget = new Qt3DRender::QRenderTarget(renderTargetSelector);

{ // Depth Texture Output

auto depthTexture = new Qt3DRender::QTexture2D;

depthTexture->setSize(400, 300);

depthTexture->setFormat(Qt3DRender::QAbstractTexture::TextureFormat::DepthFormat);

auto renderTargetOutput = new Qt3DRender::QRenderTargetOutput;

renderTargetOutput->setAttachmentPoint(Qt3DRender::QRenderTargetOutput::AttachmentPoint::Depth);

renderTargetOutput->setTexture(depthTexture);

renderTarget->addOutput(renderTargetOutput);

}

{// Image Texture Output

auto imageTexture = new Qt3DRender::QTexture2D;

imageTexture->setSize(400, 300);

imageTexture->setFormat(Qt3DRender::QAbstractTexture::TextureFormat::RGBA32F);

auto renderTargetOutput = new Qt3DRender::QRenderTargetOutput;

renderTargetOutput->setAttachmentPoint(Qt3DRender::QRenderTargetOutput::AttachmentPoint::Color1);

renderTargetOutput->setTexture(imageTexture);

renderTarget->addOutput(renderTargetOutput);

}

view->setActiveFrameGraph(mMainViewport);

view->activeFrameGraph()->dumpObjectTree();

auto rootEntity = new Qt3DCore::QEntity();

view->setRootEntity(rootEntity);

auto cameraEntity = view->camera();

cameraEntity->lens()->setPerspectiveProjection(45.0f, 1., 0.1f, 10000.0f);

cameraEntity->setPosition(QVector3D(0, 0.3, 0));

cameraEntity->setUpVector(QVector3D(0, 1, 0));

cameraEntity->setViewCenter(QVector3D(0, 0, 0));

{

auto mat = new TextureMaterial;

auto plane = createPlane();

plane->setParent(rootEntity);

auto trans = new Qt3DCore::QTransform;

//trans->setTranslation(QVector3D(0, -2, 0));

trans->setRotation(QQuaternion::fromAxisAndAngle({ 1,0,0 }, 80));

plane->addComponent(trans);

plane->addComponent(mat);

}

view->setWidth(400);

view->setHeight(300);

view->show();

app.exec();

}

TextureMaterial.h

#pragma once

#include <Qt3DRender/QMaterial>

namespace Qt3DRender {

class QTechnique;

class QRenderPass;

class QShaderProgram;

}

class TextureMaterial : public Qt3DRender::QMaterial

{

Q_OBJECT

public:

explicit TextureMaterial(Qt3DCore::QNode* parent = nullptr);

~TextureMaterial();

};

TextureMaterial.cpp

#include "TextureMaterial.h"

#include <Qt3DRender/QMaterial>

#include <Qt3DRender/QEffect>

#include <Qt3DRender/QTechnique>

#include <Qt3DRender/QRenderPass>

#include <Qt3DRender/QShaderProgram>

#include <Qt3DRender/QParameter>

#include <Qt3DRender/QGraphicsApiFilter>

#include <Qt3DRender/QAbstractTexture>

#include <QUrl>

TextureMaterial::TextureMaterial(Qt3DCore::QNode* parent) : QMaterial(parent)

{

auto colorParm = new Qt3DRender::QParameter(QStringLiteral("diffuseColor"), QColor::fromRgbF(0.0,1.0,0.1,0.8)); // Green!!!

auto shaderProgram = new Qt3DRender::QShaderProgram;

shaderProgram->setVertexShaderCode(Qt3DRender::QShaderProgram::loadSource(QUrl(QStringLiteral("qrc:/shader.vert"))));

shaderProgram->setFragmentShaderCode(Qt3DRender::QShaderProgram::loadSource(QUrl(QStringLiteral("qrc:/shader.frag"))));

auto technique = new Qt3DRender::QTechnique;

technique->graphicsApiFilter()->setApi(Qt3DRender::QGraphicsApiFilter::OpenGL);

technique->graphicsApiFilter()->setMajorVersion(3);

technique->graphicsApiFilter()->setMinorVersion(1);

technique->graphicsApiFilter()->setProfile(Qt3DRender::QGraphicsApiFilter::CoreProfile);

auto renderPass = new Qt3DRender::QRenderPass;

renderPass->setShaderProgram(shaderProgram);

technique->addRenderPass(renderPass);

auto effect = new Qt3DRender::QEffect;

effect->addTechnique(technique);

effect->addParameter(colorParm);

setEffect(effect);

}

TextureMaterial::~TextureMaterial()

{

}

shader.frag

#version 150 core

uniform vec4 diffuseColor;

in vec3 position;

out vec4 blendedColor;

out float depth;

void main()

{

depth=gl_FragCoord.z;

blendedColor=diffuseColor;

}

shader.vert

#version 150 core

in vec3 vertexPosition;

out vec3 position;

uniform mat4 modelView;

uniform mat4 mvp;

void main()

{

gl_Position = mvp * vec4( vertexPosition, 1.0 );

}

files.qrc

<!DOCTYPE RCC><RCC version="1.0">

<qresource prefix="/">

<file>shader.frag</file>

<file>shader.vert</file>

</qresource>

</RCC>

Since all outputs of the fragment shader should be rendered to these two textures, I expected to see nothing inside my Qt3DWindow.

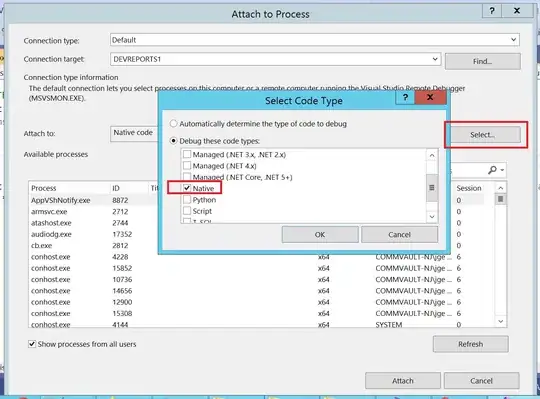

Surprisingly, there is some output, and it depends on the order in which blendedColor and depth are declared in my fragment shader.

If depth is declared first, I see the depth buffer; if blendedColor comes first, I see the diffuseColor.

Here is what I'll see:

Basically, I have two questions:

- Why can I see the

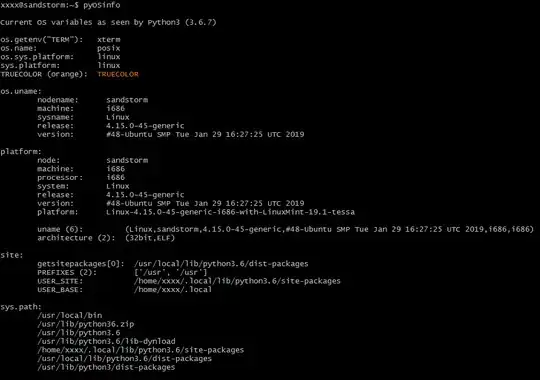

depthandblendedColorin my viewer, if my render target is texture? - How can I inspect, what is being rendered in the textures

depthTextureandimageTexture?- Is there some way to save these textures as images, for example?
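
As a reference for checking the depth output, here is a minimal sketch (not part of my app) of the value I would expect gl_FragCoord.z to hold for the camera configured above (near plane 0.1, far plane 10000). The helper names `windowDepth` and `eyeDistance` are made up for illustration. The sketch assumes the standard OpenGL perspective matrix and the default depth range of [0, 1]; I have not verified that Qt3D uses exactly this convention.

```cpp
#include <cassert>
#include <cmath>

// Window-space depth for a point `eyeDist` units in front of the camera,
// assuming a standard OpenGL perspective projection and depth range [0, 1].
double windowDepth(double eyeDist, double zNear, double zFar)
{
    assert(zNear > 0.0 && zFar > zNear && eyeDist > 0.0);
    // Normalized device z in [-1, 1]: -1 at the near plane, +1 at the far plane.
    const double ndcZ = (zFar + zNear) / (zFar - zNear)
                      - (2.0 * zFar * zNear) / ((zFar - zNear) * eyeDist);
    // Map [-1, 1] to the [0, 1] depth range.
    return 0.5 * ndcZ + 0.5;
}

// Inverse mapping: recover the eye-space distance from a stored depth value.
double eyeDistance(double depth, double zNear, double zFar)
{
    assert(zNear > 0.0 && zFar > zNear);
    const double ndcZ = 2.0 * depth - 1.0;
    return (2.0 * zFar * zNear) / (zFar + zNear - ndcZ * (zFar - zNear));
}
```

Under these assumptions, `windowDepth(0.3, 0.1, 10000.0)` is roughly 0.667 for a point 0.3 units from the camera (the distance between the camera position and the view center above). Anything farther than about 10 units maps to values above 0.99, so a saved depth image may look almost uniformly white unless it is first linearized with something like `eyeDistance`.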