I am trying to implement a bidirectional LSTM model with GloVe embeddings in Python using Keras. The model architecture is shown below:

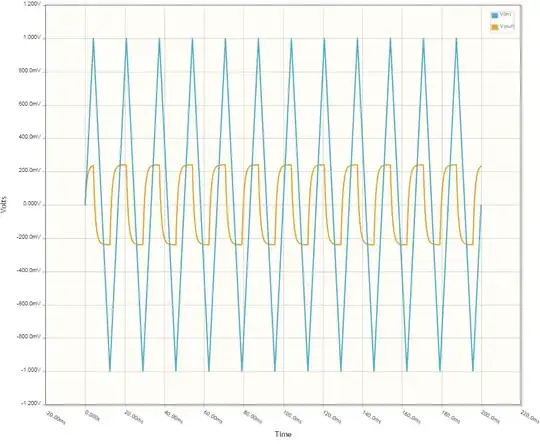

The model works fine when it is run without any preprocessing of the input data. The image below shows the output of the model:

As part of preprocessing, the input data is lemmatized using spaCy and then passed into the model.

Lemmatization preprocessing code:

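    import spacy

    # Load the English model (name kept exactly as in my setup)
    nlp = spacy.load("en_core_web_sm-2.1.0")
    doc = nlp(sentence)
    # Join the lemma of every token back into a single string
    lemma_sent = " ".join([token.lemma_ for token in doc])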
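One check that may help narrow this down, offered only as a minimal sketch: compare how many tokens are missing from the GloVe vocabulary before and after lemmatization. The function name `oov_rate`, the toy `glove_vocab` set, and the sample sentences are all hypothetical; in practice the vocabulary would be the set of words read from the GloVe file. The `-PRON-` token reflects how spaCy 2.x lemmatizes pronouns, which is an assumption about what the lemmatized text looks like here.

```python
def oov_rate(sentences, vocab):
    """Return the fraction of whitespace-separated tokens not found in vocab."""
    total = 0
    missing = 0
    for sentence in sentences:
        for token in sentence.lower().split():
            total += 1
            if token not in vocab:
                missing += 1
    # Avoid dividing by zero when there are no tokens at all.
    return missing / total if total else 0.0


# Hypothetical stand-in for the set of words in the GloVe file.
glove_vocab = {"i", "was", "be", "running", "run", "home"}

raw = ["I was running home"]
lemmatized = ["-PRON- be run home"]  # assumed spaCy 2.x style output

raw_rate = oov_rate(raw, glove_vocab)
lemma_rate = oov_rate(lemmatized, glove_vocab)
print(raw_rate, lemma_rate)
```

If the rate is clearly higher for the lemmatized text, more inputs would map to zero or random embedding vectors, which could be one reason the training output changes.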

The following call fits the model:

    model.fit(data_train, train_label, epochs=5, batch_size=32, verbose=True, validation_data=(data_test, test_label))
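Before calling `model.fit`, it might be worth sanity-checking the arrays produced by the preprocessing. The sketch below is an assumption-laden illustration, not part of the original code: `check_inputs` is a hypothetical helper, it assumes the sequences are padded with index 0, and it assumes the labels are plain scalars rather than one-hot vectors.

```python
def check_inputs(padded_rows, labels):
    """Summarize padded integer sequences and their labels before training."""
    if len(padded_rows) != len(labels):
        raise ValueError("data and labels have different lengths")
    # Rows that contain only the padding index 0 carry no information.
    empty_rows = sum(1 for row in padded_rows if not any(row))
    # Count how often each label occurs to spot a skewed or broken split.
    label_counts = {}
    for label in labels:
        label_counts[label] = label_counts.get(label, 0) + 1
    return {
        "rows": len(padded_rows),
        "empty_rows": empty_rows,
        "label_counts": label_counts,
    }


# Toy data standing in for data_train and train_label.
sample_rows = [[0, 0, 3, 5], [0, 0, 0, 0], [0, 2, 7, 1]]
sample_labels = [1, 0, 1]
summary = check_inputs(sample_rows, sample_labels)
print(summary)
```

Running the same check on the data with and without lemmatization would show whether the preprocessing step changes the number of empty rows or the label alignment.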

However, after lemmatizing the train and test data, the model gives the output below for each epoch:

Please help me understand why the model behaves as shown above with lemmatization. The model summary looks the same both with and without lemmatization.