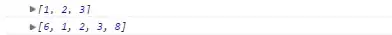

I have a big table of data that I read from Excel into Python, where I perform some calculations. My dataframe looks like the one in the image above; my real table is bigger and more complex, but the logic stays the same:

where `My_cal_spread = set1 + set2` and `Errors = abs(My_cal_spread - Spread)`.

My goal is to use SciPy's `minimize` to find the single combination of (set1, set2) that is used in every row, so that My_cal_spread is as close as possible to Spread, i.e. so that the sum of the errors is as small as possible.
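Written out, and assuming one (set1, set2) pair is shared by all rows (my reading of the goal; $s_1$, $s_2$ and $n$ are just notation for set1, set2 and the number of rows), the objective is:

$$
\min_{s_1,\, s_2} \; \sum_{i=1}^{n} \bigl| (s_1 + s_2) - \text{Spread}_i \bigr|
$$

Because only the sum $s_1 + s_2$ enters this objective, any pair whose sum equals a median of the Spread column minimizes it, so the split between set1 and set2 on its own is not unique unless the real table adds more structure.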

This is the solution I get when I use Excel Solver; I'm looking to reproduce the same result with SciPy. Thanks!

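My code looks like this:

```python
import pandas as pd

# df is the DataFrame previously loaded from Excel (e.g. with pd.read_excel)

# my_cal_Spread = set1 + set2, computed for every row at once
df['my_cal_Spread'] = df['set1'] + df['set2']

# Absolute error per row; assigning the whole column also creates 'errors'
# and avoids chained assignment like df['errors'].iloc[i] = ...
df['errors'] = (df['my_cal_Spread'] - df['Spread']).abs()

errors_sum = df['errors'].sum()
```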
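A minimal sketch of how this calculation could be wrapped into an objective for `scipy.optimize.minimize`, assuming (as in my reading of the goal) that one scalar set1 and one scalar set2 are shared by every row. The sample `Spread` values, the `total_error` helper and the starting guess are hypothetical, made up for illustration:

```python
import numpy as np
import pandas as pd
from scipy.optimize import minimize

# Hypothetical sample data standing in for the table read from Excel
df = pd.DataFrame({"Spread": [1.0, 2.0, 4.0, 7.0, 10.0]})


def total_error(x, spread):
    """Hypothetical helper: sum of absolute errors when one (set1, set2) pair is used on every row."""
    set1, set2 = x
    my_cal_spread = set1 + set2  # same value for every row under this assumption
    return np.abs(my_cal_spread - spread).sum()


spread = df["Spread"].to_numpy()
x0 = np.array([0.5, 0.5])  # arbitrary starting guess for (set1, set2)

# Nelder-Mead needs no gradient, which suits the non-smooth abs() objective
res = minimize(total_error, x0, args=(spread,), method="Nelder-Mead")

# Write the fitted pair back and recompute the columns from the question
set1_opt, set2_opt = res.x
df["set1"] = set1_opt
df["set2"] = set2_opt
df["my_cal_Spread"] = df["set1"] + df["set2"]
df["errors"] = (df["my_cal_Spread"] - df["Spread"]).abs()
errors_sum = df["errors"].sum()

print(res.x, res.fun, errors_sum)
```

Nelder-Mead is chosen here because `abs()` makes the objective non-smooth, and gradient-based methods such as the default BFGS can struggle at the kinks. Since only `set1 + set2` appears in the objective, the solver returns one of many equally good splits; with this sample data their sum should land near the median Spread (4.0).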