I am trying to back up and restore a Rancher server (single-node install), as described here.

After taking the backup, I shut down the Rancher server node and started a new Rancher container on a new node (same network, but a different IP address), then restored from the backup file.

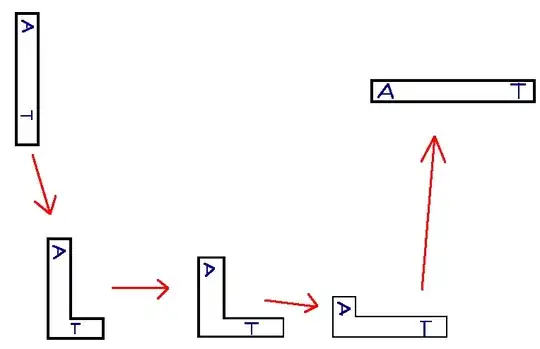

After restoring, I logged in to the rancher UI and it showed the error below:

So I checked the Rancher server logs, which showed the following:

    2019-10-05 16:41:32.197641 I | http: TLS handshake error from 127.0.0.1:38388: EOF
    2019-10-05 16:41:32.202442 I | http: TLS handshake error from 127.0.0.1:38380: EOF
    2019-10-05 16:41:32.210378 I | http: TLS handshake error from 127.0.0.1:38376: EOF
    2019-10-05 16:41:32.211106 I | http: TLS handshake error from 127.0.0.1:38386: EOF
    2019/10/05 16:42:26 [ERROR] ClusterController c-4pgjl [user-controllers-controller] failed with : failed to start user controllers for cluster c-4pgjl: failed to contact server: Get https://192.168.94.154:6443/api/v1/namespaces/kube-system?timeout=30s: waiting for cluster agent to connect
    2019/10/05 16:44:34 [ERROR] ClusterController c-4pgjl [user-controllers-controller] failed with : failed to start user controllers for cluster c-4pgjl: failed to contact server: Get https://192.168.94.154:6443/api/v1/namespaces/kube-system?timeout=30s: waiting for cluster agent to connect
    2019/10/05 16:48:50 [ERROR] ClusterController c-4pgjl [user-controllers-controller] failed with : failed to start user controllers for cluster c-4pgjl: failed to contact server: Get https://192.168.94.154:6443/api/v1/namespaces/kube-system?timeout=30s: waiting for cluster agent to connect
    2019-10-05 16:50:19.114475 I | mvcc: store.index: compact 75951
    2019-10-05 16:50:19.137825 I | mvcc: finished scheduled compaction at 75951 (took 22.527694ms)
    2019-10-05 16:55:19.120803 I | mvcc: store.index: compact 76282
    2019-10-05 16:55:19.124813 I | mvcc: finished scheduled compaction at 76282 (took 2.746382ms)

After that, I checked the logs on the master nodes and found that the Rancher agent is still trying to connect to the old Rancher server (the old IP address) instead of the new one, so the cluster is unavailable.

How can I fix this?