I have an API running as a function on AWS Lambda that uses DynamoDB as its data store. One particular endpoint is used infrequently, but it takes relatively large data sets and does several things with them, pushing data to DynamoDB (it's essentially a project setup step). In one test case it creates about 1800 items across two tables and pushes them, along with two or three individual items that go to other tables. However, I'm getting a gateway timeout response when I do this. If I reduce the size to around 800-1000 items it seems to work, but with much more than that I get a gateway timeout.
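To make the workload concrete, here is a minimal sketch of the kind of batching involved. It is not the actual code from the function. It assumes the items are written with DynamoDB's `BatchWriteItem`, which accepts at most 25 put or delete requests per call; the table name `ProjectItems` and the helper names `chunked` and `build_batch_requests` are hypothetical.

```python
from typing import Any, Dict, Iterable, Iterator, List

# BatchWriteItem accepts at most 25 put/delete requests per call.
MAX_BATCH_SIZE = 25


def chunked(items: Iterable[Any], size: int = MAX_BATCH_SIZE) -> Iterator[List[Any]]:
    """Yield consecutive lists of at most `size` items."""
    batch: List[Any] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:  # emit the final, partially filled batch
        yield batch


def build_batch_requests(
    table_name: str, items: Iterable[Dict[str, Any]]
) -> List[Dict[str, List[Dict[str, Any]]]]:
    """Build one RequestItems payload per batch for a single table.

    `table_name` and the item shape are placeholders (assumptions),
    not the real schema.
    """
    return [
        {table_name: [{"PutRequest": {"Item": item}} for item in batch]}
        for batch in chunked(items)
    ]
```

Each payload would then be passed as `RequestItems` to a `batch_write_item` call, with any `UnprocessedItems` in the response retried. Under these assumptions, 1800 items in a single table would mean at least 72 sequential calls, which gives a sense of how much work one request to this endpoint triggers.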

Now the strange part is that I have raised the timeout for the Lambda function to 90 seconds, but I get a 504 back sooner than that. The CloudWatch logs say the function timed out after about 90 seconds (90,000 ms), but I started a stopwatch when I started the upload and stopped it when I got the 504, and it read about 32 seconds...

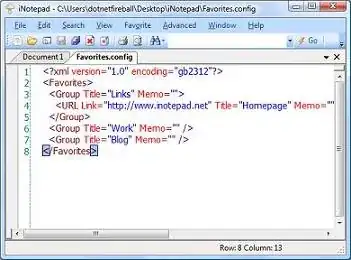

Here is the Lambda configuration:

And the logs from Lambda:

And the Chrome devtools timing from the client that made the request (same request as the logs):

How else should I handle this? Is there a way to set a really high timeout for this endpoint but not the others? Most importantly, why does the 504 come back before the configured timeout while the logs report that the full time was taken? What am I not understanding here?