I would like to design a neural network for a multi-task deep learning problem. Within the Keras API we can use either the "Sequential" or the "Functional" approach to build such a network. Below is the code I used to build a network with two outputs using each approach:

Sequential

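    from keras.models import Sequential
    from keras.layers import LSTM, Dense

    # Single output layer with two units
    seq_model = Sequential()
    seq_model.add(LSTM(32, input_shape=(10, 2)))
    seq_model.add(Dense(8))
    seq_model.add(Dense(2))
    seq_model.summary()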

Functional

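    from keras.models import Model
    from keras.layers import Input, LSTM, Dense

    # Two separate single-unit output layers sharing the same hidden layers
    input1 = Input(shape=(10, 2))
    lay1 = LSTM(32)(input1)  # the input shape is already defined by Input
    lay2 = Dense(8)(lay1)
    out1 = Dense(1)(lay2)
    out2 = Dense(1)(lay2)
    func_model = Model(inputs=input1, outputs=[out1, out2])
    func_model.summary()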

When I look at the summary output of both models, each contains an identical number of trainable parameters:

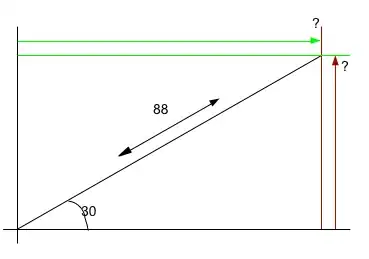
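To check that the identical totals are not a coincidence, the counts can be worked out by hand. This is only a sketch, assuming the default layer settings (biases enabled, the standard four-gate LSTM); the helper names `lstm_params` and `dense_params` are my own and not part of Keras.

```python
def lstm_params(units, input_dim):
    # Four gates, each with input weights, recurrent weights and one bias per unit
    return 4 * (units * (input_dim + units) + units)


def dense_params(units, input_dim):
    # One weight per input per unit, plus one bias per unit
    return units * (input_dim + 1)


# Layers shared by both models: LSTM(32) on 2 features, then Dense(8)
shared = lstm_params(32, 2) + dense_params(8, 32)

# Sequential: one Dense(2) output layer on top of the 8 hidden units
sequential_total = shared + dense_params(2, 8)

# Functional: two Dense(1) output layers on top of the same 8 hidden units
functional_total = shared + 2 * dense_params(1, 8)

print(sequential_total, functional_total)  # prints: 4762 4762
```

Under these assumptions, both output variants add 18 parameters (16 weights and 2 biases) on top of the shared layers.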

Up to this point everything looks fine. However, I start doubting myself when I plot both models (using keras.utils.plot_model), which results in the following graphs:

I do not know how to interpret these. With a multi-task learning approach, I want all neurons (in my case 8) of the layer before the output layer to connect to both output neurons. This is clearly visible in the Functional model (where I have two Dense(1) instances), but it is not obvious from the Sequential model. Nevertheless, the number of trainable parameters is identical, which suggests that in the Sequential model the last hidden layer is also fully connected to both neurons of the Dense(2) output layer.
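To make that suspicion concrete, here is a small plain-Python sketch of the forward pass of the output layers only. It assumes linear Dense layers without activation, as in my code above, and uses made-up random weights; the names `hidden`, `dense_unit` and `kernel` are purely illustrative. It only compares the computed outputs and says nothing about how the two models are compiled or trained.

```python
import random

random.seed(0)

# Pretend activations of the 8 neurons in the layer before the output
hidden = [random.uniform(-1, 1) for _ in range(8)]

# Functional variant: two Dense(1) heads, each with 8 weights and 1 bias
w1 = [random.uniform(-1, 1) for _ in range(8)]
w2 = [random.uniform(-1, 1) for _ in range(8)]
b1, b2 = 0.1, -0.2


def dense_unit(x, w, b):
    # A single linear neuron: weighted sum of all inputs plus a bias
    return sum(xi * wi for xi, wi in zip(x, w)) + b


head_outputs = [dense_unit(hidden, w1, b1), dense_unit(hidden, w2, b2)]

# Sequential variant: one Dense(2) layer whose 8x2 kernel has w1 and w2
# as its columns, and whose bias vector is [b1, b2]
kernel = [[w1[i], w2[i]] for i in range(8)]
bias = [b1, b2]
joint_outputs = [
    sum(hidden[i] * kernel[i][j] for i in range(8)) + bias[j]
    for j in range(2)
]

# Both variants connect all 8 hidden neurons to each output neuron
same = all(abs(a - b) < 1e-12 for a, b in zip(head_outputs, joint_outputs))
print(same)
```

If this reasoning holds, the two output variants compute the same function for matching weights, and the visible difference is that the Functional model returns two separate tensors while the Sequential model returns one tensor with two columns.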

Could anybody explain the differences between these two examples, or are they fully identical and do they result in the same neural network architecture? Also, which one would be preferred in this case?

Thanks a lot in advance.