We are currently using GL_SRGB8_ALPHA8 for FBO color correction, but it causes significant color banding in darker scenes.

Is there a version of GL_SRGB8_ALPHA8 that has 10 bits per color channel (similar to GL_RGB10_A2)? If not, what workarounds are there for this use case?

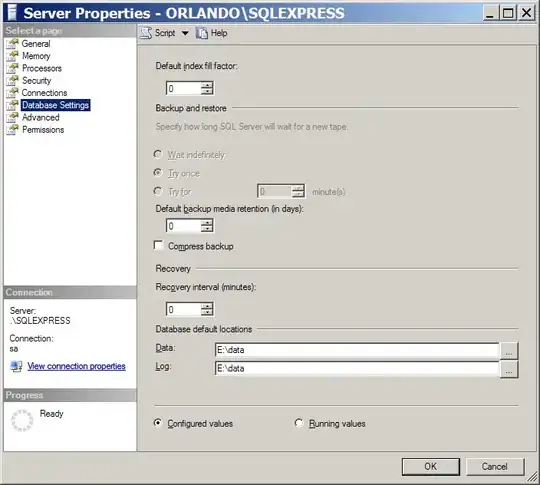

The contrast of the attached image has been increased to make the banding more visible, but it is still noticeable in the original as well.