I have a video processing application that moves a lot of data.

To speed things up, I have built a lookup table, since many of the calculations only need to be done once and their results can be reused.

However, I'm at the point where the lookups now take 30% of the processing time. I'm wondering if slow RAM might be the cause. Either way, I would still like to try to optimize it some more.

Currently I have the following:

```csharp
public readonly int[] largeArray = new int[3000*2000];
public readonly int[] lookUp = new int[width*height];
```

I then perform a lookup with an index p (which is equivalent to width * y + x) to fetch the result.

```csharp
int[] newResults = new int[width*height];
int p = 0; // flat index, equal to width * y + x

for (int y = 0; y < height; y++) {
    for (int x = 0; x < width; x++, p++) {
        newResults[p] = largeArray[lookUp[p]];
    }
}
```

Note that I cannot do an entire array copy to optimize. Also, the application is heavily multithreaded.

I made some progress by shortening the call stack: there are no getters now, just direct retrieval from a readonly array.

I've also tried converting the arrays to ushort, but that seemed to be slower (as I understand it, due to word size).

Would an IntPtr be faster? How would I go about that?
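To make the question concrete, here is a minimal, unmeasured sketch of what I imagine a pointer-based version would look like, using `fixed` and `int*` rather than `IntPtr`. The names `LookupSketch` and `Gather` are made up for illustration, and it assumes the project is compiled with unsafe code enabled. I don't know whether this is actually any faster than the indexed loop.

```csharp
using System;

public static class LookupSketch
{
    // Hypothetical helper: writes source[lookUp[i]] into results[i] for every i.
    public static unsafe void Gather(int[] source, int[] lookUp, int[] results)
    {
        if (results.Length < lookUp.Length)
            throw new ArgumentException("results is shorter than lookUp");

        // fixed pins the arrays so the GC cannot move them while raw pointers are in use.
        fixed (int* src = source, idx = lookUp, dst = results)
        {
            int n = lookUp.Length;
            for (int i = 0; i < n; i++)
            {
                // No bounds checks here: an out-of-range value in lookUp reads invalid memory.
                dst[i] = src[idx[i]];
            }
        }
    }
}
```

It would be called as `LookupSketch.Gather(largeArray, lookUp, newResults);` in place of the nested loops.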

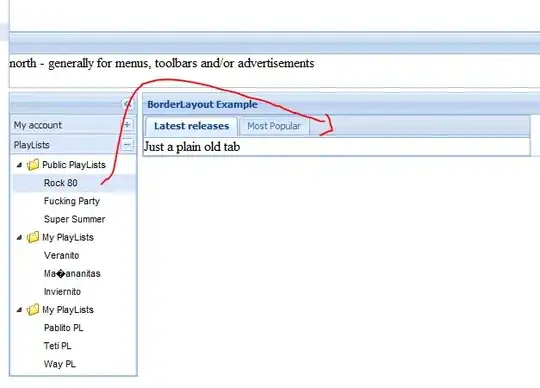

Attached below is a screenshot of time distribution: