The exponential function does not fit your data well. Consider another modeling function.

Given

    import numpy as np
    import scipy.optimize as opt
    import matplotlib.pyplot as plt
    # Jupyter magic; omit when running as a plain script
    %matplotlib inline

    x_samp = np.array([7e-09, 9e-09, 1e-08, 2e-8, 1e-6])
    y_samp = np.array([790, 870, 2400, 2450, 3100])

    def func(x, a, b):
        """Return a logarithmic result."""
        return a + b*np.log(x)

    def func2(x, a, b, c):
        """Return a 'power law' result."""
        return a/np.power(x, b) + c

Code

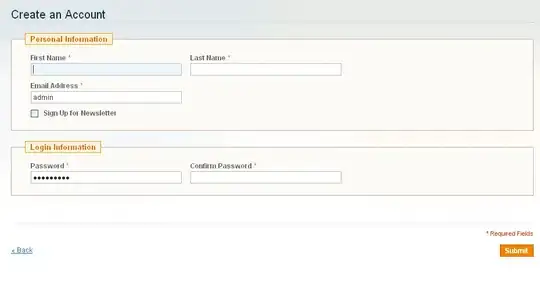

From @Allan Lago's logarithmic model:

    # REGRESSION ------------------------------------------------------------------
    x_lin = np.linspace(x_samp.min(), x_samp.max(), 50)

    w, _ = opt.curve_fit(func, x_samp, y_samp)
    print("Estimated Parameters", w)

    # Model
    y_model = func(x_lin, *w)

    # PLOT ------------------------------------------------------------------------
    # Visualize data and fitted curves
    plt.plot(x_samp, y_samp, "ko", label="Data")
    plt.plot(x_lin, y_model, "k--", label="Fit")
    plt.xticks(np.arange(0, x_samp.max(), x_samp.max()/2))
    plt.title("Least squares regression")
    plt.legend(loc="upper left")

Estimated Parameters [8339.61062739 367.6992259 ]

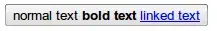
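
As a cross-check on the logarithmic model above: `a + b*np.log(x)` is linear in `a` and `b`, so the same least-squares estimate can be obtained in closed form without an iterative solver. The following is a minimal sketch of that idea using `np.polyfit` on `log(x)`; the names `a_lin` and `b_lin` are illustrative and not part of the original code. The values should agree with the `curve_fit` estimate to within numerical precision.

```python
import numpy as np

x_samp = np.array([7e-09, 9e-09, 1e-08, 2e-8, 1e-6])
y_samp = np.array([790, 870, 2400, 2450, 3100])

# y = a + b*log(x) is a straight line in u = log(x), so a degree-1
# polynomial fit returns b (slope) first and a (intercept) second.
b_lin, a_lin = np.polyfit(np.log(x_samp), y_samp, 1)

print("a =", a_lin, "b =", b_lin)
```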

Using @James Phillips' "Polytrope" model:

    # REGRESSION ------------------------------------------------------------------
    p0 = [1, 1, 1]

    w, _ = opt.curve_fit(func2, x_samp, y_samp, p0=p0)
    print("Estimated Parameters", w)

    # Model
    y_model = func2(x_lin, *w)

    # PLOT ------------------------------------------------------------------------
    # Visualize data and fitted curves
    plt.plot(x_samp, y_samp, "ko", label="Data")
    plt.plot(x_lin, y_model, "k--", label="Fit")
    plt.xticks(np.arange(0, x_samp.max(), x_samp.max()/2))
    plt.title("Least squares regression")
    plt.legend()

Estimated Parameters [-3.49305043e-10 1.57259788e+00 3.05801283e+03]
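
To put a number on how the two candidate models compare, one option is to compute the coefficient of determination (R²) for each. The sketch below does not refit anything; it plugs in the parameter estimates printed above. The helper `r_squared` and the names `w_log` and `w_pow` are hypothetical and introduced only for this illustration.

```python
import numpy as np

x_samp = np.array([7e-09, 9e-09, 1e-08, 2e-8, 1e-6])
y_samp = np.array([790, 870, 2400, 2450, 3100])

def func(x, a, b):
    """Return a logarithmic result."""
    return a + b*np.log(x)

def func2(x, a, b, c):
    """Return a 'power law' result."""
    return a/np.power(x, b) + c

def r_squared(y, y_pred):
    """Return 1 - SS_res/SS_tot for observed y and predicted y_pred."""
    ss_res = np.sum((y - y_pred)**2)
    ss_tot = np.sum((y - np.mean(y))**2)
    return 1 - ss_res/ss_tot

# Parameter estimates copied from the printed output above
w_log = [8339.61062739, 367.6992259]
w_pow = [-3.49305043e-10, 1.57259788e+00, 3.05801283e+03]

r2_log = r_squared(y_samp, func(x_samp, *w_log))
r2_pow = r_squared(y_samp, func2(x_samp, *w_pow))

print("R^2 (logarithmic):", r2_log)
print("R^2 (power law):  ", r2_pow)
```

The power-law form leaves the smaller residual on these samples, but it also has one more free parameter, and with only five points neither score should be read as strong evidence for one model over the other.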