Our company needs to capture the rendering of a Qt3D scene. For this I created a small example application that illustrates how we use the capture.

On the left-hand side you will find the 3D scene and on the right-hand side there is a QLabel with a QPixmap showing the captured screen.

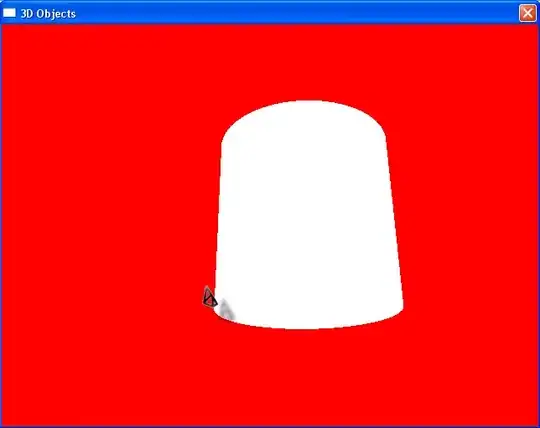

For some reason I don't understand, the captured screenshot looks quite different from the 3D scene on the left-hand side. Even more confusing, the saved PNG image looks different from the QLabel on the right-hand side. For my use case, the PNG is what I actually care about.

The following PNG file is saved:

I already tried inserting the QRenderCapture at different places in the frame graph, but none of them gave reasonable results.

This is the output of frameGraph->dumpObjectTree():

    Qt3DExtras::QForwardRenderer::
    Qt3DRender::QRenderSurfaceSelector::
    Qt3DRender::QViewport::
    Qt3DRender::QCameraSelector::
    Qt3DRender::QClearBuffers::
    Qt3DRender::QFrustumCulling::
    Qt3DRender::QCamera::
    Qt3DRender::QCameraLens::
    Qt3DCore::QTransform::
    Qt3DRender::QRenderCapture:: // Insertion of QRenderCapture
    Qt3DRender::QFilterKey::

It seems that I capture the 3D scene somewhere in the middle of rendering, so there might be a timing issue. (Maybe using a QEventLoop is not admissible in this use case, but I really don't know why.)

The documentation of QRenderCapture implies that QRenderCapture should be the last leaf node of the frame graph, and as far as I can tell that is what I did.

From QRenderCapture documentation:

The QRenderCapture is used to capture rendering into an image at any render stage. Capturing must be initiated by the user and one image is returned per capture request. User can issue multiple render capture requests simultaneously, but only one request is served per QRenderCapture instance per frame.

And also:

Used to request render capture. Only one render capture result is produced per requestCapture call even if the frame graph has multiple leaf nodes. The function returns a QRenderCaptureReply object, which receives the captured image when it is done. The user is responsible for deallocating the returned object.

Can someone here help me out?

    #include <QApplication>
    #include <QEventLoop>
    #include <QFrame>
    #include <QHBoxLayout>
    #include <QLabel>
    #include <QPushButton>
    #include <Qt3DCore/QEntity>
    #include <Qt3DRender/QRenderCapture>
    #include <Qt3DRender/QCamera>
    #include <Qt3DExtras/QSphereMesh>
    #include <Qt3DExtras/QDiffuseSpecularMaterial>
    #include <Qt3DExtras/QForwardRenderer>
    #include <Qt3DExtras/Qt3DWindow>

    // Creates a semi-transparent red sphere entity.
    Qt3DCore::QEntity* transparentSphereEntity() {
        auto entity = new Qt3DCore::QEntity;
        auto meshMaterial = new Qt3DExtras::QDiffuseSpecularMaterial();
        meshMaterial->setAlphaBlendingEnabled(true);
        meshMaterial->setDiffuse(QColor(255, 0, 0, 50));
        auto mesh = new Qt3DExtras::QSphereMesh();
        mesh->setRadius(1.0);
        entity->addComponent(mesh);
        entity->addComponent(meshMaterial);
        return entity;
    }

    int main(int argc, char* argv[])
    {
        QApplication a(argc, argv);
        auto frame = new QFrame;
        auto view = new Qt3DExtras::Qt3DWindow();

        // Camera setup
        auto camera = new Qt3DRender::QCamera;
        camera->lens()->setPerspectiveProjection(45.0f, 1., 0.1f, 10000.0f);
        camera->setPosition(QVector3D(0, 0, 10));
        camera->setUpVector(QVector3D(0, 1, 0));
        camera->setViewCenter(QVector3D(0, 0, 0));
        view->defaultFrameGraph()->setCamera(camera);

        // Insert the QRenderCapture and dump the tree before and after
        auto frameGraph = view->defaultFrameGraph();
        frameGraph->dumpObjectTree();
        auto camSelector = frameGraph->findChild<Qt3DRender::QCamera*>();
        auto renderCapture = new Qt3DRender::QRenderCapture(camSelector);
        frameGraph->dumpObjectTree();

        // Scene
        auto rootEntity = new Qt3DCore::QEntity();
        view->setRootEntity(rootEntity);
        auto sphere = transparentSphereEntity();
        sphere->setParent(rootEntity);

        // UI: 3D view, screenshot button, label showing the capture
        auto btnScreenshot = new QPushButton("Take Screenshot");
        auto labelPixmap = new QLabel;
        frame->setLayout(new QHBoxLayout);
        frame->layout()->addWidget(QWidget::createWindowContainer(view));
        frame->layout()->addWidget(btnScreenshot);
        frame->layout()->addWidget(labelPixmap);
        frame->setMinimumSize(1000, 1000 / 3);
        frame->show();

        QObject::connect(btnScreenshot, &QPushButton::clicked, [&]() {
            // Block in a local event loop until the capture has completed
            QEventLoop loop;
            auto reply = renderCapture->requestCapture();
            QObject::connect(reply, &Qt3DRender::QRenderCaptureReply::completed, [&] {
                reply->image().save("./data/test.png");
                labelPixmap->setPixmap(QPixmap::fromImage(reply->image()));
                loop.quit();
            });
            loop.exec();
        });

        return a.exec();
    }