I am working on a neural network in TensorFlow. The network performs multilabel classification to predict which users bid on which cars. I have a CSV with three columns: User IDs, Highest bid, and Make names (car models); I only use User IDs and Make names. User IDs are the input and Make names are the labels, and there are 86 unique Make names. My problem is that when I train the model on this dataset, the loss decreases very slowly and the accuracy barely changes at all.
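One thing worth checking in a setup like this: because the same user can bid on several makes, a model that outputs a single make per User ID can at best predict each user's most frequent make. Below is a minimal standard-library sketch of how that accuracy ceiling could be estimated from (user, make) rows. The function name `single_label_accuracy_ceiling` and the toy rows are illustrative assumptions, not taken from the real data.

```python
from collections import Counter, defaultdict


def single_label_accuracy_ceiling(pairs):
    """Best accuracy reachable when exactly one make is predicted per user.

    `pairs` is an iterable of (user_id, make_label) rows.
    """
    makes_per_user = defaultdict(Counter)
    total = 0
    for user_id, make in pairs:
        makes_per_user[user_id][make] += 1
        total += 1
    if total == 0:
        raise ValueError("no rows given")
    # For each user, the best single guess is their most frequent make.
    best_hits = sum(max(counts.values()) for counts in makes_per_user.values())
    return best_hits / total


# Toy rows (hypothetical): user 0.1 bids on three different makes.
toy_pairs = [(0.1, 3), (0.1, 7), (0.1, 3), (0.2, 5), (0.2, 5), (0.1, 9)]
ceiling = single_label_accuracy_ceiling(toy_pairs)
```

If the ceiling on the real rows turns out to be low, a flat accuracy curve may say more about the problem framing than about the layer sizes.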

I have tried changing the number of layers, as well as the number of neurons in each hidden layer, but neither made a difference.

    from __future__ import absolute_import, division, print_function, unicode_literals

    import os

    import matplotlib.pyplot as plt
    import tensorflow as tf
    import pandas as pd
    import numpy as np

    #
    # READ FILE AND SET FEATURE & LABEL
    #
    raw_train_data = pd.read_csv("data2016NEW.csv", usecols=["USERID"])
    #raw_test_data = pd.read_csv("data2017.csv", usecols=["USERID"])

    train_target = pd.read_csv("data2016NEW.csv", usecols=["MAKENAME"])
    #test_target = pd.read_csv("data2017.csv", usecols=["MAKENAME"])

    train_dataset = tf.data.Dataset.from_tensor_slices((raw_train_data.values, train_target.values))
    #test_dataset = tf.data.Dataset.from_tensor_slices((raw_test_data.values, test_target.values))

    train_dataset_shuffled_batched = train_dataset.shuffle(len(raw_train_data)).batch(512)
    #test_dataset_shuffled_batched = test_dataset.shuffle(len(raw_test_data)).batch(512)

    def get_compiled_model():
        model = tf.keras.Sequential([
            #input layer
            tf.keras.layers.Dropout(0.2, input_shape=raw_train_data.shape[1:]),
            #hidden layers
            tf.keras.layers.Dense(360, activation='relu'),
            tf.keras.layers.Dense(360, activation='relu'),
            tf.keras.layers.Dense(360, activation='relu'),
            tf.keras.layers.Dense(360, activation='relu'),
            tf.keras.layers.Dense(360, activation='relu'),
            tf.keras.layers.Dense(360, activation='relu'),
            #output layer
            tf.keras.layers.Dense(90, activation='softmax')
        ])

        model.compile(
            optimizer=tf.keras.optimizers.Adam(learning_rate=0.01),
            loss=tf.keras.losses.sparse_categorical_crossentropy,
            metrics=['accuracy']
        )

        return model

    model = get_compiled_model()
    model.summary()

    history = model.fit(train_dataset_shuffled_batched, epochs=1000, verbose=2)

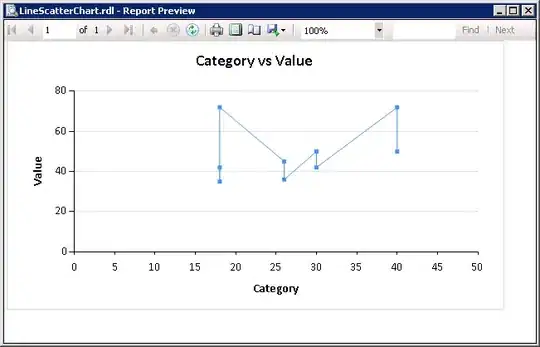

Here is an image that shows a small sample of my data (my dataset is around 160000 rows):

As you can see, User IDs are normalized between 0 and 1, while the label Make name are integers.
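With integer labels like these, two quick checks may help interpret the training numbers: whether every label fits the output layer (sparse categorical cross-entropy expects labels in `[0, num_outputs)`), and what accuracy a constant majority-class guess would already reach. Here is a rough standard-library sketch. `summarize_labels` and the toy labels are made up for illustration; 90 is the output size used in the model code above.

```python
from collections import Counter


def summarize_labels(labels, num_outputs):
    """Return (labels_fit, majority_baseline) for integer class labels."""
    labels = list(labels)
    if not labels:
        raise ValueError("no labels given")
    # True only if every label is a valid index into the output layer.
    labels_fit = all(0 <= label < num_outputs for label in labels)
    # Accuracy obtained by always predicting the most frequent label.
    most_common_count = Counter(labels).most_common(1)[0][1]
    return labels_fit, most_common_count / len(labels)


# Toy labels (hypothetical), standing in for the MAKENAME column.
toy_labels = [4, 4, 4, 12, 85, 4, 30, 12]
labels_fit, baseline = summarize_labels(toy_labels, num_outputs=90)
```

If the reported training accuracy sits at roughly the majority baseline, that would suggest the network is predicting the same make for nearly every user.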

Appreciate all the help, cheers!