I'm trying to use ArUco marker detection in OpenCV to calculate the angle of a marker relative to the camera's plane.

What matters to me is the angle by which the marker is rotated relative to the camera's X-Y plane.

EDIT: I replaced the pictures with ones produced by my code, showing the marker axes and the calculated angle.

Picture A:

Picture B:

Picture C:

Picture D:

Picture A has the marker in front of the camera and facing it.

Picture B has the marker offset from the center of the camera's view, but still parallel to the camera's plane (so the yaw angle should be 0 degrees).

Picture C has the marker rotated by 45 degrees, directly in front of the camera.

Picture D has the marker rotated by 45 degrees and offset from the center of the camera's view.

The final calculation should yield a yaw angle of 0 degrees in pictures A and B, and 45 degrees in pictures C and D.
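One way to state that requirement in code, as a sketch under assumptions rather than a verified fix: read the yaw off the marker's normal (the third column of `rmat`) instead of off the line of sight, so an off-centre marker whose face is parallel to the image plane (picture B) still gives 0 degrees. It assumes OpenCV's usual axes (camera X right, Y down, Z forward; marker Z pointing out of the marker face) and that "yaw" means rotation about the camera's Y axis. `yaw_from_rotation` and `turned_marker` are hypothetical helpers, and the sign of the result depends on those assumed conventions.

```python
import math


def yaw_from_rotation(rmat):
    """Yaw in degrees of a marker about the camera Y axis (assumed convention).

    rmat is a 3x3 marker-to-camera rotation, e.g. the output of cv2.Rodrigues.
    Its third column is the marker normal in camera coordinates. The
    translation is not used, so an off-centre marker whose face is parallel
    to the image plane still yields 0.
    """
    nx, nz = rmat[0][2], rmat[2][2]
    # A marker squarely facing the camera has normal (0, 0, -1), hence the minus signs.
    return math.degrees(math.atan2(-nx, -nz))


def turned_marker(yaw_deg):
    """Hypothetical rotation of a marker that faces the camera and is then
    turned by yaw_deg about the camera Y axis (assumed axis conventions)."""
    t = math.radians(yaw_deg)
    c, s = math.cos(t), math.sin(t)
    return [[c, 0.0, -s],
            [0.0, -1.0, 0.0],
            [-s, 0.0, -c]]


print(yaw_from_rotation(turned_marker(0)))   # the situation in pictures A and B
print(yaw_from_rotation(turned_marker(45)))  # the situation in pictures C and D
```

With real detections, `yaw_from_rotation(rmat)` would be called on the matrix returned by the `cv2.Rodrigues` call in the code below; if only the magnitude matters, `abs()` sidesteps the sign convention.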

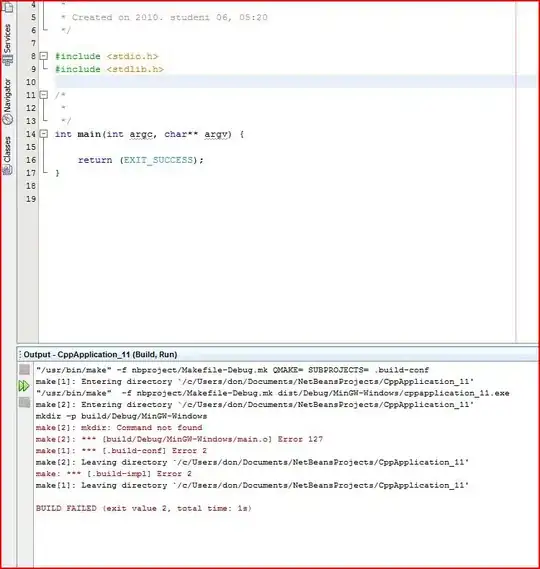

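For that purpose, I tried the following code:

```python
import math

import cv2
from cv2 import aruco

# gray, aruco_dict, cameraMatrix and distCoeffs are prepared earlier
corners, ids, rejectedImgPoints = aruco.detectMarkers(gray, aruco_dict)
# 0.04 is the marker side length, in the same unit as the returned tvec
rvec, tvec, _ = aruco.estimatePoseSingleMarkers(corners, 0.04, cameraMatrix, distCoeffs)
# rvec holds one rotation vector per marker; convert the first one to a 3x3 matrix
rmat, jacobian = cv2.Rodrigues(rvec[0])
world_angle = math.degrees(math.acos(rmat[0][0]))
```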
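To see what `acos(rmat[0][0])` actually measures, here is a check with synthetic rotation matrices instead of real detections. `rmat[0][0]` is the camera-X component of the marker's X axis, so it also shrinks when the marker spins within its own plane, and `acos` cannot tell +θ from −θ. `marker_rotation` is a hypothetical helper built on the same assumed axis conventions (camera X right, Y down, Z forward; marker Z out of the marker face); this illustrates one possible contributor, not a diagnosis of the measurements.

```python
import math


def matmul(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def marker_rotation(yaw_deg, roll_deg):
    """Hypothetical marker-to-camera rotation: a marker facing the camera,
    turned by yaw_deg about the camera Y axis and spun by roll_deg about
    its own normal (assumed OpenCV-style axes)."""
    y, r = math.radians(yaw_deg), math.radians(roll_deg)
    turn = [[math.cos(y), 0.0, math.sin(y)],
            [0.0, 1.0, 0.0],
            [-math.sin(y), 0.0, math.cos(y)]]
    facing = [[1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, -1.0]]
    spin = [[math.cos(r), -math.sin(r), 0.0],
            [math.sin(r), math.cos(r), 0.0],
            [0.0, 0.0, 1.0]]
    return matmul(matmul(turn, facing), spin)


def acos_angle(rmat):
    """The formula from the question, using math.degrees instead of * 57.296."""
    return math.degrees(math.acos(rmat[0][0]))


# Zero yaw but 20 degrees of in-plane rotation: the formula reports about 20.
print(acos_angle(marker_rotation(0, 20)))
# +45 and -45 degrees of yaw give the same result: the sign is lost.
print(acos_angle(marker_rotation(45, 0)), acos_angle(marker_rotation(-45, 0)))
```

For pure yaw with no in-plane spin, the formula does return the yaw magnitude; it only diverges once the marker is also rotated about its own normal.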

I seem to get the wrong angle. When I recreate the scenes in pictures A and B, I get an angle of 20 degrees, and it stays wrong up to a yaw of around 30 degrees. For yaw angles above 45 degrees, however, I get the correct answer.
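A quick numeric check, independent of ArUco, of why the same error in `rmat[0][0]` costs far more degrees near 0 than near 45: the slope of `acos(x)` is −1/√(1−x²), which grows without bound as x approaches 1. The error of 0.06 below is an arbitrary illustrative value, not a measured one, and `angle_error` is a hypothetical helper.

```python
import math


def angle_error(true_deg, error_in_r00):
    """Degrees of error that acos produces when rmat[0][0], ideally
    cos(true angle), is off by error_in_r00 (clamped so acos stays defined)."""
    x = math.cos(math.radians(true_deg)) - error_in_r00
    x = max(-1.0, min(1.0, x))
    return math.degrees(math.acos(x)) - true_deg


for true_deg in (0, 10, 30, 45, 60):
    print(true_deg, round(angle_error(true_deg, 0.06), 1))
```

With this error size the result near 0 degrees is off by roughly 20 degrees, while near 45 degrees it is off by under 5, which matches the shape of the behaviour described above without proving it is the cause.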

Am I doing the calculation correctly, or is there a bug in the code? Thanks.