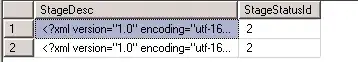

I allocated resource to 1 pod only with 650MB/30% of memory (with other built-in pods, limit memory is 69% only)

However, when the pod handling process, the usage of pod is within 650MB but overall usage of node is 94%.

Why does it happen because it supposed to have upper limit of 69%? Is it due to other built-in pods which did not set limit? How to prevent this as sometimes my pod with error if usage of Memory > 100%?

My allocation setting (kubectl describe nodes):

Memory usage of Kubernetes Node and Pod when idle:

kubectl top nodes

kubectl top pods

Memory usage of Kubernetes Node and Pod when running task:

kubectl top nodes

kubectl top pods

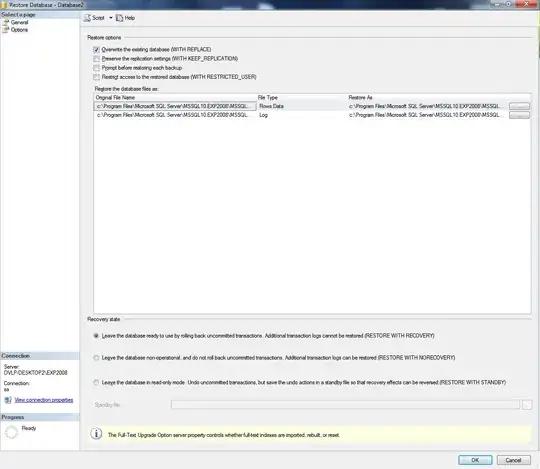

Further Tested behaviour:

1. Prepare deployment, pods and service under namespace test-ns

2. Since only kube-system and test-ns have pods, so assign 1000Mi to each of them (from kubectl describe nodes) aimed to less than 2GB

3. Suppose memory used in kube-system and test-ns will be less than 2GB which is less than 100%, why memory usage can be 106%?

In .yaml file:

apiVersion: v1

kind: LimitRange

metadata:

name: default-mem-limit

namespace: test-ns

spec:

limits:

- default:

memory: 1000Mi

type: Container

---

apiVersion: v1

kind: LimitRange

metadata:

name: default-mem-limit

namespace: kube-system

spec:

limits:

- default:

memory: 1000Mi

type: Container

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: devops-deployment

namespace: test-ns

labels:

app: devops-pdf

spec:

selector:

matchLabels:

app: devops-pdf

replicas: 2

template:

metadata:

labels:

app: devops-pdf

spec:

containers:

- name: devops-pdf

image: dev.azurecr.io/devops-pdf:latest

imagePullPolicy: Always

ports:

- containerPort: 3000

resources:

requests:

cpu: 600m

memory: 500Mi

limits:

cpu: 600m

memory: 500Mi

imagePullSecrets:

- name: regcred

---

apiVersion: v1

kind: Service

metadata:

name: devops-pdf

namespace: test-ns

spec:

type: LoadBalancer

ports:

- port: 8007

selector:

app: devops-pdf