I ran multiple imputation with Amelia using the following code:

    # nominal (binary) variables
    binary <- c("Gender", "Diabetes")

    # ID variable kept in the data but excluded from the imputation model
    exclude.from.IMPUTATION <- c("Serial.ID")

    # skewed (non-parametric) variables, log-transformed during imputation
    NPvars <- c("age", "HDEF", "BMI")

    a.out <- Amelia::amelia(x = for.imp.data, m = 10,
                            idvars = exclude.from.IMPUTATION,
                            noms = binary, logs = NPvars)

    summary(a.out)

    ## save imputed datasets ##
    Amelia::write.amelia(obj = a.out, file.stem = "impdata", format = "csv")

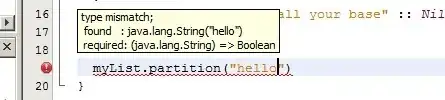

This gave me 10 different output CSV files (shown in the picture above).

I know from prior questions that I can use any one of them for descriptive analysis, but why should we do multiple imputation at all if we end up using only a single file?

Some authors report using Rubin's rules to pool results across imputations, as shown here. Please advise on how to do that.
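For reference, here is a minimal base-R sketch of what I understand Rubin's rules to do for a single estimate: average the per-dataset estimates, then combine the average within-imputation variance with the between-imputation variance. The function name `pool_rubin`, its argument names, and the toy numbers are hypothetical and are not taken from my data or from Amelia.

```r
# Pool one scalar estimate across m imputed datasets with Rubin's rules.
# `estimates` and `std_errors` are hypothetical inputs: one value per
# imputed dataset, all from the same analysis model.
pool_rubin <- function(estimates, std_errors) {
  m     <- length(estimates)
  q_bar <- mean(estimates)            # pooled point estimate
  w_bar <- mean(std_errors^2)         # average within-imputation variance
  b     <- var(estimates)             # between-imputation variance
  t_var <- w_bar + (1 + 1 / m) * b    # total variance
  list(estimate = q_bar, se = sqrt(t_var), within = w_bar, between = b)
}

# Toy numbers for illustration only (not real results)
est <- c(1.0, 1.2, 0.8, 1.1, 0.9)
se  <- c(0.5, 0.5, 0.5, 0.5, 0.5)

pooled <- pool_rubin(est, se)
print(pooled)
```

If this is right, the pooled standard error is larger than any single dataset's standard error whenever the estimates differ across imputations, which would be the extra uncertainty that a single file ignores. With my object, I assume the per-dataset estimates would come from fitting the same model to each element of `a.out$imputations`; Amelia also appears to provide `mi.meld()` for this kind of pooling.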