We are making use of Azure Functions (v2) extensively to fulfill a number of business requirements.

We recently introduced a durable function to handle a more complex business process that includes both a fan-out and a chain of functions.

Our problem is how heavily the storage account is being used. On Friday I made a fresh deployment to an account we use for dev testing and left the function idle over the weekend to monitor what happens. I also set a budget to alert me if the cost started shooting up.

Less than 48 hours later, I received an alert that I was at 80% of my budget, and saw that the storage account was single-handedly responsible for the entire bill. The most baffling part is that it's mostly egress and ingress on file storage, which I'm not using at all in the application! So it must be something internal to the Azure Functions implementation. I've dug around and found this. In that case the issue seems to have been solved by switching to an App Service plan, but this is not an option for us; we must stick with the Consumption plan. I also double-checked and made sure that I don't have the AzureWebJobsDashboard setting.

Any ideas what we can try next?

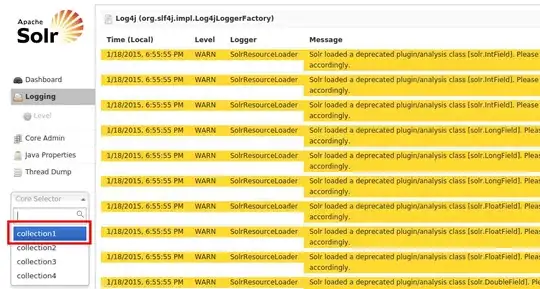

The below are some interesting charts from the storage account. Note how file egress and ingress makes up most of the activity on the entire account.

A ticket for this issue has also been opened on GitHub.