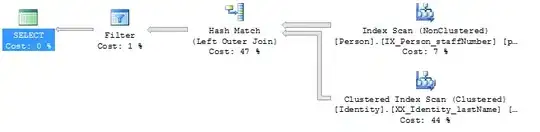

I'm rendering a geometry in WebGL and am getting different results on Chrome for Android (unwanted artifacts, left) and Chrome for Windows (right):

I've tried:

- using both WebGL2 and WebGL 1 contexts
- using both `gl.UNSIGNED_INT` and `gl.UNSIGNED_SHORT` for the index buffer
- rounding all attribute values to four decimal places
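For reference, the rounding step from the last bullet might look something like the sketch below. `roundTo4` is a hypothetical helper name, not taken from the original code. Note that the rounded values are still stored as 32-bit floats, so most of them (0.1, for example) are not exactly representable after upload anyway.

```javascript
// Hypothetical helper: round every attribute value to four decimal places
// before uploading it. The result is still a Float32Array, so each value is
// stored as the nearest 32-bit float rather than the exact decimal.
function roundTo4(values) {
  const out = new Float32Array(values.length);
  for (let i = 0; i < values.length; i++) {
    out[i] = Math.round(values[i] * 10000) / 10000;
  }
  return out;
}

const rounded = roundTo4([0.123456, -1.23456, 2.5]);
console.log(Array.from(rounded));
```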

Here's some of the code:

I've "dumbed down" my vertex shader to narrow down the issue:

```glsl
#version 100
precision mediump float;

attribute vec3 aPosition;
attribute vec3 aColor;
attribute vec3 aNormal;

varying vec3 vColor;
varying vec3 vNormal;
varying vec3 vPosition;
varying mat4 vView;

uniform mat4 uWorld;
uniform mat4 uView;
uniform mat4 uProjection;
uniform mat3 uNormal;
uniform float uTime;

void main() {
    vColor = aColor;
    vNormal = uNormal * aNormal;
    vPosition = (uWorld * vec4(aPosition, 1.0)).xyz;
    gl_Position = uProjection * uView * uWorld * vec4(aPosition, 1.0);
}
```
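To check whether the vertex stage itself produces odd positions, one option is to compute the same transform on the CPU and compare the resulting clip-space coordinates. This is a minimal sketch, assuming the matrices are stored column-major as 16 numbers (the layout `gl.uniformMatrix4fv` expects with `transpose = false`); `mulMat4Vec4` and `clipPosition` are hypothetical helpers, not part of the original code.

```javascript
// Multiply a column-major 4x4 matrix (16 numbers) by a vec4.
// Element (row, col) lives at index col * 4 + row.
function mulMat4Vec4(m, v) {
  const out = [0, 0, 0, 0];
  for (let row = 0; row < 4; row++) {
    out[row] =
      m[0 * 4 + row] * v[0] +
      m[1 * 4 + row] * v[1] +
      m[2 * 4 + row] * v[2] +
      m[3 * 4 + row] * v[3];
  }
  return out;
}

// CPU mirror of: gl_Position = uProjection * uView * uWorld * vec4(aPosition, 1.0)
// Applied right to left: world first, then view, then projection.
function clipPosition(projection, view, world, position) {
  const p = [position[0], position[1], position[2], 1.0];
  return mulMat4Vec4(projection, mulMat4Vec4(view, mulMat4Vec4(world, p)));
}

// Example: identity view/projection and a world matrix translating by (1, 2, 3).
const identity = [1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1];
const translate = [1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 1, 2, 3, 1];
console.log(clipPosition(identity, identity, translate, [0.5, 0.5, 0.5]));
```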

I'm passing the attributes via an interleaved buffer (all values rounded to four decimal places):

```javascript
gl.bindBuffer(gl.ARRAY_BUFFER, this.interleaved.buffer)

const bytesPerElement = 4            // size of one gl.FLOAT
const stride = bytesPerElement * 9   // 3 position + 3 normal + 3 color floats per vertex

// `normalized` is a plain boolean; WebGL has no gl.FALSE constant
// (it evaluates to undefined, which only happens to behave like false).
gl.vertexAttribPointer(this.interleaved.attribLocation.position, 3, gl.FLOAT, false, stride, bytesPerElement * 0)
gl.vertexAttribPointer(this.interleaved.attribLocation.normal, 3, gl.FLOAT, false, stride, bytesPerElement * 3)
gl.vertexAttribPointer(this.interleaved.attribLocation.color, 3, gl.FLOAT, false, stride, bytesPerElement * 6)
```
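The `vertexAttribPointer` calls above assume each vertex occupies 9 floats (36 bytes): position at byte offset 0, normal at 12 and color at 24. A sketch of packing separate arrays into that layout follows; `interleave` is a hypothetical helper, and the inputs are assumed to hold exactly 3 floats per vertex each.

```javascript
const FLOATS_PER_VERTEX = 9;                            // 3 position + 3 normal + 3 color
const BYTES_PER_FLOAT = Float32Array.BYTES_PER_ELEMENT; // 4
const STRIDE = FLOATS_PER_VERTEX * BYTES_PER_FLOAT;     // 36 bytes

// Pack per-vertex position, normal and color (3 floats each) into one
// interleaved Float32Array matching byte offsets 0, 12 and 24.
function interleave(positions, normals, colors) {
  if (normals.length !== positions.length || colors.length !== positions.length) {
    throw new Error("attribute arrays must have the same vertex count");
  }
  const vertexCount = positions.length / 3;
  const out = new Float32Array(vertexCount * FLOATS_PER_VERTEX);
  for (let v = 0; v < vertexCount; v++) {
    for (let c = 0; c < 3; c++) {
      out[v * FLOATS_PER_VERTEX + 0 + c] = positions[v * 3 + c];
      out[v * FLOATS_PER_VERTEX + 3 + c] = normals[v * 3 + c];
      out[v * FLOATS_PER_VERTEX + 6 + c] = colors[v * 3 + c];
    }
  }
  return out;
}

// Two vertices: positions, normals, colors.
const data = interleave([0, 1, 2, 3, 4, 5], [0, 0, 1, 0, 0, 1], [1, 0, 0, 0, 1, 0]);
// Upload with a real context: gl.bufferData(gl.ARRAY_BUFFER, data, gl.STATIC_DRAW)
```

Keeping the layout constants in one place makes it harder for the packing code and the `vertexAttribPointer` offsets to drift apart.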

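I'm using an index buffer to draw the geometry:

```javascript
// With gl.UNSIGNED_INT the bound index buffer must hold a Uint32Array;
// in a WebGL 1 context this also requires the OES_element_index_uint extension.
gl.drawElements(gl.TRIANGLES, this.indices.length, gl.UNSIGNED_INT, 0)
```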

The indices range from 0 to 3599, so `gl.UNSIGNED_INT` is more than large enough (`gl.UNSIGNED_SHORT`, which goes up to 65535, would suffice as well).
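A sketch of choosing the index array type from the largest index actually present. `pickIndexType` is a hypothetical helper; it returns the constant's name (`"UNSIGNED_SHORT"` or `"UNSIGNED_INT"`) rather than its numeric value so that it runs without a WebGL context. The point it illustrates is that the typed array and the type passed to `drawElements` must agree: `Uint16Array` for `UNSIGNED_SHORT`, `Uint32Array` for `UNSIGNED_INT`.

```javascript
// Choose the smallest common index type (16 or 32 bit) that can hold every index.
function pickIndexType(indices) {
  let maxIndex = 0;
  for (const i of indices) {
    if (i > maxIndex) maxIndex = i;
  }
  if (maxIndex <= 0xffff) {
    return { type: "UNSIGNED_SHORT", data: Uint16Array.from(indices) };
  }
  return { type: "UNSIGNED_INT", data: Uint32Array.from(indices) };
}

// Usage with a real context (variable names are illustrative):
//   const { type, data } = pickIndexType(indices);
//   gl.bindBuffer(gl.ELEMENT_ARRAY_BUFFER, indexBuffer);
//   gl.bufferData(gl.ELEMENT_ARRAY_BUFFER, data, gl.STATIC_DRAW);
//   gl.drawElements(gl.TRIANGLES, data.length, gl[type], 0);
```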

I'm not getting any error messages. On Windows everything renders fine; only Chrome on Android shows the artifacts.