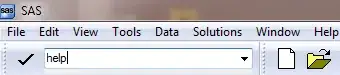

Consider following data:

EventDate,Value

1.1.2019,11

1.2.2019,5

1.3.2019,6

1.4.2019,-15

1.5.2019,-20

1.6.2019,-30

1.7.2019,12

1.8.2019,20

I want to create groups of when these values are within thresholds:

1. > 10

2. <=10 >=-10

3. >-10

The result should be with start and end of values in a certain state:

1.1.2019, 1.1.2019, [11]

1.2.2019, 1.3.2019, [5, 6]

1.4.2019, 1.6.2019, [-15, -20, -30]

1.7.2019, 1.8.2018, [12, 20]

I believe the answer is within the window function, but I am fairly new to databricks and I can't understand how to use it (yet).

Here is a working (python) solution based on looping through the dataframe as a list, however I would prefer a solution that works directly on the dataframe for performance.

from pyspark.sql.functions import *

import pandas as pd

STATETHRESHOLDCHARGE = 10

list = [{"eventDateTime":x["EventDate"], "value":x["Value"]} for x in dataframe.sort(dfArrayOneCast.EventDate).rdd.collect()]

cycles = []

previous = None

for row in list:

currentState = 'charge'

if row["value"] < STATETHRESHOLDCHARGE and row["value"] > (STATETHRESHOLDCHARGE * -1):

currentState = 'idle'

if row["value"] <= (STATETHRESHOLDCHARGE * -1):

currentState = 'discharge'

eventDateTime = row["eventDateTime"]

if previous is None or previous["state"] != currentState:

previous = {"start":row["eventDateTime"], "end":row["eventDateTime"], "values":[row["value"]], "timestamps":[row["eventDateTime"]], "state":currentState}

cycles.append(previous)

else:

previous["end"] = row["eventDateTime"]

previous["values"].append(row["value"])

previous["timestamps"].append(row["eventDateTime"])

display(cycles)