I am working on a program that minimizes an objective function obj over the scalar beta. The true global minimum is set at beta0=1.

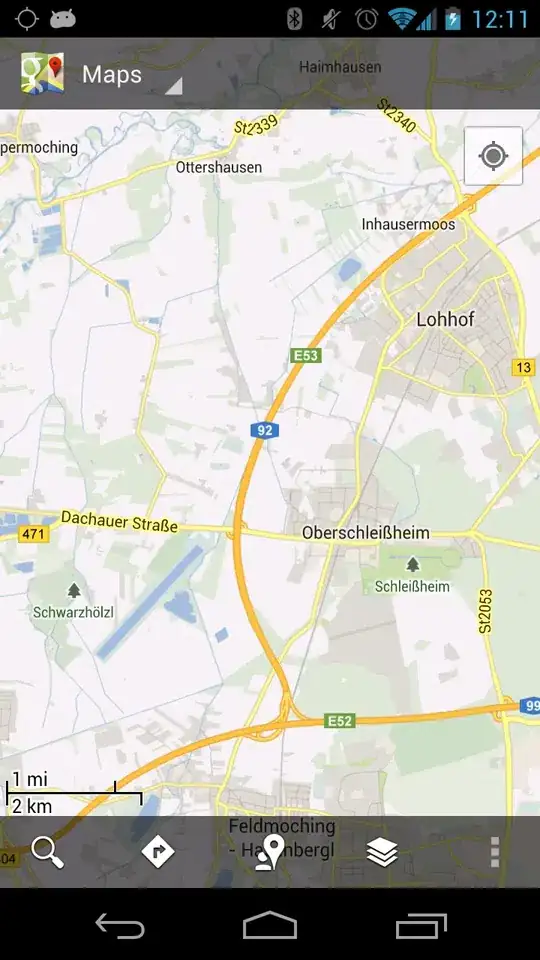

In the MWE below, obj is constructed as the sum of the 100-R (here R=3) smallest eigenvalues of the 100x100 symmetric matrix u'*u. Around the true global minimum obj "looks good", but when I evaluate the objective function at much larger values of beta it becomes very unstable: as the figure shows (or as you can see by running the MWE), multiple local minima (and maxima) appear, some with values of obj(beta) smaller than the value at the true global minimum.

My guess is that there is some sort of "numerical instability" going on, but I am unable to find the source.

%Matrix dimensions

N=100;

T=100;

%Reproducibility

rng('default');

%True global minimum

beta0=1;

%Generating data

l=1+randn(N,2);

s=randn(T+1,2);

la=1+randn(N,2);

X(1,:,:)=1+(3*l+la)*(3*s(1:T,:)+s(2:T+1,:))';

s=s(1:T,:);

a=(randn(N,T));

Y=beta0*squeeze(X(1,:,:))+l*s'+a;

%Give "beta" a large value

beta=1e6;

%Compute objective function

u=Y-beta*squeeze(X(1,:,:));

ev=sort(eig(u'*u)); % sort eigenvalues

obj=sum(ev(1:100-3))/(N*T); % "obj" is sum of 97 smallest eigenvalues

This evaluates the objective function at beta=1e6. I have noticed that some of the eigenvalues returned by eig(u'*u) are negative (see the variable ev), even though by construction the matrix u'*u is positive semidefinite.
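One way to judge whether those negative entries of ev are at rounding-error scale is to compare them with the largest eigenvalue times machine epsilon. The sketch below regenerates the MWE data so that it runs on its own; X is stored as a plain N-by-T matrix instead of a 3-D array, and the names G and tol, as well as the factor T in tol, are assumptions introduced here for illustration, not a sharp error bound.

```matlab
% Sketch: regenerate the MWE data, then compare the negative eigenvalues
% with a rough rounding-error scale for the Gram matrix u'*u.
rng('default');
N = 100; T = 100; beta0 = 1;

l  = 1 + randn(N,2);
s  = randn(T+1,2);
la = 1 + randn(N,2);
X  = 1 + (3*l + la)*(3*s(1:T,:) + s(2:T+1,:))';  % N-by-T, same values as squeeze(X(1,:,:))
s  = s(1:T,:);
a  = randn(N,T);
Y  = beta0*X + l*s' + a;

beta = 1e6;
u  = Y - beta*X;
G  = u'*u;               % T-by-T Gram matrix
ev = sort(eig(G));       % ascending eigenvalues, as in the MWE

% Rough error scale (an assumption, not a sharp bound): T * eps * ||G||_2
tol = T*eps*norm(G);

fprintf('largest eigenvalue        : %g\n', ev(end));
fprintf('smallest eigenvalue       : %g\n', ev(1));
fprintf('rough rounding-error scale: %g\n', tol);
fprintf('number of negative values : %d\n', nnz(ev < 0));
```

If the smallest eigenvalue is comparable in magnitude to tol, the negative values are consistent with floating-point error in forming and decomposing u'*u, although that alone would not show that they cause the instability.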

I am guessing this may have to do with floating point arithmetic issues and may (partly) be the answer to the instability of my function, but I am not sure.

Finally, this is what the objective function obj looks like when evaluated over a wide range of values of beta:

% Now plot "obj" for a wide range of values of "beta"

clear obj

betaGrid=-5e5:100:5e5;

for i=1:length(betaGrid)

u=Y-betaGrid(i)*squeeze(X(1,:,:));

ev=sort(eig(u'*u));

obj(i)=sum(ev(1:100-3))/(N*T);

end

plot(betaGrid,obj,"*")

xlabel('\beta')

ylabel('obj')

This produces the figure, which shows how unstable obj becomes for extreme values of beta.
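To test the floating-point guess, the same curve can be recomputed from the singular values of u instead of from eig(u'*u). In exact arithmetic the eigenvalues of u'*u are the squared singular values of u, and svd never returns negative values. This is only a sketch: it swaps in svd for the eig call of the MWE, uses a coarser grid to keep the run short, and stores X as an N-by-T matrix; the name objSvd is introduced here for illustration. Whether the instability disappears is something the plot has to show.

```matlab
% Sketch: recompute obj on a grid using squared singular values of u.
rng('default');
N = 100; T = 100; R = 3; beta0 = 1;

l  = 1 + randn(N,2);
s  = randn(T+1,2);
la = 1 + randn(N,2);
X  = 1 + (3*l + la)*(3*s(1:T,:) + s(2:T+1,:))';  % N-by-T regressor
s  = s(1:T,:);
a  = randn(N,T);
Y  = beta0*X + l*s' + a;

betaGrid = -5e5:1e3:5e5;          % coarser than the MWE grid (assumption)
objSvd   = zeros(size(betaGrid)); % preallocate

for i = 1:numel(betaGrid)
    u  = Y - betaGrid(i)*X;
    sv = svd(u);                  % min(N,T) singular values, all nonnegative
    ev = sort(sv.^2);             % ascending; equal to eig(u'*u) in exact arithmetic (N = T here)
    objSvd(i) = sum(ev(1:T-R))/(N*T);
end

plot(betaGrid, objSvd, '*')
xlabel('\beta')
ylabel('obj (via svd)')
```

Comparing this plot with the one from the MWE indicates how much of the erratic behaviour comes from forming u'*u explicitly.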