There are a few things you have to consider:

1 - HDBSCAN is a noise-aware clustering algorithm, so the `-1` labels in the output mark points that it considers outliers and leaves out of every cluster.

From the documentation:

> Importantly HDBSCAN is noise aware – it has a notion of data samples that are not assigned to any cluster. This is handled by assigning these samples the label -1
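To make the `-1` convention concrete, here is a minimal sketch of how the noise points could be separated from the clustered ones once you have the labels. The helper `split_by_label` and the example labels are hypothetical and made up for illustration; they are not actual HDBSCAN output.

```python
from collections import defaultdict

NOISE_LABEL = -1  # label HDBSCAN assigns to samples outside every cluster


def split_by_label(points, labels):
    """Group points by cluster label and return (clusters, noise)."""
    clusters = defaultdict(list)
    noise = []
    for point, label in zip(points, labels):
        if label == NOISE_LABEL:
            noise.append(point)
        else:
            clusters[label].append(point)
    return dict(clusters), noise


# Hypothetical labels, for illustration only (not real HDBSCAN output).
points = [[0, 0], [1, 1], [50, 40], [2, 3]]
labels = [0, 0, -1, 0]

clusters, noise = split_by_label(points, labels)
print(clusters)  # {0: [[0, 0], [1, 1], [2, 3]]}
print(noise)     # [[50, 40]]
```

With a fitted clusterer you would pass `clusterer.labels_` as `labels`.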

2 - The dataset is very small, and the `min_samples` and `min_cluster_size` parameters are not set, so HDBSCAN falls back to its defaults, which require a minimum cluster size of 5. You can check the parameters in use in the output of the `clusterer.fit(distance_matrix)` command:

    HDBSCAN(algorithm='best', allow_single_cluster=False, alpha=1.0,
            approx_min_span_tree=True, cluster_selection_method='eom',
            core_dist_n_jobs=4, gen_min_span_tree=False, leaf_size=40,
            match_reference_implementation=False, memory=Memory(location=None),
            metric='precomputed', min_cluster_size=5, min_samples=None, p=None,
            prediction_data=False)

Please refer to the documentation (Parameter Selection for HDBSCAN) to understand how to configure the algorithm properly.

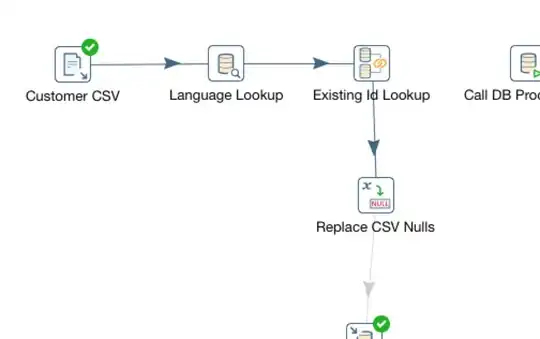

Here is a corrected version of your code, with a plot of the cluster dendrogram:

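    import hdbscan
    from sklearn.metrics import pairwise_distances

    point_coord = [[0, 0], [1, 1], [0, 1], [50, 40], [50, 45], [2, 3], [1, 2]]
    distance_matrix = pairwise_distances(point_coord)

    # Allow clusters as small as two points instead of the default of five
    clusterer = hdbscan.HDBSCAN(metric='precomputed', min_samples=1, min_cluster_size=2)
    clusterer.fit(distance_matrix)
    print(clusterer.labels_)

    # Plot the single-linkage dendrogram
    clusterer.single_linkage_tree_.plot()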

Output: