I found the following useful example at this source and adapted it for your purpose:

select 23 as weeknumber,

date_format(date_sub(from_unixtime(unix_timestamp('2018-12-29','yyyy-MM-dd')+(23*7*24*60*60)),pmod(datediff(from_unixtime(unix_timestamp('2018-12-29','yyyy-MM-dd')+(23*7*24*60*60)),'1900-01-07'),7)),"MMMMM dd,yyyy") as startday,

date_format(date_add(from_unixtime(unix_timestamp('2018-12-29','yyyy-MM-dd')+(23*7*24*60*60)),6 - pmod(datediff(from_unixtime(unix_timestamp('2018-12-29','yyyy-MM-dd')+(23*7*24*60*60)),"1900-01-07"),7)),"MMMMM dd,yyyy") as endday;

So, replace the constant 23 with your week-number column, and '2018-12-29' with the last day of the last week of the previous year that you need.

In a few words, the SQL code does this:

- takes the week number (23) and converts it to seconds: 23 * 7 (days in a week) * 24 hours * 60 minutes * 60 seconds;

- also converts the last day of the last week of the previous year (29 December 2018) to seconds;

- adds the two values and converts the result back to a date;

- from that date, calculates the first day of the week (and the last day; I know that you don't need it, but maybe someone else will).

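Note that in my example the first day of the week is Sunday, not Monday as you expect! This comes from the reference date '1900-01-07', which is a Sunday; using a Monday such as '1900-01-08' as the reference should give Monday-based weeks. The session time zone can also affect the result, because the calculation goes through Unix timestamps.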
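To check the arithmetic outside Hive, here is a minimal Python sketch of the same steps. It is only an illustration: the function name `week_bounds` and its parameters are my own and not part of the query, and it works in whole days rather than seconds, so it ignores any time-zone or daylight-saving effects that `from_unixtime` might introduce.

```python
from datetime import date, timedelta


def week_bounds(week_number, prev_year_end=date(2018, 12, 29),
                anchor=date(1900, 1, 7)):
    """Return (first_day, last_day) of the given week.

    prev_year_end: last day of the last week of the previous year
                   (same role as '2018-12-29' in the query).
    anchor:        reference date that defines the first day of the week
                   (1900-01-07 is a Sunday, as in the query).
    """
    # Same as adding week_number * 7 * 24 * 60 * 60 seconds, but in days.
    target = prev_year_end + timedelta(days=7 * week_number)

    # Equivalent of pmod(datediff(target, anchor), 7):
    # how many days target lies past the start of its week.
    offset = (target - anchor).days % 7

    first_day = target - timedelta(days=offset)
    last_day = target + timedelta(days=6 - offset)
    return first_day, last_day


start, end = week_bounds(23)
print(start.isoformat(), end.isoformat())  # 2019-06-02 2019-06-08
```

Passing a Monday as the reference, for example `week_bounds(23, anchor=date(1900, 1, 8))`, returns Monday-based weeks instead (2019-06-03 to 2019-06-09 for week 23).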

Hope that it's what you need.

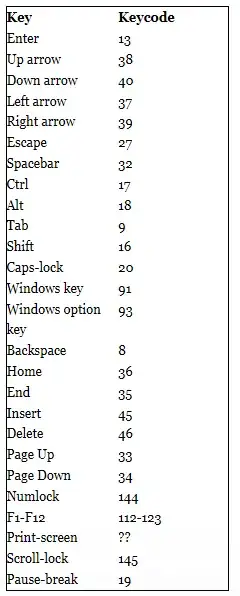

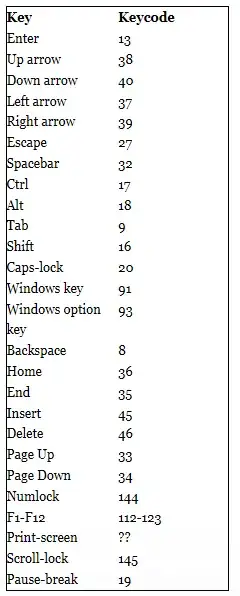

Results: