I'm writing a simple digital simulator for combinatory and sequential circuits and I've chosen a graph approach for modelling this problem.

If the circuit have no loops, I can represent it as a DAG. In this case, I can think of at least two ways to perform a simulation: recursively, going from the outputs backwards or toposorting the graph.

The problem comes when a loop exists as I can't sort nodes anymore, but since a circuit is a directed graph whose edges go from inputs to outputs in only one direction, loops are the only edges "going back".

Hence, with these restrictions, I was looking for a way to perform topological sort "ignoring loops" given the adjacency list.

Here's my idea: if I menage to sort the components regardless of loops, I can simply evaluate loop drivers first and then simulate like if no loops existed. This approach works fine when I solve logic circuits by hand.

In other words, I'd like to somewhat sort the graph pretending that back edges don't exist (hence I have a DAG). Is there a way to do this?

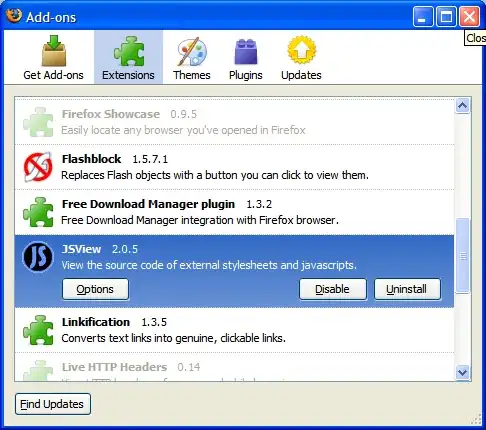

EDIT: take a look at the following picture.

Here's the adjacency list corresponding to that circuit:

Here's the adjacency list corresponding to that circuit:

n -> XNOR

m -> AND

AND -> NOT

NOT -> NAND

NAND -> D-FLIPFLOP

XNOR -> NAND

D-FLIPFLOP-> x, AND, XNOR

x ->

The toposort i'd like to obtain is (for example) this one:

m AND NOT n XNOR NAND D-FLIPFLOP x

ignoring the two loops

D-FLIPFLOP -> AND

D-FLIPFLOP -> XNOR