I have an excel sheet which contains more than 30 sheets for different parameters like BP, Heart rate etc.

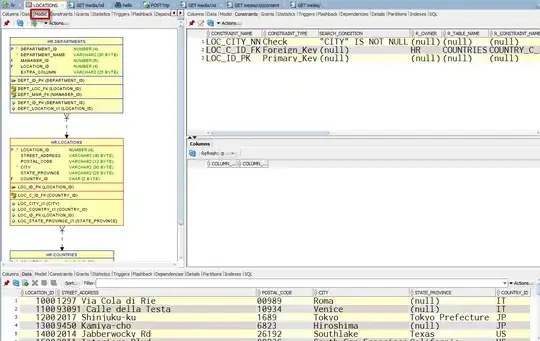

One of the dataframe (df1 - created from one sheet of excel) looks like as shown below

df1= pd.DataFrame({'person_id':[1,1,1,1,2,2,2,2,3,3,3,3,3,3],'level_1': ['H1Date','H1','H2Date','H2','H1Date','H1','H2Date','H2','H1Date','H1','H2Date','H2','H3Date','H3'],

'values': ['2006-10-30 00:00:00','6.6','2006-08-30 00:00:00','4.6','2005-10-30 00:00:00','6.9','2016-11-30 00:00:00','6.6','2006-10-30 00:00:00','6.6','2006-11-30 00:00:00','8.6',

'2106-10-30 00:00:00','16.6']})

Another dataframe (df2) from another sheet of excel file can be generated using the code below

df2= pd.DataFrame({'person_id':[1,1,1,1,2,2,2,2,3,3,3,3,3,3],'level_1': ['GluF1Date','GluF1','GluF2Date','GluF2','GluF1Date','GluF1','GluF2Date','GluF2','GluF1Date','GluF1','GluF2Date','GluF2','GluF3Date','GluF3'],

'values': ['2006-10-30 00:00:00','6.6','2006-08-30 00:00:00','4.6','2005-10-30 00:00:00','6.9','2016-11-30 00:00:00','6.6','2006-10-30 00:00:00','6.6','2006-11-30 00:00:00','8.6',

'2106-10-30 00:00:00','16.6']})

Similarly there are more than 30 dataframes like this with values of the same format (Date & measurement value) but column names (H1, GluF1, H1Date,H100,H100Date, GluF1Date,P1,PDate,UACRDate,UACR100, etc) are different

What I am trying to do based on SO search is as shown below

g = df1.level_1.str[-2:] # Extracting column names

df1['lvl'] = df1.level_1.apply(lambda x: int(''.join(filter(str.isdigit, x)))) # Extracting level's number

df1= df1.pivot_table(index=['person_id', 'lvl'], columns=g, values='values', aggfunc='first')

final = df1.reset_index(level=1).drop(['lvl'], axis=1)

The above code gives an output like this which is not expected

This doesn't work as g doesn't result in same string output (column names) for all records. My code would work if the substring extract has resulted in same output but since the data is like sequence, I am not able to make it uniform

I expect my output to be like as shown below for each dataframe. Please note that a person can have 3 records (H1..H3)/10 records (H1..H10) / 100 records (ex: H1...H100). It is all possible.

updated screenshot