I found a Python library for Laplacian Score feature selection, but its implementation seems to differ from the research paper.

I implemented the selection method according to the algorithm from the paper (https://papers.nips.cc/paper/2909-laplacian-score-for-feature-selection.pdf), which is as follows:

However, I found a Python library that implements the Laplacian method (https://github.com/jundongl/scikit-feature/blob/master/skfeature/function/similarity_based/lap_score.py).

So to check that my implementation was correct, I ran both versions on my dataset, and got different answers. In the process of debugging, I saw that the library used different formulas when calculating the affinity matrix (The S matrix from the paper).

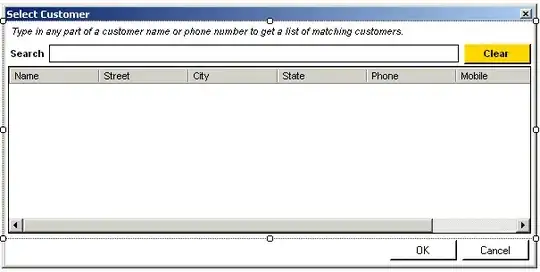

The paper uses this formula:

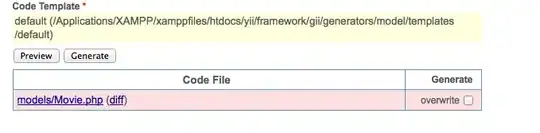

while the library uses:

W_ij = exp(-norm(x_i - x_j)/2t^2)
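One way to probe whether the kernels are equivalent is to compare them numerically on toy data. The sketch below is only an illustration: the function names are hypothetical, the data is random, and it assumes the paper's kernel has the form exp(-||x_i - x_j||^2 / t). It compares that form with the library formula as written above (unsquared norm) and with what the library code appears to compute (squared distance divided by 2t^2).

```python
import numpy as np

def squared_distances(X):
    """Pairwise squared Euclidean distances via broadcasting."""
    diff = X[:, None, :] - X[None, :, :]
    return np.sum(diff ** 2, axis=-1)

def kernel_paper(X, t):
    # Assumed form of the paper's kernel: exp(-||xi - xj||^2 / t)
    return np.exp(-squared_distances(X) / t)

def kernel_library_code(X, t):
    # What the library code appears to compute: exp(-||xi - xj||^2 / (2 t^2))
    return np.exp(-squared_distances(X) / (2 * t * t))

def kernel_library_as_written(X, t):
    # The formula as quoted above, with an unsquared norm
    return np.exp(-np.sqrt(squared_distances(X)) / (2 * t * t))

rng = np.random.default_rng(0)
X = rng.normal(size=(6, 3))  # toy data, purely illustrative
t_lib = 1.5

# Same bandwidth value in both kernels: the matrices differ.
same_t_matches = np.allclose(kernel_library_code(X, t_lib), kernel_paper(X, t_lib))

# Rescaled bandwidth t_paper = 2 * t_lib^2: the matrices coincide.
rescaled_matches = np.allclose(
    kernel_library_code(X, t_lib), kernel_paper(X, 2 * t_lib ** 2)
)

# The quoted formula (unsquared norm) is not what the code computes.
written_matches = np.allclose(
    kernel_library_as_written(X, t_lib), kernel_library_code(X, t_lib)
)

print(same_t_matches, rescaled_matches, written_matches)
```

Under that assumption, the two kernels would differ only in how the bandwidth `t` is parameterized, so passing the same `t` to both implementations would be expected to give different affinity values.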

Further investigation revealed that the library calculates the affinity matrix as follows:

    t = kwargs['t']
    # compute pairwise euclidean distances
    D = pairwise_distances(X)
    D **= 2
    # sort the distance matrix D in ascending order
    dump = np.sort(D, axis=1)
    idx = np.argsort(D, axis=1)
    idx_new = idx[:, 0:k+1]
    dump_new = dump[:, 0:k+1]
    # compute the pairwise heat kernel distances
    dump_heat_kernel = np.exp(-dump_new/(2*t*t))
    G = np.zeros((n_samples*(k+1), 3))
    G[:, 0] = np.tile(np.arange(n_samples), (k+1, 1)).reshape(-1)
    G[:, 1] = np.ravel(idx_new, order='F')
    G[:, 2] = np.ravel(dump_heat_kernel, order='F')
    # build the sparse affinity matrix W
    W = csc_matrix((G[:, 2], (G[:, 0], G[:, 1])), shape=(n_samples, n_samples))
    bigger = np.transpose(W) > W
    W = W - W.multiply(bigger) + np.transpose(W).multiply(bigger)
    return W

I'm not sure why the library squares each value in the distance matrix. It also sorts each row and keeps only the k+1 smallest distances, and it uses a different heat kernel formula from the paper.
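To make the sorting step and the last two lines of the excerpt easier to reason about, here is a dense NumPy re-expression of what I believe they do. This is my own sketch, not the library's code: `knn_affinity` is a hypothetical name, and the four 1-D points are made up. As far as I can tell, the sort keeps each point's k nearest neighbours (plus the point itself, at distance 0), and the `bigger` trick replaces each entry with the larger of `W[i, j]` and `W[j, i]`, which makes the matrix symmetric.

```python
import numpy as np

def knn_affinity(D2, k, t):
    """Dense sketch of the quoted steps; D2 holds squared distances."""
    n = D2.shape[0]
    # Column indices of the k+1 smallest squared distances in each row
    # (the point itself at distance 0, then its k nearest neighbours).
    idx = np.argsort(D2, axis=1)[:, :k + 1]
    # Heat kernel weights for those selected entries only.
    vals = np.exp(-np.take_along_axis(D2, idx, axis=1) / (2 * t * t))
    # Directed kNN graph: row i has non-zeros only at its own neighbours.
    W_directed = np.zeros((n, n))
    np.put_along_axis(W_directed, idx, vals, axis=1)
    # Elementwise maximum with the transpose, which is what the
    # `bigger` lines in the excerpt appear to compute.
    W = np.maximum(W_directed, W_directed.T)
    return W_directed, W

# Four made-up 1-D points, chosen so the kNN graph is not symmetric.
X = np.array([[0.0], [1.0], [3.0], [7.0]])
D2 = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)

W_directed, W = knn_affinity(D2, k=1, t=1.0)

print(np.allclose(W_directed, W_directed.T))  # is the directed graph symmetric?
print(np.allclose(W, W.T))                    # is the final matrix symmetric?
```

In this toy case point 2's nearest neighbour is point 1, but point 1's nearest neighbour is point 0, so the directed graph has an entry at `[2, 1]` and none at `[1, 2]` until the maximum is taken.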

So I'd like to know whether either source (the paper or the library) is wrong, whether the two are somehow equivalent, or, if not, why they differ.