I am working from Apple's sample project on using `ARMatteGenerator` to generate an `MTLTexture` that can be used as an occlusion matte for people occlusion.

I would like to run the generated matte through a `CIFilter`. In my code, I am "filtering" the matte like so:

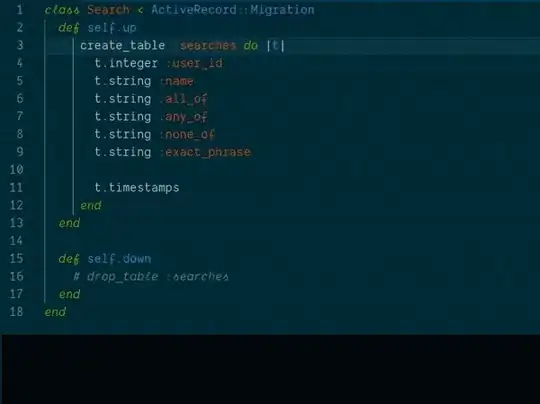

```swift
func updateMatteTextures(commandBuffer: MTLCommandBuffer) {
    guard let currentFrame = session.currentFrame else {
        return
    }

    // Generate the person matte and the dilated depth texture for this frame
    alphaTexture = matteGenerator.generateMatte(from: currentFrame, commandBuffer: commandBuffer)
    dilatedDepthTexture = matteGenerator.generateDilatedDepth(from: currentFrame, commandBuffer: commandBuffer)

    guard let alphaTexture = alphaTexture,
          let inputImage = CIImage(mtlTexture: alphaTexture, options: nil),
          let filter = monoAlphaCIFilter else {
        return
    }

    // Run the matte through the Core Image filter
    filter.setValue(inputImage, forKey: kCIInputImageKey)
    filter.setValue(CIColor.red, forKey: kCIInputColorKey)

    guard let outputImage = filter.outputImage else {
        return
    }

    // Render the filtered result back into the same matte texture
    let drawingBounds = CGRect(origin: .zero, size: CGSize(width: alphaTexture.width, height: alphaTexture.height))
    context.render(outputImage, to: alphaTexture, commandBuffer: commandBuffer, bounds: drawingBounds, colorSpace: CGColorSpaceCreateDeviceRGB())
}
```

However, when I composite the matte texture with the background, no filter effect is visible on the matte. This is how the textures are composited:

```swift
func compositeImagesWithEncoder(renderEncoder: MTLRenderCommandEncoder) {
    guard let textureY = capturedImageTextureY, let textureCbCr = capturedImageTextureCbCr else {
        return
    }

    // Push a debug group allowing us to identify render commands in the GPU Frame Capture tool
    renderEncoder.pushDebugGroup("CompositePass")

    // Set render command encoder state
    renderEncoder.setCullMode(.none)
    renderEncoder.setRenderPipelineState(compositePipelineState)
    renderEncoder.setDepthStencilState(compositeDepthState)

    // Setup plane vertex buffers
    renderEncoder.setVertexBuffer(imagePlaneVertexBuffer, offset: 0, index: 0)
    renderEncoder.setVertexBuffer(scenePlaneVertexBuffer, offset: 0, index: 1)

    // Setup textures for the composite fragment shader
    renderEncoder.setFragmentBuffer(sharedUniformBuffer, offset: sharedUniformBufferOffset, index: Int(kBufferIndexSharedUniforms.rawValue))
    renderEncoder.setFragmentTexture(CVMetalTextureGetTexture(textureY), index: 0)
    renderEncoder.setFragmentTexture(CVMetalTextureGetTexture(textureCbCr), index: 1)
    renderEncoder.setFragmentTexture(sceneColorTexture, index: 2)
    renderEncoder.setFragmentTexture(sceneDepthTexture, index: 3)
    renderEncoder.setFragmentTexture(alphaTexture, index: 4)
    renderEncoder.setFragmentTexture(dilatedDepthTexture, index: 5)

    // Draw final quad to display
    renderEncoder.drawPrimitives(type: .triangleStrip, vertexStart: 0, vertexCount: 4)

    renderEncoder.popDebugGroup()
}
```

How can I apply the `CIFilter` only to the `alphaTexture` generated by `ARMatteGenerator`?
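One variation that may be worth testing: `updateMatteTextures` renders the filter output back into the same `alphaTexture` that the `CIImage` reads from, and it is an assumption (not confirmed here) that the texture returned by `ARMatteGenerator` was created with the usage flags Core Image needs in order to write to it. The sketch below instead renders into a separately allocated texture. The helper name `makeFilteredMatte`, the `.bgra8Unorm` format and the chosen usage flags are illustrative assumptions, not part of Apple's sample.

```swift
import CoreGraphics
import CoreImage
import Metal

/// Hypothetical helper: runs `matte` through `filter` and renders the result into a
/// newly allocated texture, so Core Image does not read and write the same texture.
func makeFilteredMatte(from matte: MTLTexture,
                       filter: CIFilter,
                       context: CIContext,
                       device: MTLDevice,
                       commandBuffer: MTLCommandBuffer) -> MTLTexture? {
    guard let inputImage = CIImage(mtlTexture: matte, options: nil) else { return nil }

    // Other filter parameters (e.g. kCIInputColorKey) are expected to be set by the caller.
    filter.setValue(inputImage, forKey: kCIInputImageKey)
    guard let outputImage = filter.outputImage else { return nil }

    // Destination texture with the matte's size, writable by Core Image and readable
    // by a fragment shader. Assumption: .bgra8Unorm suits the composite shader.
    let descriptor = MTLTextureDescriptor.texture2DDescriptor(pixelFormat: .bgra8Unorm,
                                                              width: matte.width,
                                                              height: matte.height,
                                                              mipmapped: false)
    descriptor.usage = [.shaderRead, .shaderWrite, .renderTarget]
    guard let destination = device.makeTexture(descriptor: descriptor) else { return nil }

    // Encode the Core Image render into the caller's command buffer.
    let bounds = CGRect(x: 0, y: 0, width: matte.width, height: matte.height)
    context.render(outputImage, to: destination, commandBuffer: commandBuffer,
                   bounds: bounds, colorSpace: CGColorSpaceCreateDeviceRGB())
    return destination
}
```

Under this approach, `updateMatteTextures` would store the returned texture (for example in a hypothetical `filteredMatteTexture` property), and `compositeImagesWithEncoder` would bind it with `renderEncoder.setFragmentTexture(filteredMatteTexture, index: 4)` in place of `alphaTexture`. Allocating the destination once and reusing it across frames would avoid creating a new texture every frame.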