Docstring format

I use the NumPy docstring convention as a basis. If a function's input parameter or return value is a pandas DataFrame with predetermined columns, I add a reStructuredText-style table describing those columns to that parameter's description. For example:

```python
import numpy as np
import pandas as pd


def random_dataframe(no_rows):
    """Return dataframe with random data.

    Parameters
    ----------
    no_rows : int
        Desired number of data rows.

    Returns
    -------
    pd.DataFrame
        Dataframe with randomly selected values. Data columns are as follows:

        ========== ==============================================================
        rand_int   randomly chosen whole numbers (as `int`)
        rand_float randomly chosen numbers with decimal parts (as `float`)
        rand_color randomly chosen colors (as `str`)
        rand_bird  randomly chosen birds (as `str`)
        ========== ==============================================================

    """
    df = pd.DataFrame({
        "rand_int": np.random.randint(0, 100, no_rows),
        "rand_float": np.random.rand(no_rows),
        "rand_color": np.random.choice(['green', 'red', 'blue', 'yellow'], no_rows),
        "rand_bird": np.random.choice(['kiwi', 'duck', 'owl', 'parrot'], no_rows),
    })
    return df
```
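The same kind of table also works when the dataframe is an input parameter. The sketch below uses a hypothetical function, `mean_int_per_bird`, that expects a dataframe shaped like the output of `random_dataframe`. Only the columns it actually reads are listed as required.

```python
import pandas as pd


def mean_int_per_bird(df):
    """Return the mean of ``rand_int`` for each bird (hypothetical example).

    Parameters
    ----------
    df : pd.DataFrame
        Dataframe with one row per observation. Required columns:

        ========== ==============================================================
        rand_int   whole numbers to average (as `int`)
        rand_bird  bird name used for grouping (as `str`)
        ========== ==============================================================

        Any other columns are ignored.

    Returns
    -------
    pd.Series
        Mean of ``rand_int`` per bird, indexed by bird name.

    """
    # Group the rows by bird name and average the integer column per group.
    return df.groupby("rand_bird")["rand_int"].mean()
```

For example, `mean_int_per_bird(pd.DataFrame({"rand_int": [1, 3, 10], "rand_bird": ["owl", "owl", "kiwi"]}))` gives 2.0 for `owl` and 10.0 for `kiwi`.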

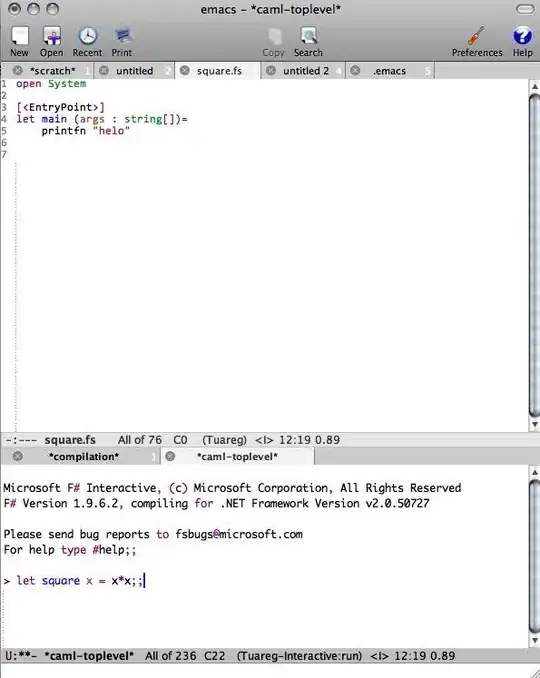
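Because the column table is plain text inside the docstring, it can drift out of sync with the code. The following is a minimal sketch of a check that could run in a unit test. `documented_columns` and `example_frame` are hypothetical names, not part of numpy, pandas or Sphinx. The check assumes the table layout used here: a simple reStructuredText table without a header row, bounded by lines of `=` rules, with the column name as the first word of each row.

```python
import pandas as pd


def documented_columns(docstring):
    """Return the column names listed in the first simple table of a docstring.

    Assumes a header-less simple table bounded by two ``=`` rule lines,
    with the column name as the first whitespace-separated word of each row.
    """
    lines = docstring.splitlines()
    # Rule lines consist only of '=' characters and the spaces between rules.
    rules = [i for i, line in enumerate(lines)
             if line.strip() and set(line.strip()) <= {"=", " "}]
    if len(rules) < 2:
        return []
    start, end = rules[0], rules[1]
    # Take the first word of every non-blank row between the two rules.
    return [line.split()[0] for line in lines[start + 1:end] if line.strip()]


def example_frame(no_rows):
    """Return an example dataframe.

    Returns
    -------
    pd.DataFrame
        Data columns are as follows:

        ========== ==========================
        rand_int   whole numbers (as `int`)
        rand_bird  bird names (as `str`)
        ========== ==========================

    """
    return pd.DataFrame({"rand_int": range(no_rows), "rand_bird": ["owl"] * no_rows})


# Compare the documented columns with the columns the function actually returns.
assert documented_columns(example_frame.__doc__) == list(example_frame(3).columns)
```

Comparing ordered lists also catches columns that are documented in a different order than they are returned. A set comparison would relax that if the order does not matter.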

Bonus: Sphinx compatibility

The docstring format above is compatible with Sphinx's autodoc extension, provided the `sphinx.ext.napoleon` (or `numpydoc`) extension is also enabled so that the NumPy-style sections are parsed. This is what the docstring looks like in HTML documentation generated automatically by Sphinx (using the nature theme):