I have some data in a DataFrame that I need to convert to JSON and store in Azure Blob Storage. Is there a way to achieve this? Below are the steps I have tried, running from spark-shell.

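import org.apache.spark.sql.SaveMode

val df = spark.sql("select * from historic_data.all_historic_data")
df.show() // show() returns Unit, so it is called separately instead of being chained into the assignment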

spark.conf.set("fs.azure.account.key.<STORAGE_ACCOUNT_NAME>.blob.core.windows.net","STORAGE_ACCOUNT_KEY")

df.write.mode(SaveMode.Append).json("wasbs://BlobStorageContainer@<STORAGE_ACCOUNT_NAME>.blob.core.windows.net/<FOLDER_PATH_OF BLOB>/")

When I run the write command, I get the following error:

org.apache.hadoop.fs.azure.AzureException: com.microsoft.azure.storage.StorageException: The specifed resource name contains invalid characters.

at org.apache.hadoop.fs.azure.AzureNativeFileSystemStore.retrieveMetadata(AzureNativeFileSystemStore.java:2208)

at org.apache.hadoop.fs.azure.NativeAzureFileSystem.getFileStatusInternal(NativeAzureFileSystem.java:2673)

at org.apache.hadoop.fs.azure.NativeAzureFileSystem.getFileStatus(NativeAzureFileSystem.java:2618)

at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1448)

at org.apache.spark.sql.execution.datasources.InsertIntoHadoopFsRelationCommand.run(InsertIntoHadoopFsRelationCommand.scala:92)
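One thing that may be worth checking against this error is the container name in the `wasbs://` URI. Azure requires container names to be 3 to 63 characters long, made up of lowercase letters, digits and hyphens, starting and ending with a letter or digit, with no consecutive hyphens. The sketch below is only a local sanity check under that assumption, not a confirmed diagnosis; `ContainerNameCheck` and `isValidContainerName` are hypothetical names and not part of Spark or the Azure SDK.

```scala
// Hypothetical helper (not a Spark or Azure SDK API): checks a name against
// the documented Azure container naming rules before it is used in a wasbs:// URI.
object ContainerNameCheck {
  def isValidContainerName(name: String): Boolean = {
    // Only lowercase letters, digits and hyphens are allowed
    val allowedChars = name.forall { c =>
      (c >= 'a' && c <= 'z') || (c >= '0' && c <= '9') || c == '-'
    }
    name.length >= 3 && name.length <= 63 && // length must be 3 to 63
      allowedChars &&
      name.head != '-' && name.last != '-' && // must start and end with a letter or digit
      !name.contains("--")                    // no consecutive hyphens
  }
}

// The container name used in the write call above contains uppercase letters
println(ContainerNameCheck.isValidContainerName("BlobStorageContainer")) // false
// An all-lowercase example name, chosen here only for illustration
println(ContainerNameCheck.isValidContainerName("historic-data"))        // true
```

If the real container name passes this check, the cause is presumably elsewhere, for example in the folder path part of the URI.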

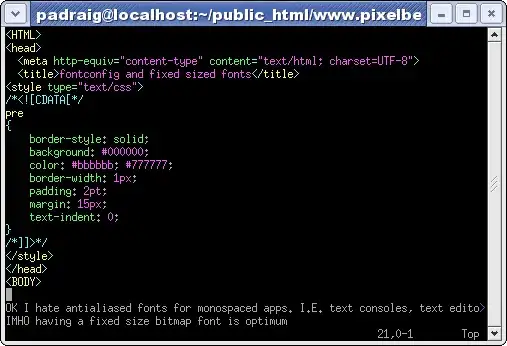

Is there anything i have missed while providing blob details? below is screen shot of my storage account :

I haven't seen any similar question here about writing a DataFrame as JSON to Azure Blob Storage.