I use SQL Server 2005 as a data store for a lot of data I do analytic work on. This is not a transactional database: I am not hitting it with updates or capturing real-time data. I get a few gigs of data from my clients, load it into SQL Server, and do a series of manipulations. I then grab bits of this data and pull them into R, where I do most of my analysis. After that I push a little data back into tables in SQL Server and maybe do a join or two.

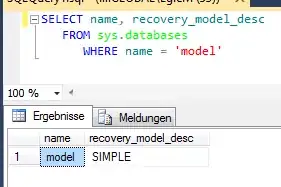

I have a heck of a time with the logs in SQL Server getting big, and I assume creating them adds some degree of overhead. How can I configure SQL Server so that it runs with little or no logging? If things get corrupted, I am happy to just start from the beginning. Any ideas how to make this all faster?

BTW, no need to tell me how to shrink logs; I'm already doing that. But I wish I didn't have to create the logs in the first place. I'm only using the DB to house the data because it's too big to fit into memory in R.

Should I be using a simpler DB than SQL Server? Feel free to tell me I am killing an ant with a sledgehammer. But please recommend a more appropriately sized hammer. :)