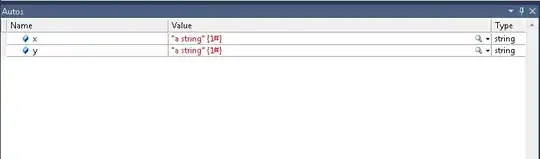

We are running BlueData 3.7, and I started a Cloudera 5.14 cluster with Spark and YARN. I read a CSV file from Qumulo over NFS through a DataTap (DTAP) into the Spark container, apply a small filter, and save the result as a Parquet file through another DTAP to our external Cloudera HDFS cluster. Everything works except the write to the external HDFS cluster. I can read from HDFS through the DTAP, and I can write to the Qumulo NFS through its DTAP; only the write to HDFS through the DTAP fails. The error says that my user, which is in the AD group of EPIC, has no permission to write (see the screenshot).

Any idea why that is? The DTAP to HDFS is NOT configured as read-only, so I expected it to allow both reads and writes.

Note:

- I already checked the access rights in Cloudera.

- I checked the AD credentials in the BD cluster.

- I can read with these credentials from HDFS.
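For anyone wanting to double-check the permissions side: in the basic HDFS permission model, creating a file needs write and execute permission on the target directory, while reading does not need the write bit, so reads can succeed where writes fail. Below is a minimal sketch that takes one line of `hdfs dfs -ls -d <dir>` output and reports whether a given user could write there. The helper name `can_write`, the sample line, and the user and group names are hypothetical, and the check deliberately ignores HDFS ACLs, superuser status, and anything Sentry or the DataTap configuration might add.

```python
def can_write(ls_line, user, groups):
    """Return True if `user` (a member of `groups`) has write and execute
    permission on the directory described by one line of
    `hdfs dfs -ls -d` output. Plain permission bits only, no ACLs."""
    fields = ls_line.split()
    # Expected layout: perms, replication, owner, group, size, date, time, path
    perms, owner, group = fields[0], fields[2], fields[3]
    bits = perms[1:10]  # drop the leading type character, e.g. 'd'
    if user == owner:
        triad = bits[0:3]   # owner class
    elif group in groups:
        triad = bits[3:6]   # group class
    else:
        triad = bits[6:9]   # other class
    return triad[1] == "w" and triad[2] in ("x", "t")


# Hypothetical example line and identities, for illustration only
sample = "drwxr-xr-x   - hdfs supergroup          0 2018-03-01 10:00 /data/out"
print(can_write(sample, "alice", ["epic"]))
```

If this returns False for the user and groups that actually reach HDFS, the directory permissions would explain a read that works alongside a write that is denied.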

Here is my code:

$ pyspark --master yarn --deploy-mode client --packages com.databricks:spark-csv_2.10:1.4.0

>>> from pyspark.sql import SQLContext

>>> sqlContext = SQLContext(sc)

>>> df = sqlContext.read.format('com.databricks.spark.csv').options(header='true', inferschema='true').load('dtap://TenantStorage/file.csv')

>>> df.take(1)

>>> df_filtered = df.filter(df.incidents_85_99 == 0)

>>> df_filtered.write.parquet('dtap://OtherDataTap/airline-safety_zero_incidents.parquet')

Error message:

hdfs_access_control_exception: permission denied